14 Aug 2024 SharePoint Lens – An AI Indexer, Browser and RAG Chatbot for SharePoint

Welcome to SharePoint Lens, the new way to get a more efficient SharePoint experience. By leveraging document tagging, a Power BI interface and a chatbot for Q&A over the documents, you can streamline your SharePoint interaction and knowledge extraction, helping you to find what you need faster and more easily, boosting productivity.

SharePoint Lens is the AI-enabled evolution of our Advanced SharePoint Indexer solution which we’ve discussed in previous blog posts. Before we delve into SharePoint Lens, let’s first summarise the Advanced SharePoint Indexer solution and see how it has evolved. As we explained in our Creating an Advanced SharePoint Indexer to Maintain an Organised Common Document Repository article, the first version of the Advanced SharePoint Indexer allowed you to both tag documents stored in SharePoint (using SharePoint columns) and also, via a Power BI application, to efficiently locate and access the tagged documents without having to browse tediously through numerous SharePoint folders.

In our follow-up article, Updating and Enhancing our Advanced SharePoint Indexer, we explained how the backend of the Advanced SharePoint Indexer was refactored. The primary goal was to streamline the ETL process and reduce costs by harnessing new features from the Microsoft Power Platform.

Here at ClearPeaks we have been using the Advanced SharePoint Indexer and its evolutions, which we internally refer to as InnoHub, for several years now, to efficiently index and browse our own SharePoint content and to be able to find documents faster.

Recently, with the rise of GenAI, we explored how to further improve the user experience with this new technology, and we saw the possibilities for advancements in three key areas:

- Automated Document Summaries: By automatically generating and storing summaries of the SharePoint documents using LLMs, users would spend less time searching for the required document. Document names and locations are not always representative of a document’s content or level of detail, so previously a user might have had to open and browse through several documents before finding the one they needed. To address this, a summary of each SharePoint document is now generated automatically using AI.

- AI Chatbot Interface: AI lets users question the documents, their content and the summaries in a more natural manner, so we implemented a Retrieval-Augmented Generation (RAG) chatbot. For more information on RAG chatbots, please read our recent blog post Building a RAG LLM Chatbot Using Cloudera Machine Learning.

- SharePoint Tagging Accuracy: By ensuring high data quality standards on SharePoint tags, we can improve user experience and adoption. As SharePoint tags are set manually by employees, they are prone to errors, and errors in the metadata (in our case department, industry, customer name, employee, technology, etc.) are not acceptable if we want a trustworthy document indexer. To solve this, we need a system that automatically generates tags for each document and compares them with the manually set tags, so that discrepancies can be flagged for review. This feature is under development and so is not covered in this article.

A few months ago, we started working on the above areas, evolving our Advanced SharePoint Indexer into the SharePoint Lens, an AI indexer and browser for SharePoint that includes a RAG chatbot. Today, it is already of great help to our organisation, and it can also be used in yours!

The following video shows a quick demo with a focus on the new chatbot interface on our own SharePoint Lens instance (InnoHub). In the demo, we prompt the chatbot via its UI to retrieve internal company information (note that some details are masked for confidentiality reasons):

As you can see in the video, thanks to the automated document summaries, the user can get an overview of the current knowledge of our company on any subject, without needing to access all the relevant documents individually. Additionally, a URL is provided for each document, guaranteeing quick access to the SharePoint file.

While traditional SharePoint solutions calculate KPIs from the content metadata (most accessed documents, top users, most searched documents, etc.), SharePoint Lens provides insights about the actual content of the documents. SharePoint Lens is designed as a plug-and-play solution, making it easy to port to other corporate SharePoint libraries. As there is no need to retrain a Large Language Model, the cost and complexity of the solution are low, making it a great option for gaining visibility of the content stored in SharePoint and leveraging it effectively. We’ll look at this in more detail later.

Now let’s dive into the technical details of how we implemented the automated document summaries and the AI chatbot interface mentioned above using Azure services.

Automated Document Summaries

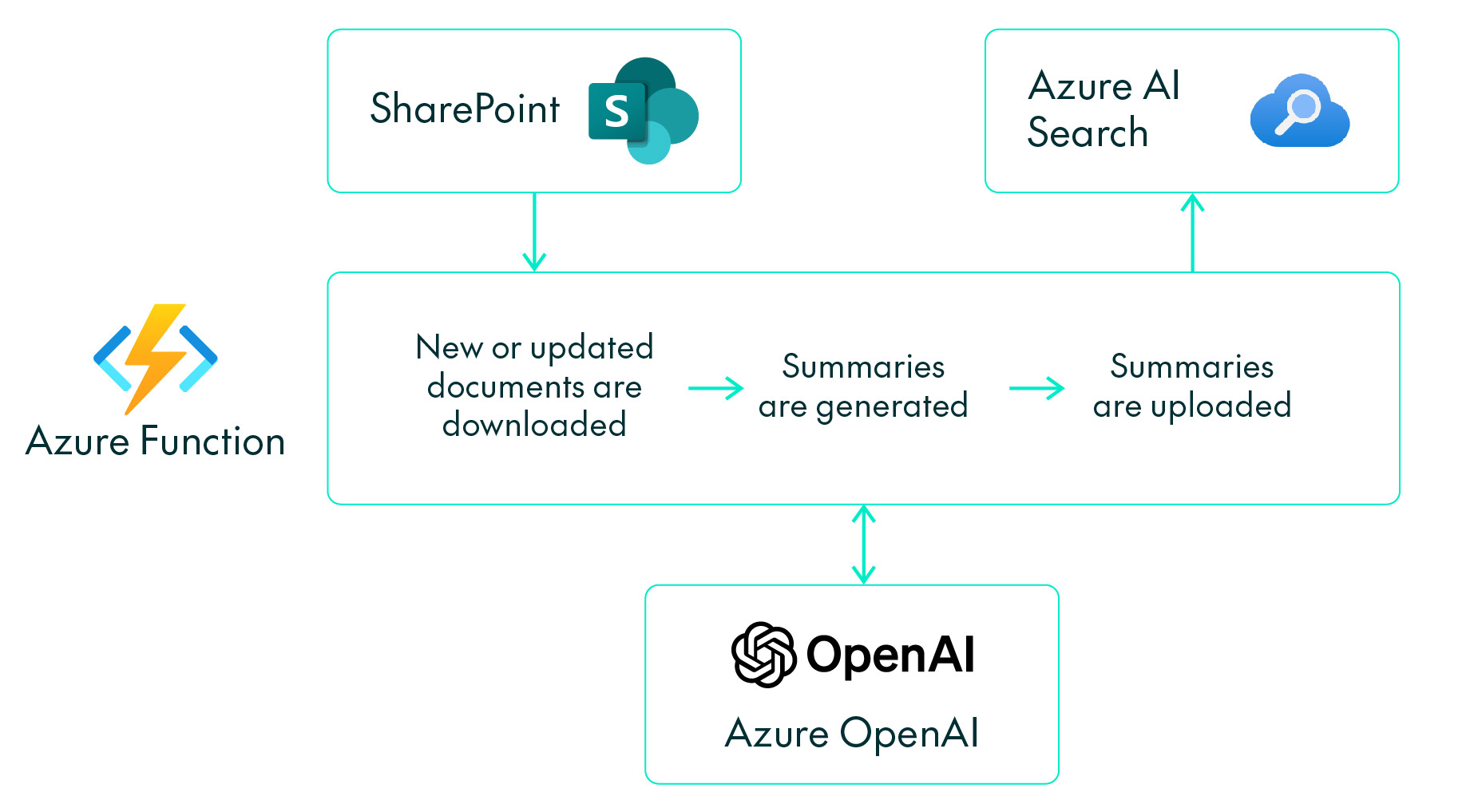

First let’s explain how to automatically generate SharePoint document summaries. We developed an application that does the following:

- A periodic routine identifies new or updated SharePoint documents that need to be summarised.

- The application downloads the corresponding documents from SharePoint, and using GPT-4o, generates a summary of around 100-150 words for each of them.

- The generated summaries are then stored in a search engine for later consumption.
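The three steps above can be sketched in a few lines of Python. This is only an illustration of the flow, not our production code: the `Document` class, the `indexed` dictionary of last-summarised timestamps and the `fake_summarise` stand-in for the GPT-4o call are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Callable, Dict, List


@dataclass
class Document:
    # Hypothetical, simplified view of a SharePoint document.
    doc_id: str
    url: str
    modified: datetime
    text: str


def needs_summary(doc: Document, indexed: Dict[str, datetime]) -> bool:
    """True when the document is new or has changed since it was last summarised."""
    last_indexed = indexed.get(doc.doc_id)
    return last_indexed is None or doc.modified > last_indexed


def run_summary_routine(
    documents: List[Document],
    indexed: Dict[str, datetime],
    summarise: Callable[[str], str],
) -> List[dict]:
    """Summarise only new or updated documents and return the records to index."""
    records = []
    for doc in documents:
        if not needs_summary(doc, indexed):
            continue
        records.append({
            "id": doc.doc_id,
            "url": doc.url,
            "summary": summarise(doc.text),
            "modified": doc.modified.isoformat(),
        })
        # Remember when this document was last summarised.
        indexed[doc.doc_id] = doc.modified
    return records


def fake_summarise(text: str) -> str:
    # Stand-in for the LLM call (assumption): simply keeps the first 150 words.
    return " ".join(text.split()[:150])
```

In a real deployment, `summarise` would call the LLM and the returned records would be uploaded to the search engine; both are left out here to keep the sketch self-contained.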

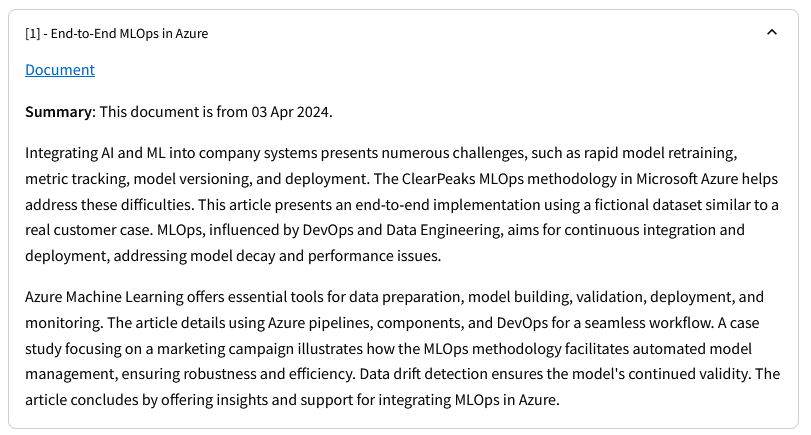

The following picture shows an example of a ClearPeaks blog summary shown in the SharePoint Lens application:

Figure 1: Example of a ClearPeaks blog summary shown in the SharePoint Lens application

To implement this application, we used the following Azure services:

- An Azure Function in charge of the daily running of the logic.

- Azure AI Search (previously Azure Cognitive Search) to implement the search engine.

- Azure OpenAI with GPT-4o as the Large Language Model.

The following schema depicts the automated summary generation:

Figure 2: Process to generate the summaries

AI Chatbot

AI Chatbot Backend

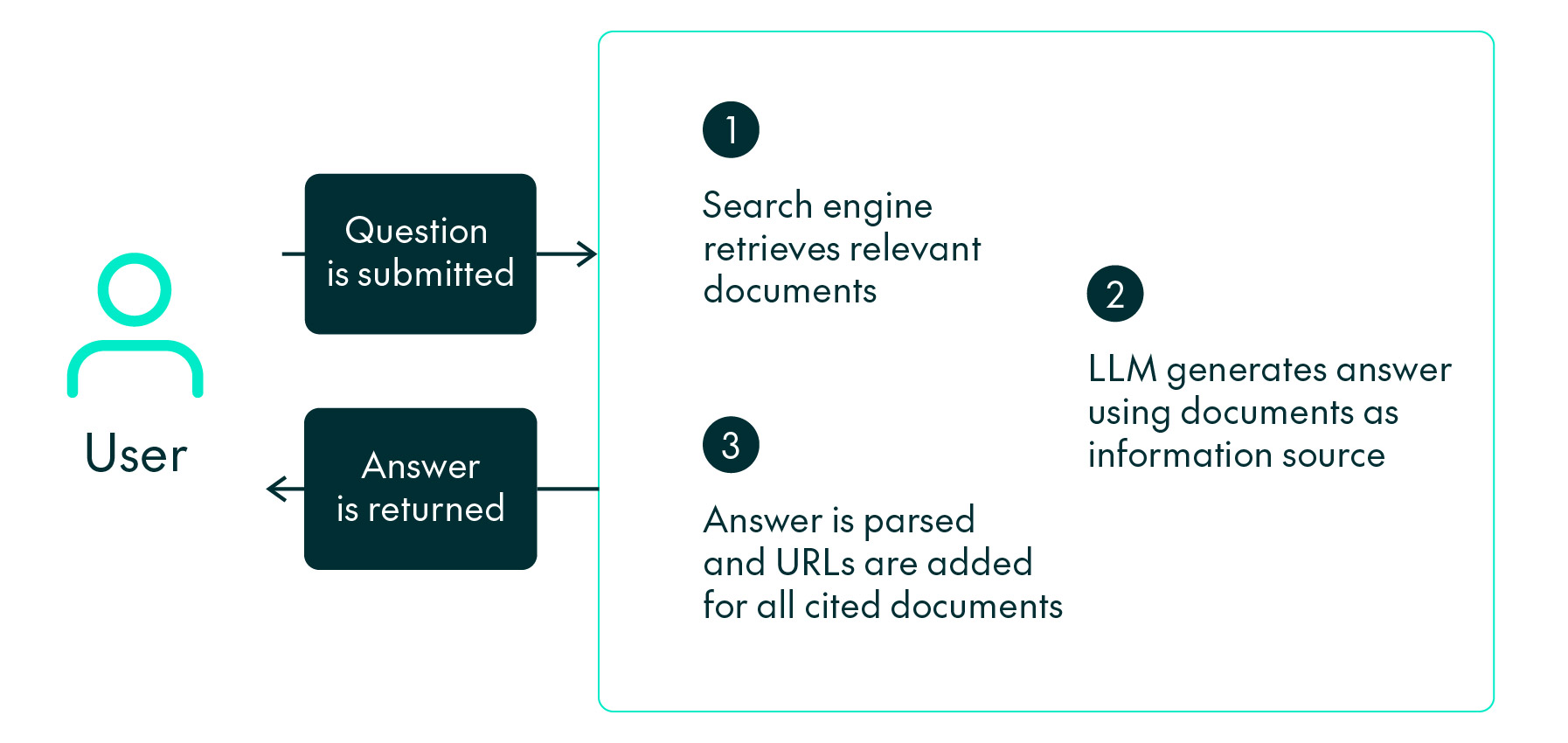

The goal of the SharePoint Lens chatbot is to answer questions using information that isn’t publicly available on the internet, so commercial Large Language Models cannot have been trained on it. Consequently, the architecture follows the Retrieval-Augmented Generation (RAG) framework, which combines a Large Language Model like GPT-4o with a search engine where the corporate data is stored.

Figure 3: Main steps on how answers are generated

Let’s start with a simple question such as “What projects have we done in relation to technology X?”. To get the answer, we first need to identify what corporate data is relevant to this query, and in this case the corporate data corresponds to the automated summaries generated in the previous section, which we stored in the Azure AI Search service.

This service uses, amongst other metrics, the semantic similarity between the user’s query and the indexed documents to determine relevancy. Even if the words written by the user are not an exact match for the words in a document, the document will still show up as relevant when the underlying meaning is similar. This search technique, known as “semantic search”, has gained a lot of traction recently due to the rising popularity of AI chatbots like this one.
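To give an intuition of how semantic similarity can rank documents, here is a minimal sketch using cosine similarity between vectors. It is not how Azure AI Search works internally, which combines several metrics; the three-dimensional vectors and document names below are hand-made toy values, whereas in practice the vectors would come from an embedding model.

```python
import math
from typing import Dict, List, Tuple


def cosine_similarity(a: List[float], b: List[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_by_similarity(
    query_vec: List[float],
    doc_vecs: Dict[str, List[float]],
    top_k: int = 20,
) -> List[Tuple[str, float]]:
    """Return the top_k (document id, score) pairs, most similar first."""
    scored = [
        (doc_id, cosine_similarity(query_vec, vec))
        for doc_id, vec in doc_vecs.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Toy vectors (assumption): real embeddings have hundreds of dimensions.
doc_vecs = {
    "iceberg-blog": [0.9, 0.1, 0.0],
    "hr-policy": [0.0, 0.2, 0.9],
    "lakehouse-notes": [0.8, 0.3, 0.1],
}
query_vec = [1.0, 0.2, 0.0]
ranking = rank_by_similarity(query_vec, doc_vecs, top_k=2)
```

The two documents whose vectors point in roughly the same direction as the query are ranked first, even though no keyword matching is involved.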

Once we have a list of documents that could answer the question, we prepare a longer prompt to send to the Azure OpenAI service, including:

- The user-submitted question.

- The top 20 search results we got from the search engine.

- Instructions to answer using only those search results as sources of information.

- Instructions to cite all documents used to generate the answer.

- Examples of what a correct answer looks like, so the generated answer follows the same format.
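As an illustration, the prompt described above could be assembled roughly as follows. The wording of `SYSTEM_INSTRUCTIONS` and `EXAMPLE_ANSWER`, the `title` and `summary` field names, and the `build_messages` helper are assumptions made for this sketch; the actual prompt used in SharePoint Lens is not reproduced here.

```python
from typing import Dict, List

# Hypothetical instruction text, written for this example only.
SYSTEM_INSTRUCTIONS = (
    "Answer the question using only the numbered sources below. "
    "Cite every source you use with its number in square brackets, e.g. [1]. "
    "If the sources do not contain the answer, say so."
)

# Hypothetical example of a correctly formatted answer.
EXAMPLE_ANSWER = (
    "Example of the expected format:\n"
    "We have delivered two projects with technology X [1][3]."
)


def build_messages(
    question: str,
    results: List[Dict[str, str]],
    max_results: int = 20,
) -> List[Dict[str, str]]:
    """Build chat messages from the question and the top search results."""
    sources = []
    # Number each search result so the model can cite it by identifier.
    for number, result in enumerate(results[:max_results], start=1):
        sources.append(f"[{number}] {result['title']}\n{result['summary']}")
    user_content = (
        "Sources:\n" + "\n\n".join(sources) + f"\n\nQuestion: {question}"
    )
    return [
        {"role": "system", "content": SYSTEM_INSTRUCTIONS + "\n\n" + EXAMPLE_ANSWER},
        {"role": "user", "content": user_content},
    ]
```

The returned list follows the role/content message format used by chat completion APIs, so it could be passed on to the LLM service as is.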

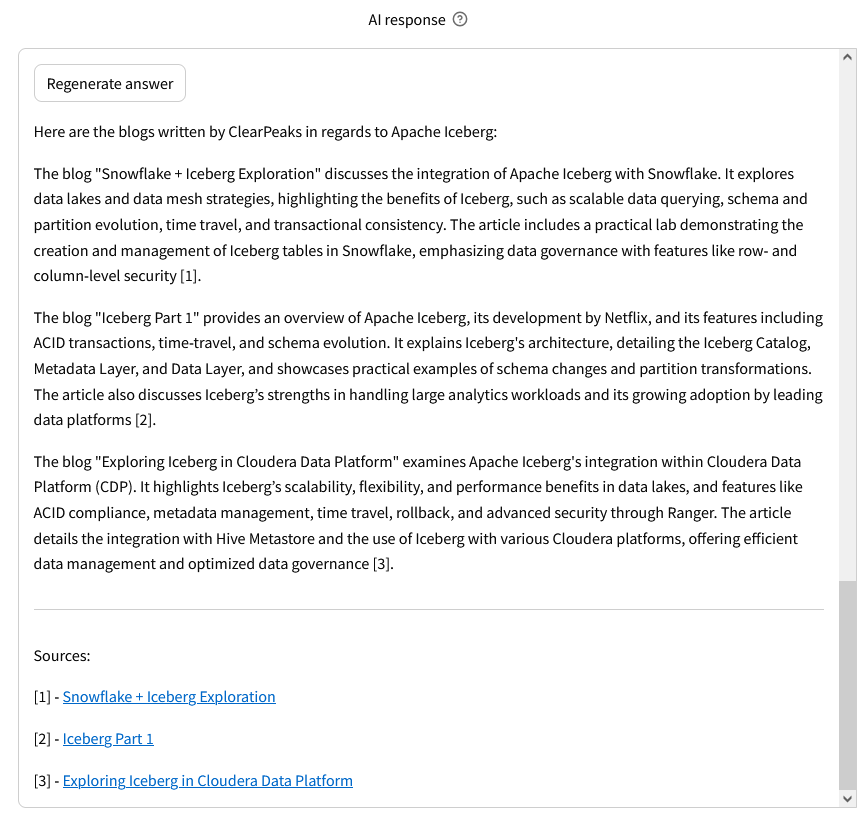

We now submit the prompt to GPT-4o and get the generated answer back. In the picture below you can see an answer shown to the user:

Figure 4: Example of an answer when asked which blogs we have written about Apache Iceberg

The main advantages of this setup are:

- Using RAG architectures means you don’t have to retrain an LLM with personal documents, leading to significant cost savings and improved accuracy. Fine-tuning LLMs to memorise entire documents verbatim is not reliable enough.

- It lets us prompt the LLM to add citations when generating answers. We can add an identifier to each search result, and then request that the results are cited using those identifiers wherever relevant. This is not possible with fine-tuned LLMs.

- LLMs can only handle a limited amount of text at once, known as the context length or context window, which restricts the number of documents we can provide to GPT-4o when answering a question. So using summaries created for each document is very convenient, allowing us to include up to ten times as many corporate documents when generating answers, making the context more complete and ensuring that important information is not missed due to limited search results.
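The effect of the context window can be illustrated with some simple arithmetic. In the sketch below, the rule of thumb of roughly four tokens for every three English words is only an approximation (a real tokenizer would give exact counts), and the word counts and token budget in the usage note are hypothetical figures chosen for the example.

```python
from typing import List


def estimate_tokens(text: str) -> int:
    """Very rough estimate: about 4 tokens for every 3 words, rounded up."""
    words = len(text.split())
    return (words * 4 + 2) // 3


def fit_to_budget(texts: List[str], token_budget: int) -> List[str]:
    """Keep texts in ranked order until the next one would exceed the budget."""
    selected: List[str] = []
    used = 0
    for text in texts:
        cost = estimate_tokens(text)
        if used + cost > token_budget:
            break
        selected.append(text)
        used += cost
    return selected
```

With a hypothetical budget of 4,000 tokens, 150-word summaries (about 200 tokens each) would allow 20 documents to fit, whereas 1,500-word documents (about 2,000 tokens each) would allow only 2.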

AI Chatbot Web Interface

For the web user interface (UI), we decided to host a Streamlit application on an Azure Web App service. Streamlit is an open-source Python tool that enables the creation of interactive applications with minimal effort.

To provide an intuitive user experience, the web UI was designed as follows:

- We split the UI into two vertical panels. In the left panel you can see the search results you got for your query, each corresponding to a SharePoint document along with its summary, and in the right panel you’ve got the answer generated by GPT-4o.

- Below these two panels, there’s an option to ask follow-up questions in case you need more clarification or want the answer in a different format. The follow-up response is generated in the same way as the initial response, except that the search is not carried out again.

- Citation markers are added at the end of each mention as a number between two brackets [1].

For each citation, there’s a hyperlink at the end of the response so that you can directly access the original documents stored in SharePoint.
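One possible way to turn the citation markers into the list of hyperlinks is sketched below. The `cited_numbers` and `append_links` helpers and the `urls` mapping from citation number to SharePoint URL are hypothetical names used for illustration, not the actual implementation.

```python
import re
from typing import Dict, List


def cited_numbers(answer: str) -> List[int]:
    """Return citation numbers such as [1], in order of first appearance."""
    seen: List[int] = []
    for match in re.finditer(r"\[(\d+)\]", answer):
        number = int(match.group(1))
        if number not in seen:
            seen.append(number)
    return seen


def append_links(answer: str, urls: Dict[int, str]) -> str:
    """Append one line per cited document with the link to the original file."""
    lines = [answer, "", "Sources:"]
    for number in cited_numbers(answer):
        # Skip markers that do not correspond to any search result.
        if number in urls:
            lines.append(f"[{number}] {urls[number]}")
    return "\n".join(lines)
```

Ignoring markers with no matching search result is a design choice in this sketch: it avoids showing a broken link if the model produces a citation number that was never provided.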

Figure 5: Example of a follow-up request asking for a shorter response
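The follow-up mechanism can be sketched as extending the existing conversation rather than starting a new one. In this minimal example, `ask_follow_up` is a hypothetical helper and `complete` stands for whatever function sends the messages to the LLM and returns its reply; no search is performed because the sources retrieved for the first question are already part of the history.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def ask_follow_up(
    history: List[Message],
    follow_up: str,
    complete: Callable[[List[Message]], str],
) -> List[Message]:
    """Append the follow-up question and the model's reply to the conversation.

    The search step is skipped: the sources from the initial question are
    already contained in `history`.
    """
    messages = history + [{"role": "user", "content": follow_up}]
    reply = complete(messages)
    return messages + [{"role": "assistant", "content": reply}]
```

Because the original list is not modified, the application can keep the initial exchange intact and store each follow-up as a new, longer conversation.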

Conclusions

Before wrapping up this blog post, there are a couple of technical considerations we should mention:

- Remember that as we are using summaries of documents instead of the complete documents themselves, there is obviously the risk of details being lost in the process. To mitigate this risk, we will incorporate an option into the web interface allowing users to choose between using a large number of summarised documents or fewer complete documents to generate an answer, based on their needs.

- The development of the solution started with GPT-3.5-Turbo due to its lower API usage costs. However, we saw that it was not capable enough to generate correct answers and often mixed up topics and hallucinated, so we switched to GPT-4o and got much better results that met our expectations. That said, the LLM used can be changed and adapted to each case. There are LLMs that are better than others in certain areas, so depending on the content in SharePoint we can help you to identify which LLM is best for you.

In this blog post we’ve presented SharePoint Lens, an AI indexer, browser and RAG chatbot for SharePoint. We’ve seen how using chat assistants when dealing with document stores in corporate libraries like SharePoint can speed up the location and management of documents, and we’ve also seen how automatic summarisation can significantly save time.

The UI and overall SharePoint Lens application are in constant development, and we’re planning to add new features and improvements, like filtering documents by tags. In a future blog post, we will show you how the accuracy of SharePoint tags is enhanced by the AI system’s ability to generate tags.

We hope this article has provided you with some valuable insights into how all of this works behind the scenes, and what types of services are required to run it. If you need to improve your SharePoint interaction experience, or if you need help implementing AI use-cases, or developing AI chatbots in Azure or other tech stacks such as Snowflake, Cloudera, AWS, etc., don’t hesitate to contact our team of AI experts.