Ingesting SAP Data with NiFi and CDP

11 Jul 2024

A common requirement in today’s business world is to move data generated by various tools to a single location (a data warehouse, a data lake or, more recently, a data lakehouse) for reporting and analysis. This need keeps growing as companies adopt more and more tools and technologies across their various verticals.

Today, one of the most popular solutions for moving data across different systems is Apache NiFi. We’ve already talked about NiFi extensively in previous blog posts: for example, how to connect to ActiveMQ, how to perform Change Data Capture (CDC) with NiFi, how to monitor the health of NiFi flows, how to expose REST APIs with NiFi, and how to use MiNiFi to build an IoT ecosystem.

NiFi is highly extensible, so even if a connector for a certain technology is missing, there are still several ways to fetch data from it and push data to it: for example, we can write custom processors, or combine existing processors to do the same job. This is the scenario we explore in this post, where we show you how to connect to SAP, read from it, and write data back to it.

As you may already know, SAP is a leading Enterprise Resource Planning (ERP) software system. It provides organisations with a comprehensive suite of applications to manage various business processes, including finance, human resources, the supply chain, manufacturing, and more.

Within the SAP ecosystem, various entities and components such as Tables, Remote Function Calls (RFCs), Business Application Programming Interfaces (BAPIs), Views, and Classes contribute to the overall functionality of the ERP system. Enterprises and organisations commonly need to extract this data from the SAP ecosystem so that they can manage and analyse it in their data analytics platform, such as the Cloudera Data Platform (CDP), alongside data from other applications and systems.

And this is where NiFi comes into play! NiFi is widely available in various CDP form factors, including private and public cloud environments. It serves as an ideal solution for ingesting SAP data and making it available for a range of analytical, BI, and AI purposes.

In this blog post, we will look at ingesting these SAP entities into CDP:

- Tables

- Views

- RFCs

- BAPIs

- Class Methods

And what’s more, we will show you how to write back to SAP.

CDATA

NiFi does not ship with a processor to pull data directly from SAP; however, it can work with third-party connectors for many different systems. In our case, to move these SAP entities into CDP, we used a CDATA driver.

CDATA is a company specialising in creating connectors and drivers between different systems. We used the SAP ERP Driver, described here: Bridge SAP Connectivity with Apache NiFi (cdata.com).

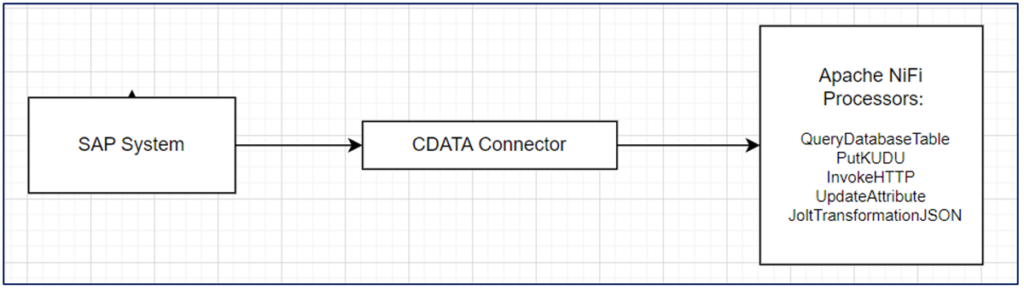

The CDATA driver uses its built-in SQL engine to process SQL statements, including JOINs, acting as a bridge between SAP and NiFi and enabling seamless data transfer and synchronisation between the two environments:

Figure 1: Representation of SAP-NiFi connection using the CDATA driver

DBCPConnectionPool Service Configuration

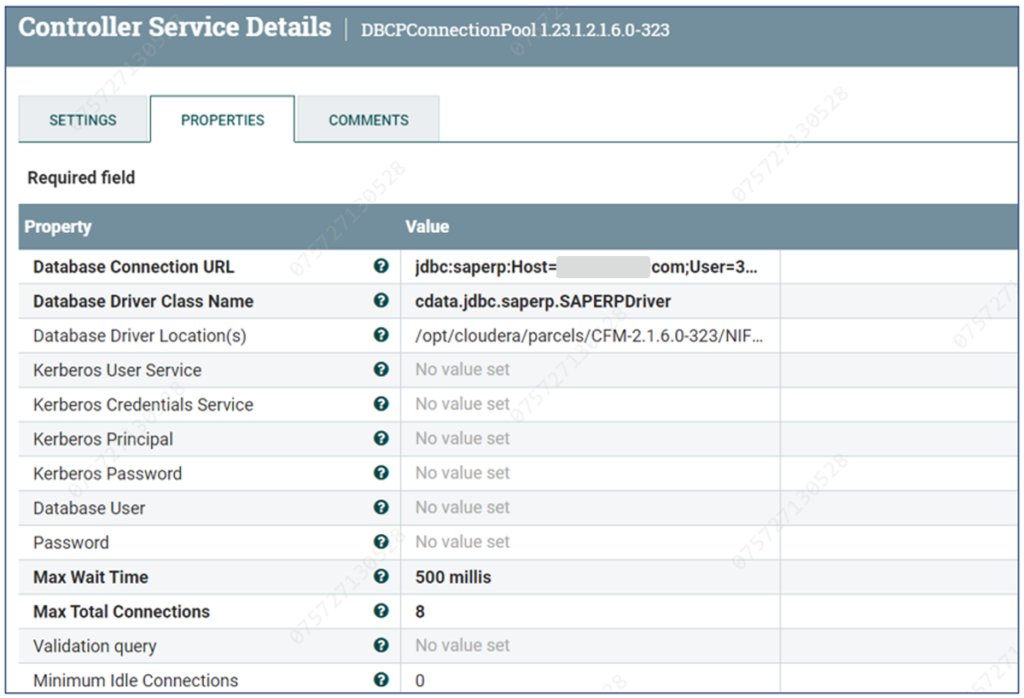

To use the CDATA connector in Cloudera Flow Management (CFM), which is powered by NiFi, we need to create a DBCPConnectionPool controller service. This service manages a pool of connections, providing efficient, reusable connections to the target system via the CDATA driver.

In NiFi, the DBCPConnectionPool controller service establishes a pool of database connections. Its main configuration properties are:

- Database Connection URL: Specifies the URL used to connect to the database.

- Database Driver Class Name: Identifies the Java class used for the database driver.

- Database Driver Location: Indicates the location of the database driver JAR file.

- Additional Properties: Optional properties that may be required to configure the database connection.

- Validation Query: An optional SQL query used to validate the connection.

Figure 2: The DBCPConnectionPool service to connect to SAP
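To make the pooling idea concrete, here is a minimal Python sketch of what a connection pool does: it opens a fixed number of connections up front, lends them out, runs an optional validation query on each borrowed connection, and puts them back for reuse. It is an illustration only, not how DBCPConnectionPool works internally (NiFi’s service is built on Apache Commons DBCP); the SimplePool class is hypothetical, and Python’s built-in sqlite3 module stands in for the CDATA driver so that the example runs anywhere.

```python
import queue
import sqlite3
from contextlib import contextmanager
from typing import Callable, Iterator, Optional


class SimplePool:
    """Hypothetical fixed-size pool illustrating connection reuse and validation."""

    def __init__(self, factory: Callable[[], sqlite3.Connection], size: int,
                 validation_query: Optional[str] = None) -> None:
        self._factory = factory
        self._validation_query = validation_query
        self._idle = queue.Queue(maxsize=size)
        for _ in range(size):
            self._idle.put(factory())  # open all connections eagerly

    @contextmanager
    def connection(self, timeout: float = 5.0) -> Iterator[sqlite3.Connection]:
        # Block until a connection is free (raises queue.Empty after `timeout`).
        conn = self._idle.get(timeout=timeout)
        try:
            if self._validation_query:
                try:
                    conn.execute(self._validation_query)  # like the Validation Query property
                except sqlite3.Error:
                    conn = self._factory()  # replace a broken connection
            yield conn
        finally:
            self._idle.put(conn)  # return the (possibly replaced) connection
```

Borrowing twice from a pool of size 1 hands back the same connection object, which is exactly the reuse that saves the cost of opening a new connection to SAP for every query.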

Here are the connection details that we used to create our Connection Pool:

| Property | Value |
|---|---|
| Database Connection URL | jdbc:saperp:Host=XXXXXX;User=XXXXXX;Password=XXXXXX;Client=400;SystemNumber=00;Views=view1,view2; |
| Database Driver Class Name | cdata.jdbc.saperp.SAPERPDriver |
| Database Driver Location | /opt/cloudera/parcels/CFM-2.1.6.0-323/NIFI/lib/cdata.jdbc.saperp.jar |

Note 1: The view names can be passed as a comma-separated list. In certain cases, they are required when using the Connection Pool to fetch SAP Views, as we will see later. If you are not fetching views, they are not needed.

Note 2: The driver location might change, depending on your specific setup and version.

Note 3: You must have a license to download and use CDATA drivers. The license file should be placed in the same directory as the other JAR files on the NiFi node.
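Since the connection URL is just a prefix followed by Key=Value; pairs, it can be handy to assemble it programmatically, for example when preparing flow parameters for several environments. The Python sketch below is a hypothetical helper (build_saperp_url is not part of NiFi or CDATA) that reproduces the URL format shown in the table. It keeps Client and SystemNumber as strings so that a value such as 00 keeps its leading zero, and it rejects values containing a semicolon rather than guessing at the driver’s quoting rules.

```python
from typing import Optional, Sequence


def build_saperp_url(host: str, user: str, password: str, client: str,
                     system_number: str, views: Optional[Sequence[str]] = None) -> str:
    """Assemble a jdbc:saperp: URL from its Key=Value; properties (hypothetical helper)."""
    props = [
        ("Host", host),
        ("User", user),
        ("Password", password),
        ("Client", client),               # kept as a string, e.g. "400"
        ("SystemNumber", system_number),  # kept as a string so "00" keeps its zeros
    ]
    if views:
        props.append(("Views", ",".join(views)))  # comma-separated view names (Note 1)
    for key, value in props:
        if ";" in value:
            # ';' separates properties; refuse rather than guess the driver's quoting rules
            raise ValueError(f"{key} must not contain ';'")
    return "jdbc:saperp:" + "".join(f"{key}={value};" for key, value in props)
```

Omitting views produces the shorter URL that is enough when you only fetch Tables, RFCs or BAPIs. In a real flow, keep the password in a sensitive NiFi parameter rather than in plain text.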

Once the Connection Pool is ready, we can use it to connect to and extract the various SAP entities we mentioned.

SAP Ingestion with NiFi

In this section, we’ll describe how to use NiFi to ingest the SAP entities we mentioned earlier, and how to write back to SAP. Tables and Views are fairly straightforward to ingest, whereas RFCs, BAPIs and Class Methods are SAP-specific concepts that take a bit more effort to pull correctly.

SAP Table

The most basic SAP entity is the SAP Table. As the name implies, an SAP Table is a table organised into fields and records, holding information related to various business objects and processes.

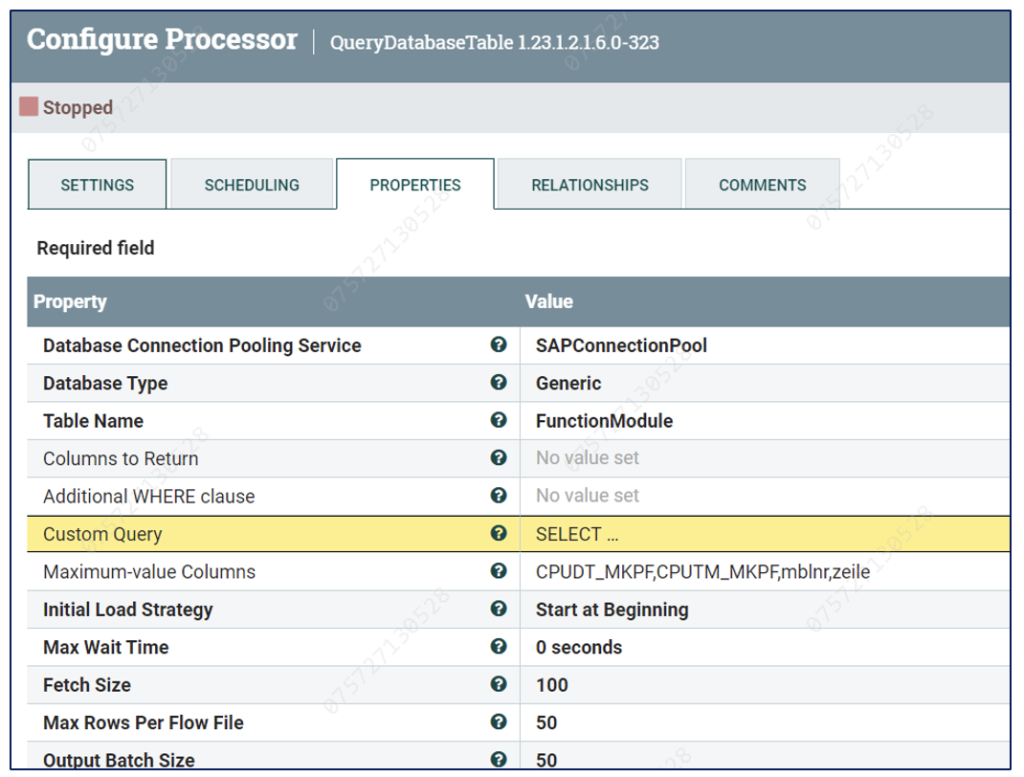

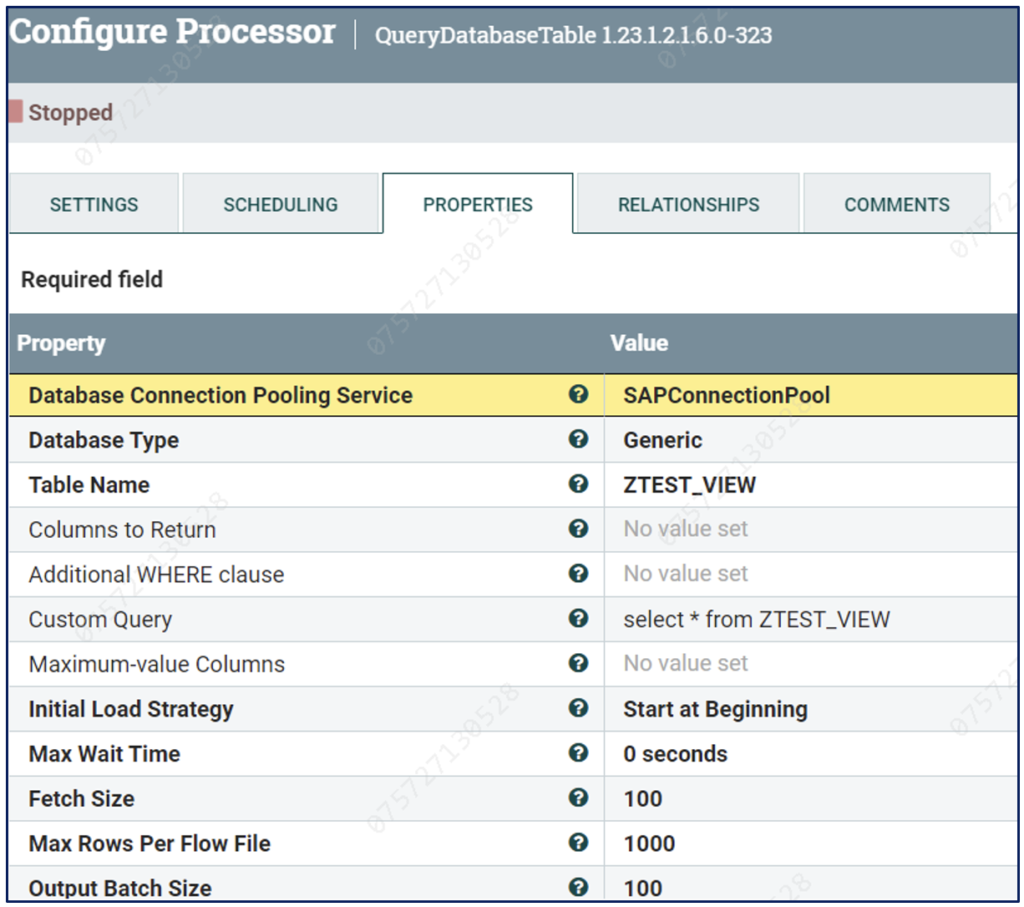

To read from an SAP Table, we use the QueryDatabaseTable processor. Below is a snippet of how we configured the processor, assuming we are querying a table called FunctionModule. Notice how we use the SAPConnectionPool service that we created earlier:

Figure 3: How to use QueryDatabaseTable to fetch an SAP Table

The Custom Query and Maximum-value Columns properties can also be used for finer-grained queries and behaviours, such as incremental loading.
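The following Python sketch simulates the idea behind incremental loading with a maximum-value column: the processor remembers the largest value it has seen and, on the next run, only returns rows above it. This is a simplified in-memory illustration, not NiFi’s implementation (QueryDatabaseTable keeps that value in its processor state and adds the corresponding condition to the SQL it generates); the function name and the ID column are hypothetical.

```python
from typing import Any, Dict, List, Optional, Tuple

Row = Dict[str, Any]


def fetch_incremental(rows: List[Row], max_value_column: str,
                      last_max: Optional[Any]) -> Tuple[List[Row], Optional[Any]]:
    """Return the rows newer than last_max and the new value to keep as state."""
    if last_max is None:
        new_rows = list(rows)  # first run: no state yet, so load everything
    else:
        new_rows = [r for r in rows if r[max_value_column] > last_max]
    if not new_rows:
        return [], last_max  # nothing new: keep the previous state
    return new_rows, max(r[max_value_column] for r in new_rows)
```

Because the state only moves forward, rows that are updated in place without changing the maximum-value column are not picked up again, so the column should be one that grows with every insert or change.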

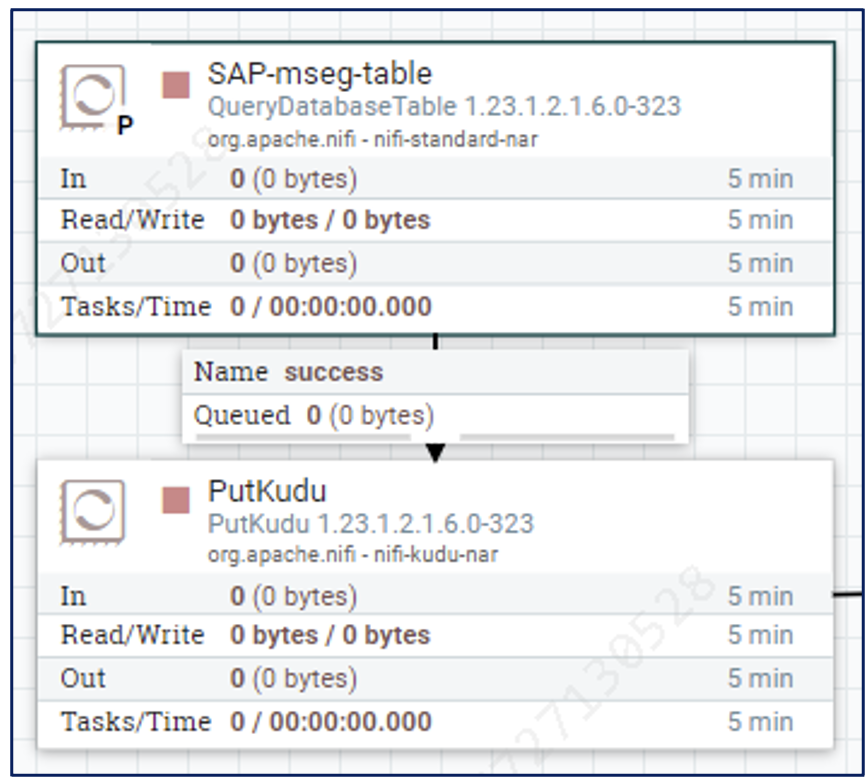

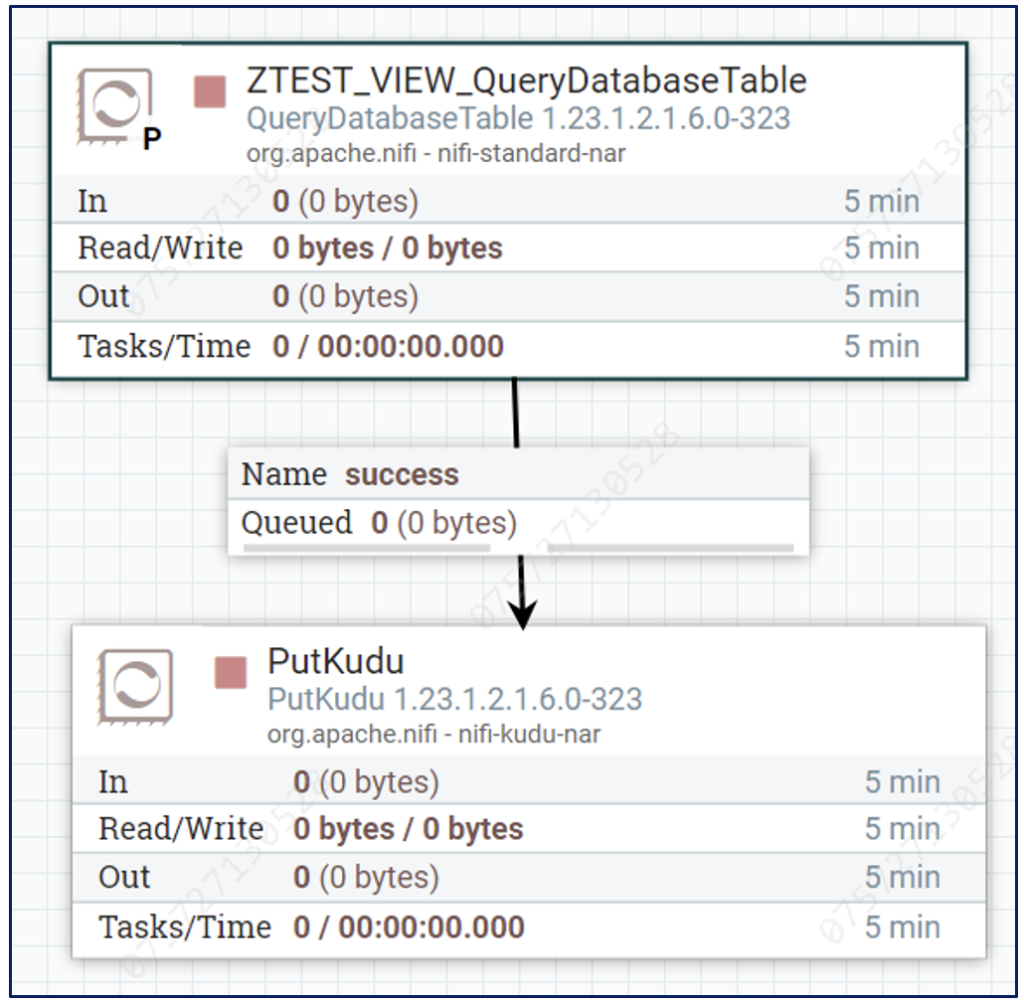

Once the data has been fetched by NiFi, we can choose our preferred storage. In our case, we decided to store the data in Kudu, so we used the PutKudu processor. At this stage, the process works like any other NiFi pipeline: the fact that the original source was SAP has no impact on the downstream processors. The final flow looks like this:

Figure 4: Sample flow to ingest SAP Tables data

SAP Views

An SAP View works like a view in any other database. Using the CDATA driver, we can ingest it as if it were a table, so we use the same QueryDatabaseTable processor. Below, you’ll see that we simply specified the View name in the Table Name field. We can also use the Custom Query property for more specific queries on the View:

Figure 5: How to use QueryDatabaseTable to fetch an SAP View

Note 4: To fetch Views, you might need to add the Views parameter to the Connection Pool’s connection URL, as explained earlier. Add it if you get an “Object not found” error when trying to fetch the View.

As with SAP Tables, once the data has been fetched by NiFi, we can treat it like any other NiFi FlowFile. If we were to ingest it into Kudu, the final pipeline would look like this:

Figure 6: Sample flow to ingest SAP Views data

Remote Function Calls

RFC is a protocol used for communication between SAP systems, or between SAP and external systems, that allows function modules to be executed remotely. Typically, you call a function module with certain input parameters, and it returns a set of records based on those parameters.

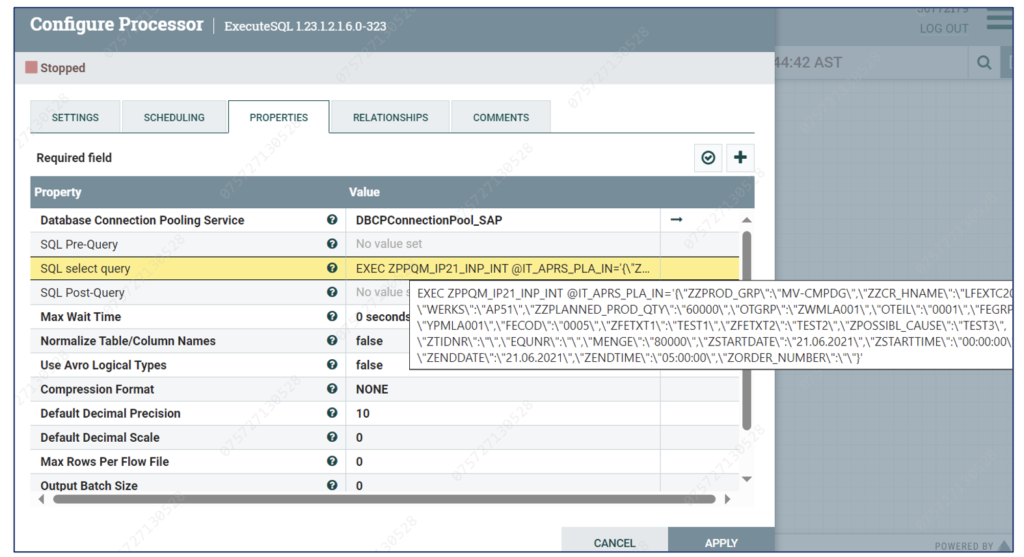

In NiFi, we can use the ExecuteSQL processor to call an SAP RFC function.

Assuming that ZPPQM_IP21_INP_INT is an RFC function that takes IT_APRS_PLA_IN (which contains a set of key-value pairs) as an input parameter, we can use the settings below in the ExecuteSQL processor to call it:

Figure 7: Using ExecuteSQL to call an RFC

Notice how we again use the SAP Connection Pool we created earlier. However, we need to use a special syntax in the SQL select query field to call our function:

| Property | Value |
|---|---|
| SQL select query | EXEC ZPPQM_IP21_INP_INT @IT_APRS_PLA_IN= |

Below we can see the redacted output that this call generates:

    {
      "MANDT": " ",
      "ZZPROD_GRP": " ",
      "ZZCR_HNAME": " ",
      "WERKS": " ",
      "ZZSTART_DATE": ,
      "ZZEND_DATE": ,
      "QMNUM": " ",
      "FENUM": ,
      "ZZPLANNED_PROD_QTY": ,
      "ZITEM_VARIANCE": ,
      "ZZACTUAL_PROD_QTY": ,
      "ZZLOST_PROD_QTY": ,
      "ZVARPER": ,
      "ZGAP_QUANTITY": ,
      "OTGRP": " ",
      "OTEIL": " ",
      "FEGRP": " ",
      "FECOD": " ",
      "ZFETXT1": " ",
      "ZFETXT2": " ",
      "ZPOSSIBL_CAUSE": " ",
      "ZTIDNR": " ",
      "EQUNR": " ",
      "MENGE": ,
      "ZLOSSPER": ,
      "ZSTARTDATE": " ",
      "ZSTARTTIME": " ",
      "ZENDDATE": " ",
      "ZENDTIME": " ",
      "ZORDER_NUMBER": " ",
      "CALL_FROM": " ",

Figure 8: RFC Sample output in NiFi

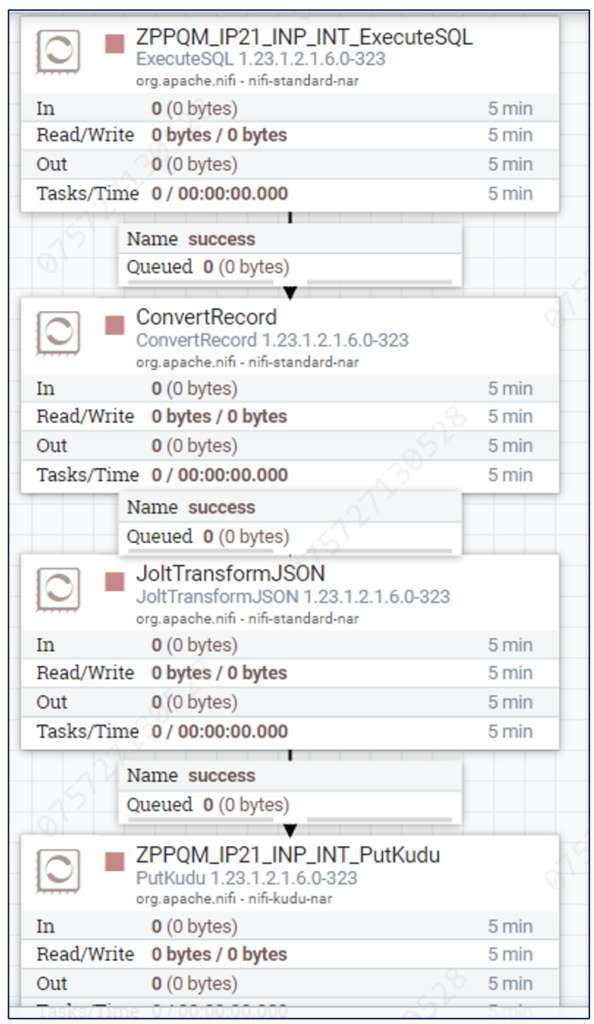

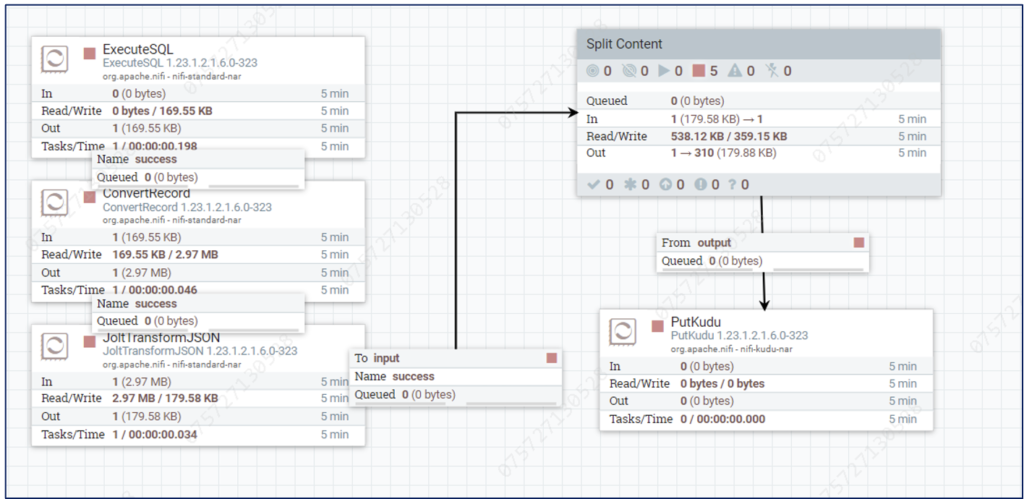

As we can see, for NiFi this is now a standard JSON FlowFile. As before, we can transform it or store it elsewhere. The flow below shows how we convert and transform it before storing it in Kudu:

Figure 9: Sample flow to ingest an RFC output
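The transformations depend on the target table, so the following is only an illustrative Python sketch of a typical clean-up step before writing to Kudu, not the exact logic of our flow. It assumes, hypothetically, that string values may arrive padded with blanks (as the " " values in the sample output above suggest) and that the Kudu table uses lowercase column names. It trims strings, turns blank strings into nulls and lowercases the field names. Inside NiFi, this kind of logic would typically live in processors such as UpdateRecord or JoltTransformJSON.

```python
from typing import Any, Dict, List


def clean_records(records: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Trim blank-padded strings, map blank strings to None and lowercase keys."""
    cleaned = []
    for record in records:
        row = {}
        for key, value in record.items():
            if isinstance(value, str):
                value = value.strip() or None  # "   " -> None, "400 " -> "400"
            row[key.lower()] = value  # hypothetical: Kudu columns in lowercase
        cleaned.append(row)
    return cleaned
```

Non-string values such as quantities pass through unchanged, so numeric columns keep their types.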

BAPI Function

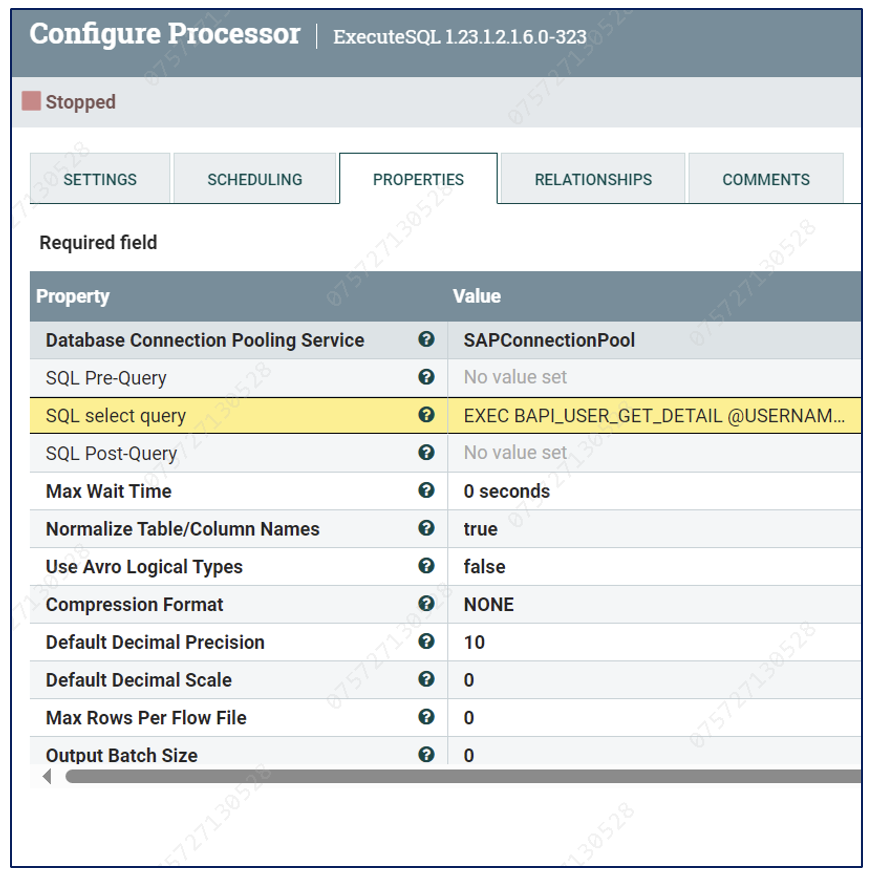

A BAPI provides a standardised way to access business processes and functions within SAP, enabling external applications to interact with SAP systems. From a NiFi standpoint, calling a BAPI is very similar to calling an RFC, so we again use the ExecuteSQL processor.

This screenshot shows the processor settings to call a BAPI function called BAPI_USER_GET_DETAIL with two parameters – USERNAME and CACHE_RESULTS:

Figure 10: Using ExecuteSQL to call a BAPI function

Here’s the full SQL Select Query statement:

| Property | Value |
|---|---|
| SQL select query | EXEC BAPI_USER_GET_DETAIL @USERNAME='DEV_58435',@CACHE_RESULTS='X' |
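When EXEC statements are generated outside NiFi (for example, by a script that prepares flow parameters), a small helper avoids quoting mistakes. The Python sketch below is hypothetical: it rebuilds the statement above from a function name and a dictionary of parameters, and doubles any single quote inside a value, which is the standard SQL way to escape it. Whether the CDATA driver’s parser accepts doubled quotes in EXEC parameters is an assumption worth verifying against your driver version.

```python
import re
from typing import Dict

_NAME = re.compile(r"[A-Za-z0-9_/]+")  # SAP names: letters, digits, '_' and '/' namespaces


def quote_literal(value: str) -> str:
    """Wrap a value in single quotes, doubling any embedded single quote."""
    return "'" + value.replace("'", "''") + "'"


def build_exec(function_name: str, params: Dict[str, str]) -> str:
    """Build an EXEC statement in the @NAME='value' form used in this post."""
    for name in [function_name, *params]:
        if not _NAME.fullmatch(name):
            raise ValueError(f"unexpected characters in name: {name!r}")
    args = ",".join(f"@{name}={quote_literal(value)}" for name, value in params.items())
    return f"EXEC {function_name} {args}".rstrip()  # no trailing space when params is empty
```

Validating the function and parameter names separately from the values keeps identifiers out of the quoting logic, since only values are ever wrapped in quotes.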

The following is a redacted BAPI output:

    [{
      "PERS_NO": " ",
      "ADDR_NO": " ",
      "FIRSTNAME": " ",
      "LASTNAME": " ",
      "FULLNAME": " ",
      "TITLE": " ",
      "NAME": " ",
      "CITY": " ",
      "DISTRICT": " ",
      "COUNTRY": " ",
      "COUNTRYISO": " ",
      "LANGUAGE": " ",
      "LANGUAGE_ISO": " ",
      "REGION": " ",
      "ADDRESS-SORT1": " ",
      "ADDRESS-SORT2": " ",
      "TIME_ZONE": " ",
      "ADDRESS-E_MAIL": " ",
      "ANAME": " ",
      "ERDAT": " ",
      "TRDAT": " ",
      "USERALIAS": " ",
      "COMPANY": " ",
      "UUID": " ",
      "WRNG_LOGON": " ",
      "LOCAL_LOCK": " ",
      "GLOB_LOCK": " ",
      "NO_USER_PW": " ",
      "MODDATE": " ",
      "MODTIME": " ",
      "MODIFIER": " ",

Figure 11: BAPI sample output in NiFi

Again, for NiFi this is now a simple JSON FlowFile, which can be used in any downstream flow, like the one depicted below:

Figure 12: Sample flow to ingest a BAPI function output

Class Method

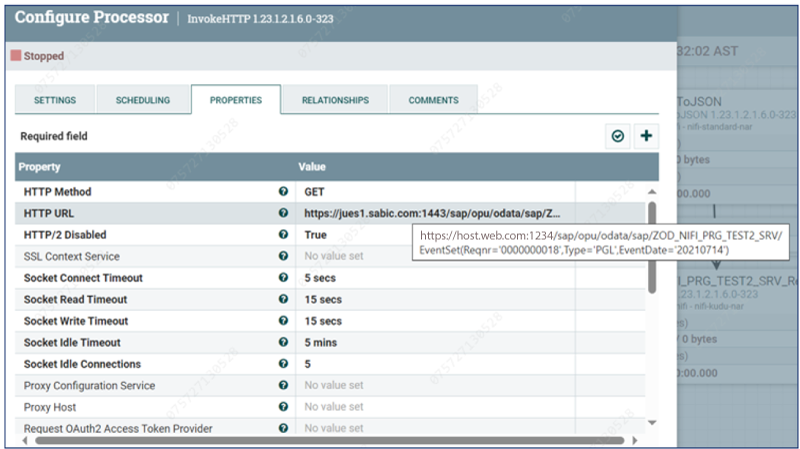

The CDATA driver offers no mechanism to call SAP Class Methods. A possible workaround, suggested by CDATA, is to call the Class Method through an OData endpoint. This endpoint needs to be available already or, if necessary, created ad hoc.

Assuming the endpoint is available, we can then use the InvokeHTTP processor to call it, and it will return the response produced by the method.

Below is the InvokeHTTP configuration we used:

Figure 13: Using InvokeHTTP to invoke a Class Method through an OData endpoint
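For reference, OData V2 function imports exposed through SAP Gateway are usually called with a URL made of the service root, the function import name, and the parameters as query options, with string values written as quoted literals. The Python sketch below builds such a URL for InvokeHTTP. The base URL, the service name (ZCLASS_METHOD_SRV) and the function import (RunMethod) are hypothetical; the /sap/opu/odata/sap/ path is the common default for Gateway services but may differ in your system, and whether the function import expects GET or POST depends on how it was defined.

```python
from typing import Dict
from urllib.parse import quote


def build_function_import_url(base_url: str, service: str, function: str,
                              params: Dict[str, str]) -> str:
    """Build a GET URL for an OData V2 function import (hypothetical names)."""
    options = []
    for name, value in params.items():
        literal = "'" + value.replace("'", "''") + "'"  # OData V2 string literal
        options.append(name + "=" + quote(literal, safe="'"))  # percent-encode the rest
    options.append("$format=json")  # ask the service for JSON instead of XML
    root = base_url.rstrip("/")
    return f"{root}/sap/opu/odata/sap/{service}/{function}?" + "&".join(options)
```

Requesting JSON means the response reaches NiFi in the same format as the RFC and BAPI outputs, so the same downstream processors can handle it.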

Write Back to SAP

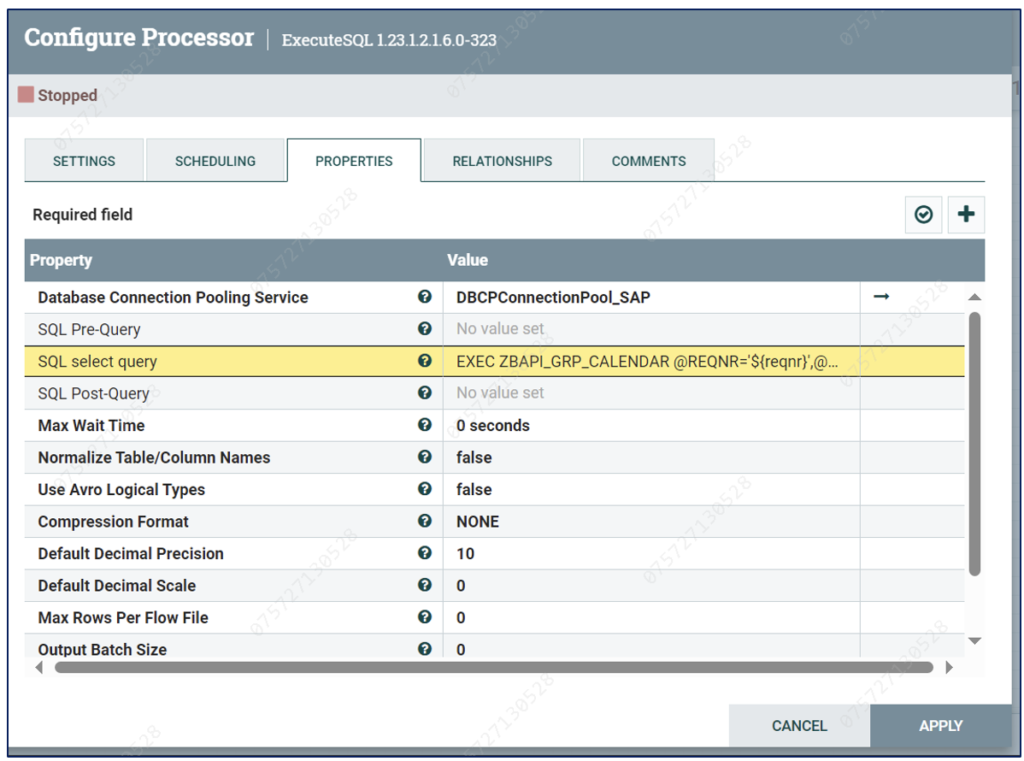

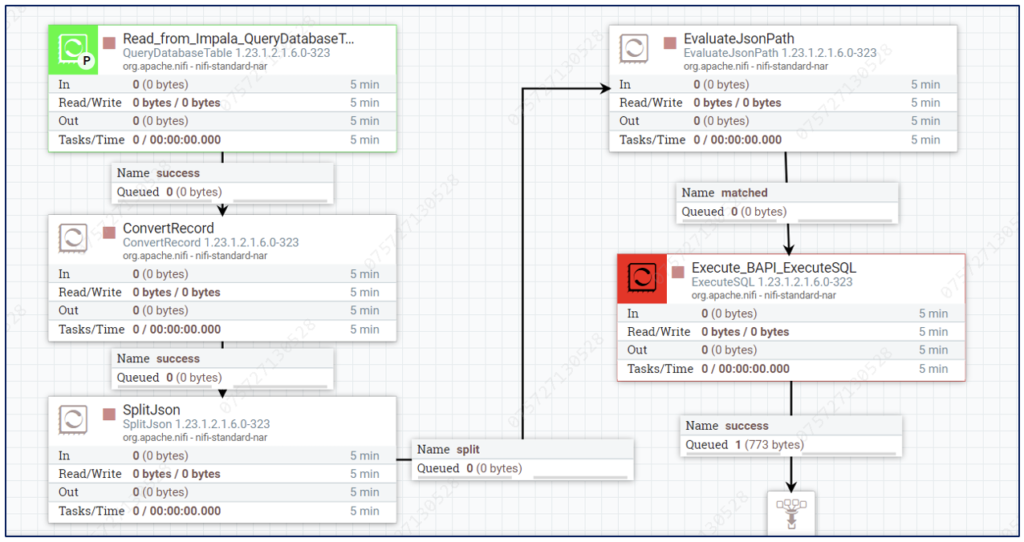

Lastly, let’s see how we can also leverage NiFi to write data back to SAP.

SAP strongly discourages modifying SAP tables directly; instead, table data should be changed through function modules such as BAPIs. We can therefore use the same ExecuteSQL processor described earlier, with two main considerations:

- A BAPI function that handles the write to SAP must exist. From NiFi’s standpoint, we call the BAPI just as we did before: the fact that this BAPI writes data instead of reading it is handled entirely by the function itself and is transparent to NiFi.

- The input parameters must be stated explicitly in our EXEC call, so each FlowFile must contain a single record, with attributes holding the values of its fields. This can easily be done by combining the SplitJson and EvaluateJsonPath processors.
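To illustrate what the SplitJson and EvaluateJsonPath combination produces, here is a small Python simulation: the JSON array is split into one record per FlowFile, and selected fields are copied into attributes, as EvaluateJsonPath does with its destination set to flowfile-attribute. It is a sketch rather than a faithful model of NiFi (for instance, it only handles top-level keys instead of full JsonPath expressions); the REQNR and EVENT_TYPE field names come from the query below, while the sample values in the test are hypothetical.

```python
import json
from typing import Dict, List


def split_to_attributes(content: str, fields: Dict[str, str]) -> List[Dict[str, str]]:
    """Simulate SplitJson + EvaluateJsonPath (destination: flowfile-attribute).

    `fields` maps each attribute name to a top-level JSON key, standing in for
    a JsonPath expression such as $.REQNR.
    """
    records = json.loads(content)  # SplitJson on the top-level array: one FlowFile per element
    # EvaluateJsonPath: copy each selected field into a string attribute
    return [{attr: str(record[key]) for attr, key in fields.items()} for record in records]
```

Each resulting dictionary corresponds to the attribute set of one FlowFile, which is what the ExecuteSQL query then references with ${...}.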

Assuming both these points are true, we can then write back to SAP as shown below:

Figure 14: Using ExecuteSQL to write data back to SAP

Here’s the full query we used. Notice how we have a corresponding attribute in the incoming FlowFile for each record field:

| Property | Value |
|---|---|
| SQL select query | EXEC ZBAPI_GRP_CALENDAR @REQNR='${reqnr}',@EVENT_TYPE='${event_type}', |
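NiFi’s Expression Language replaces ${reqnr} and ${event_type} with the attribute values verbatim, so a value containing a single quote would break the statement. The Python sketch below mimics the substitution for the simple ${attribute} form only (it is not NiFi’s Expression Language engine) and doubles single quotes in the values; inside NiFi, a similar effect can be obtained with the replace function, e.g. ${reqnr:replace("'", "''")}. The remaining parameters of the original query are omitted here, and the attribute values in the test are hypothetical.

```python
import re
from typing import Dict

_PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_.]*)\}")  # matches ${attribute}


def render_exec(template: str, attributes: Dict[str, str]) -> str:
    """Substitute ${name} placeholders with quote-escaped attribute values."""
    def substitute(match) -> str:
        name = match.group(1)
        if name not in attributes:
            raise KeyError(f"missing FlowFile attribute: {name}")
        return attributes[name].replace("'", "''")  # standard SQL quote escaping
    return _PLACEHOLDER.sub(substitute, template)
```

Unlike NiFi, which evaluates a missing attribute to an empty string, the sketch raises an error, so an incomplete record fails early instead of sending an empty value to SAP.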

Assuming our objective is to write back to SAP the data that we read from Impala (or Kudu), the resulting flow would look something like this:

Figure 15: Sample flow to fetch data from Impala and write it back to SAP

Conclusion

In this blog post, we’ve looked at how NiFi’s capabilities enable smooth integration with SAP entities such as Tables, Views, RFCs and BAPIs, even when this integration is not available out of the box. To achieve this, we used a third-party connector from CDATA.

Alternatively, we can also use REST APIs (for example, if the client is not allowed to use third-party connectors). However, SAP ABAP developers must first build a REST service for each entity. If the endpoints exist, we can call them with the InvokeHTTP processor, just as we did for the Class Method.

It is also worth noting that, while we mostly used Kudu or Impala as our target or source, once data has been fetched from SAP (or when we need to write data back to SAP), we can use any available system. For example, in the context of CDP, we could use HDFS files, HBase tables, or even Kafka topics! Relational databases such as Oracle, and many other systems, are also options, thanks to the wide range of processors that NiFi offers.

We hope you now have a better understanding of how to incorporate SAP entities into CDP, and of how NiFi can address a wide range of data movement needs.

Please get in touch with us if you have any questions or doubts about the technologies and implementation techniques we’ve presented today, or if you need assistance connecting ERP entities with your NiFi flows or with CDP. Our skilled and experienced consultants will get back to you right away!