01 Feb 2024 How to Choose Your Data Platform – Part 2

This blog post is the follow-up to the first part of this mini-series, where we discussed the evolution of data platform architectures, and also introduced our base assessment to help select the best technological options for an organisation aiming to build a data platform nowadays. Our base assessment additionally identified twenty aspects that a data platform must cover. We recommend that any organisation interested in taking this further reaches out to us so that we can work on a tailored assessment considering their unique requirements.

First of all though, in this second article, we’ll present some of our favourite tech vendors for consideration when choosing your cloud data platform.

We are only looking at cloud technologies, i.e. vendors whose offerings run on public clouds. Bear in mind that the options listed can’t really be compared directly with one another. Some (Azure, AWS, GCP, and Oracle Cloud) are hyperscalers, i.e. public cloud providers: they have data-platform-related offerings but also provide many other services, which makes it easier for them to cover more of the aspects that we identified. The others, at the bottom of the image above (Cloudera, Snowflake, and Databricks), are specialised data platform tech vendors that are very good at certain aspects but limited in others. Of course, there are numerous other technologies that satisfy some but not all of our requirements; we chose these seven because of their vision, their market share, and our own experience of them.

In the following sections we will briefly introduce the various options, see what they understand by the term data fabric, and highlight what we liked the most about each of them. For more details, please check out the vendor documentation or, even better, contact us for an accurate, personalised assessment.

Azure

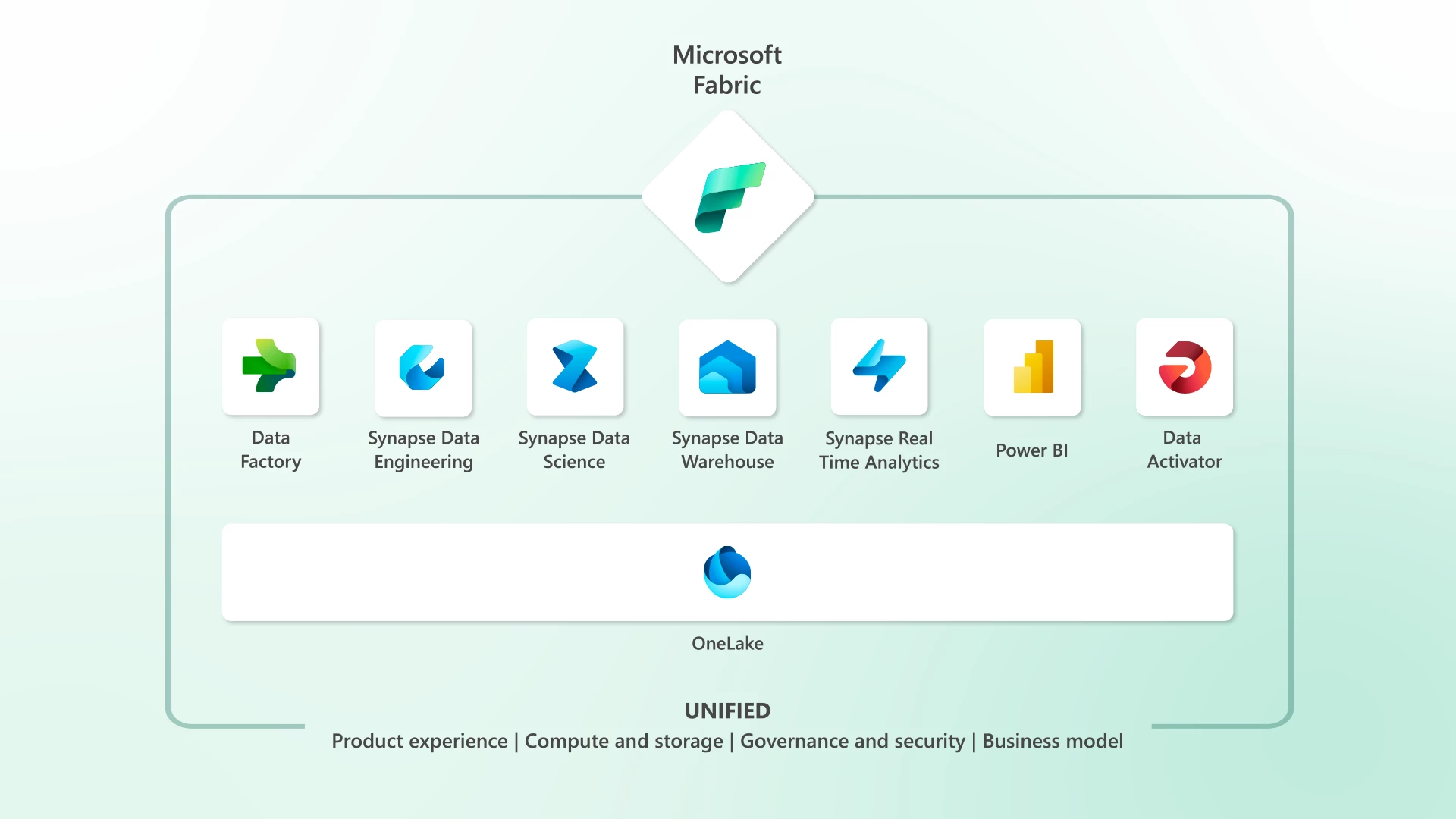

Microsoft Fabric is the new flagship service to build your data platform easily on Azure. It is an all-in-one SaaS (Software as a Service) that provides compute resources for all its components: data ingestion, data orchestration, data engineering, data science, data warehouse, real-time analytics, and data visualisation. All the components are integrated in the same interface, guaranteeing seamless switching from one to another. This interface is also used in Power BI, making Microsoft Fabric easily accessible in Power BI-enabled environments.

As its name indicates, Microsoft is using the term “fabric” for its new flagship product in data analytics, and is one of the tech vendors that has embraced the term most seriously.

In addition to Microsoft’s efforts to streamline its data analytics solutions, the feature we find most impressive is OneLake, which allows all users to access the same data within a single lake, eliminating the need for data movement when transitioning between different experiences.

Furthermore, thanks to Shortcuts, we can create a virtual lake in Fabric, facilitating data unification across various business domains. OneSecurity will also integrate with OneLake, playing a crucial role in data security. Finally, the introduction of Direct Lake mode in Power BI marks a significant advance, enabling a semantic model to serve data directly from OneLake, bypassing the need for querying, importing, or duplicating data. This innovation is a game-changer for rapid and efficient reporting on massive amounts of big data.

AWS

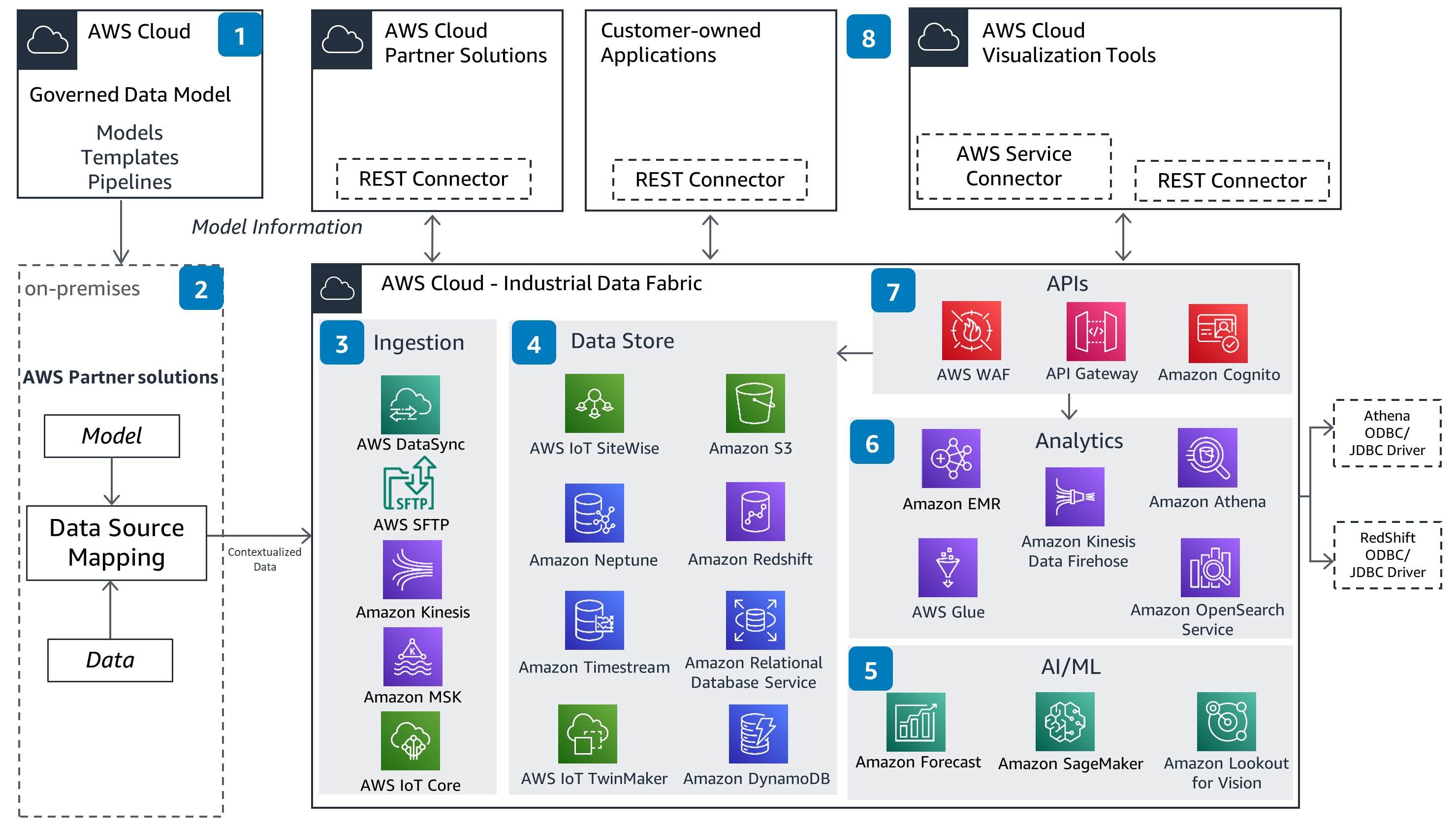

AWS was a trailblazer in the commercialisation of cloud services. It has grown from basic storage and compute services to providing services for data-platform-related areas such as databases, data integration, data lakes and warehousing, AI, IoT, real-time, etc.

While AWS is not yet formally using the term data fabric in its nomenclature, we can find the term in the AWS Solutions Library as seen above: by combining core AWS services, we can design a data fabric.

What we like the most about AWS is that it offers an extensive array of highly configurable and customisable building blocks which provide a wide range of functionalities to address almost all aspects of a data platform.

GCP

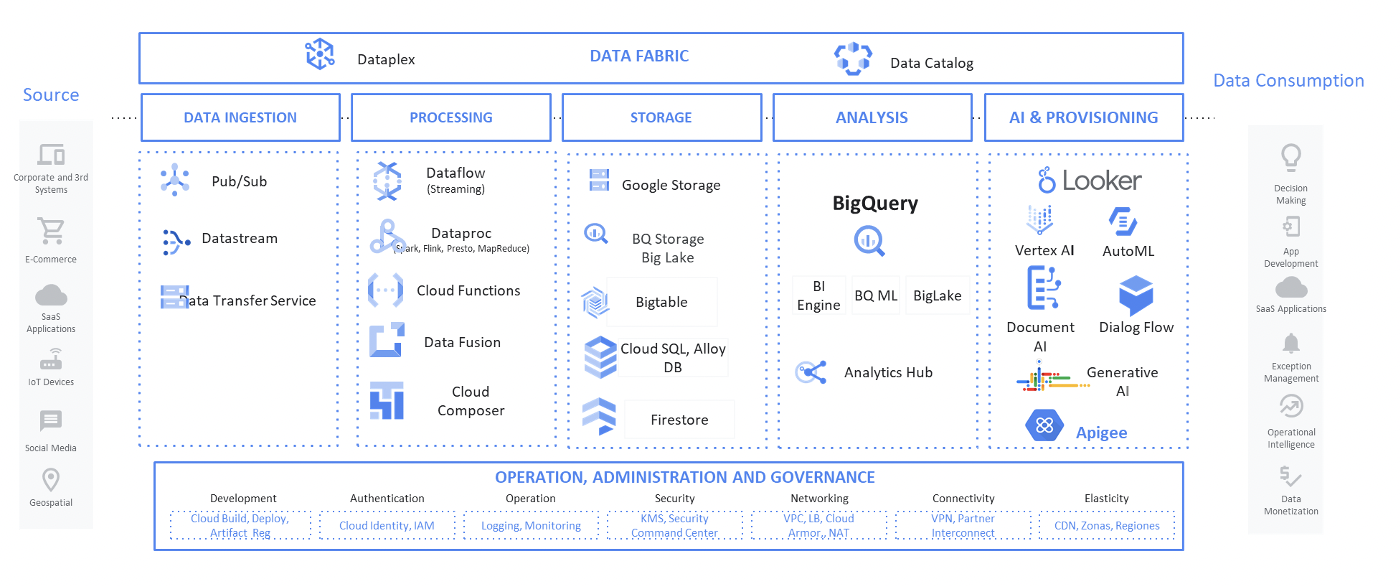

Google Cloud Platform (GCP) made its name as an important data platform contender with BigQuery, its pioneering cloud data warehousing service. However, it has evolved greatly since then and now boasts a set of services to cover all aspects of a data platform, as you can see above.

GCP applies the term data fabric to its Dataplex service, which focuses on centralised data governance and security and is designed to regulate the use of other data-related services.

GCP has impressed us with its ease of building data platforms, enabling a swift, modest start with the potential to scale up significantly, maintaining both performance and ease of operation. BigQuery continues to be GCP’s cornerstone for data analytics, now complemented by a suite of user-friendly services that address various aspects of a data platform.

Oracle Cloud

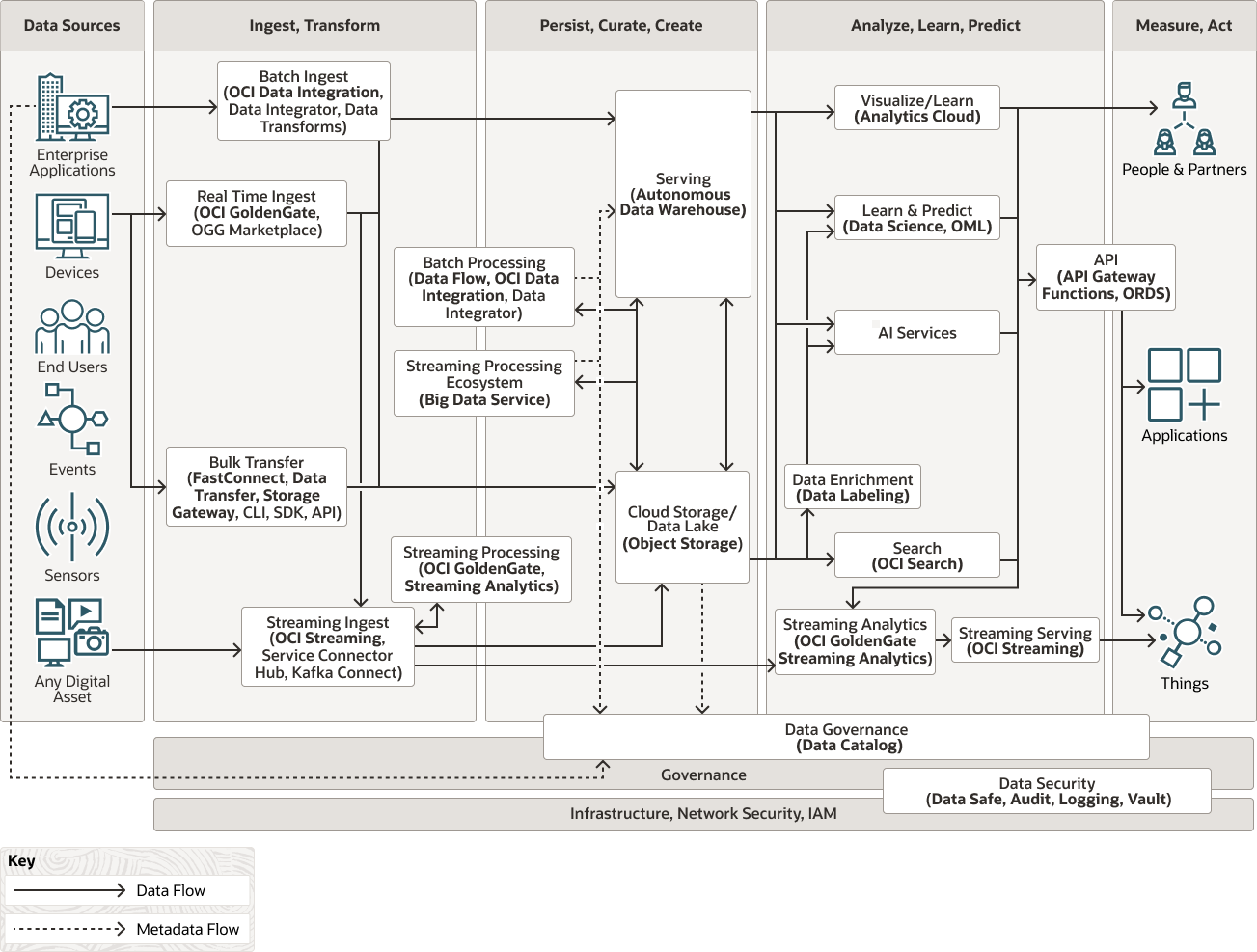

Source: https://docs.oracle.com/es/solutions/data-platform-lakehouse

Oracle Cloud is a cloud computing provider offering not only virtual servers, storage and networking, but also a set of data-platform-related services allowing you to create a robust architecture like the one in the image above, covering aspects from data ingestion (especially good when dealing with sources that are Oracle applications) to AI, including data lakes, databases and data warehouses, data processing, data visualisation, semantic modelling, streaming, data governance, and security.

Although Oracle still uses the term data lakehouse to refer to this robust architecture, it has a broader scope than what other vendors mean by a data lakehouse, and effectively the Oracle option is more like a data fabric.

What we value most about Oracle is its ability to evolve in the data platform space: building on solid foundations like semantic modelling and data warehousing, Oracle now offers nearly everything needed to construct a modern enterprise data platform.

Cloudera

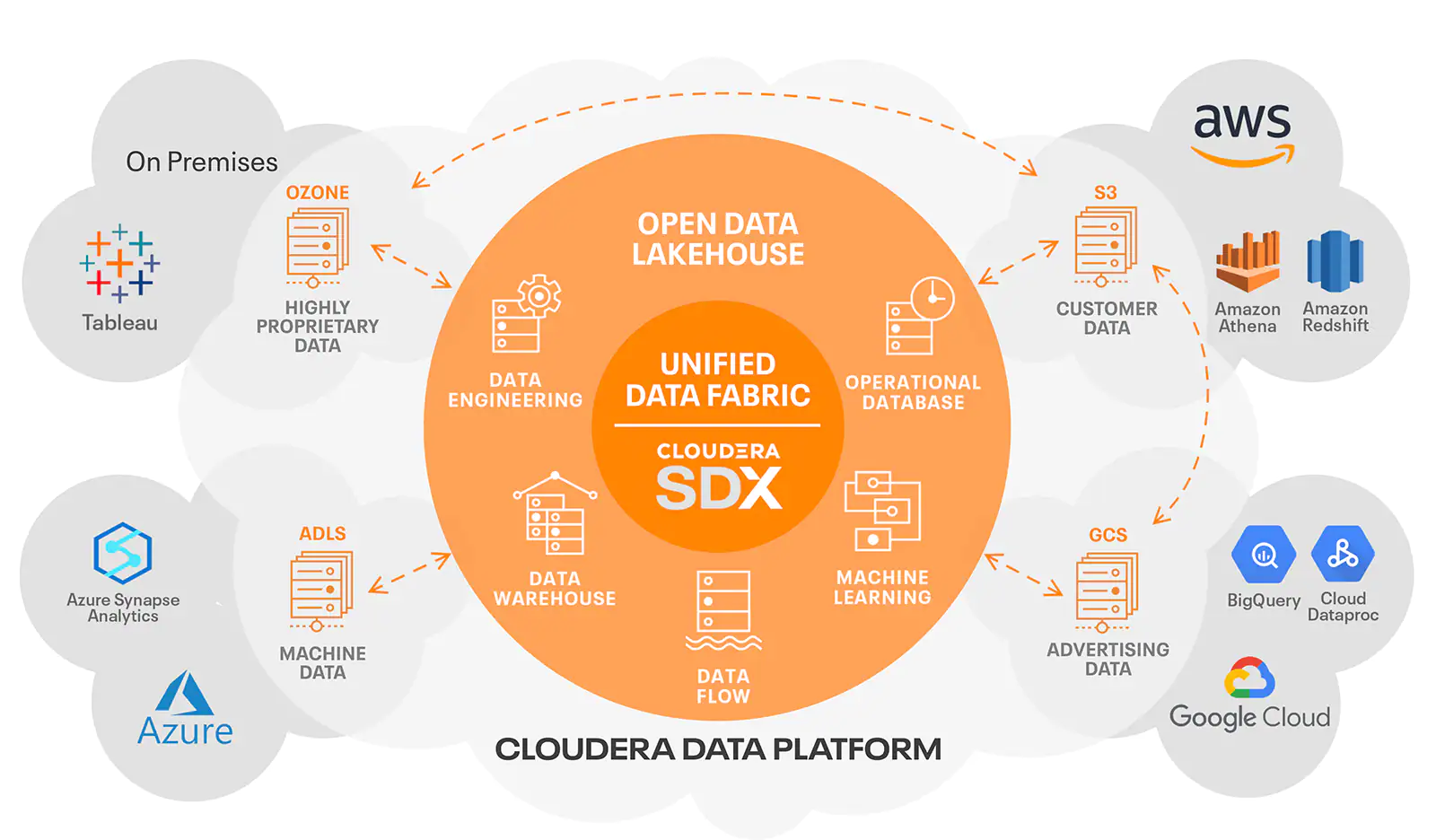

Source: https://www.cloudera.com/products/unified-data-fabric.html

Cloudera has established itself as the standard for big data in on-prem architectures, whilst also developing its robust offerings for public clouds (Azure, AWS, and GCP) in recent years. Consequently, Cloudera now provides diverse solutions including private cloud, public cloud, hybrid, and multi-cloud options, all evolving to become increasingly user-friendly. It’s also one of the vendors to have enthusiastically embraced the term data fabric, as we can see in the image above.

Cloudera’s appeal lies in its versatility, enabling functionality across various platforms with predominantly open-source tools, ensuring a rapid adoption of new technologies and a seamless integration with tools from other vendors.

Snowflake

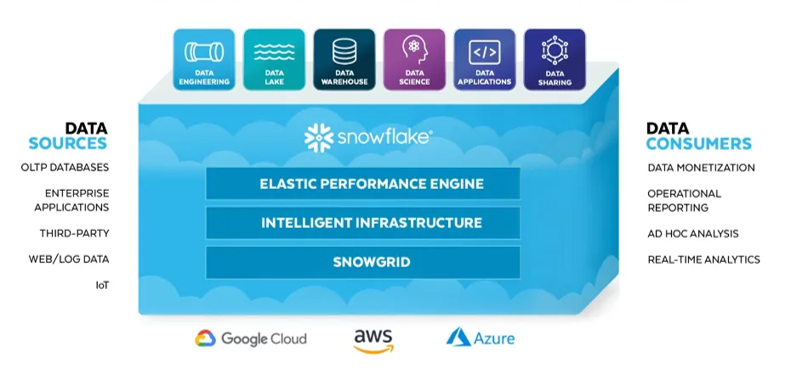

Snowflake, the youngest of the tech vendors mentioned here, benefits from not being constrained by traditional data technologies, positioning it as arguably the best option when it comes to fully leveraging cloud benefits and modern architectures. Its Elastic Performance Engine enables Snowflake to scale both storage and compute power easily and transparently, whilst the Intelligent Infrastructure ensures maximum use of Snowflake with minimal administrative effort. What’s more, with SnowGrid, Snowflake can operate across various cloud providers and regions in parallel.

Because Snowflake essentially serves as a cloud data lake and data warehouse, it must be integrated with other solutions within the context of a data fabric. For example, we might want to use a data integration or transformation tool such as dbt, Matillion or Fivetran to complement Snowflake for data ingestion, orchestration and data governance, and we might want to use Power BI as the data visualisation layer. It’s important to note that Snowflake is evolving rapidly, and new features are being added, especially in the areas of AI and LLMs.

What we particularly appreciate about Snowflake is its user-friendly design and reliable performance.

Databricks

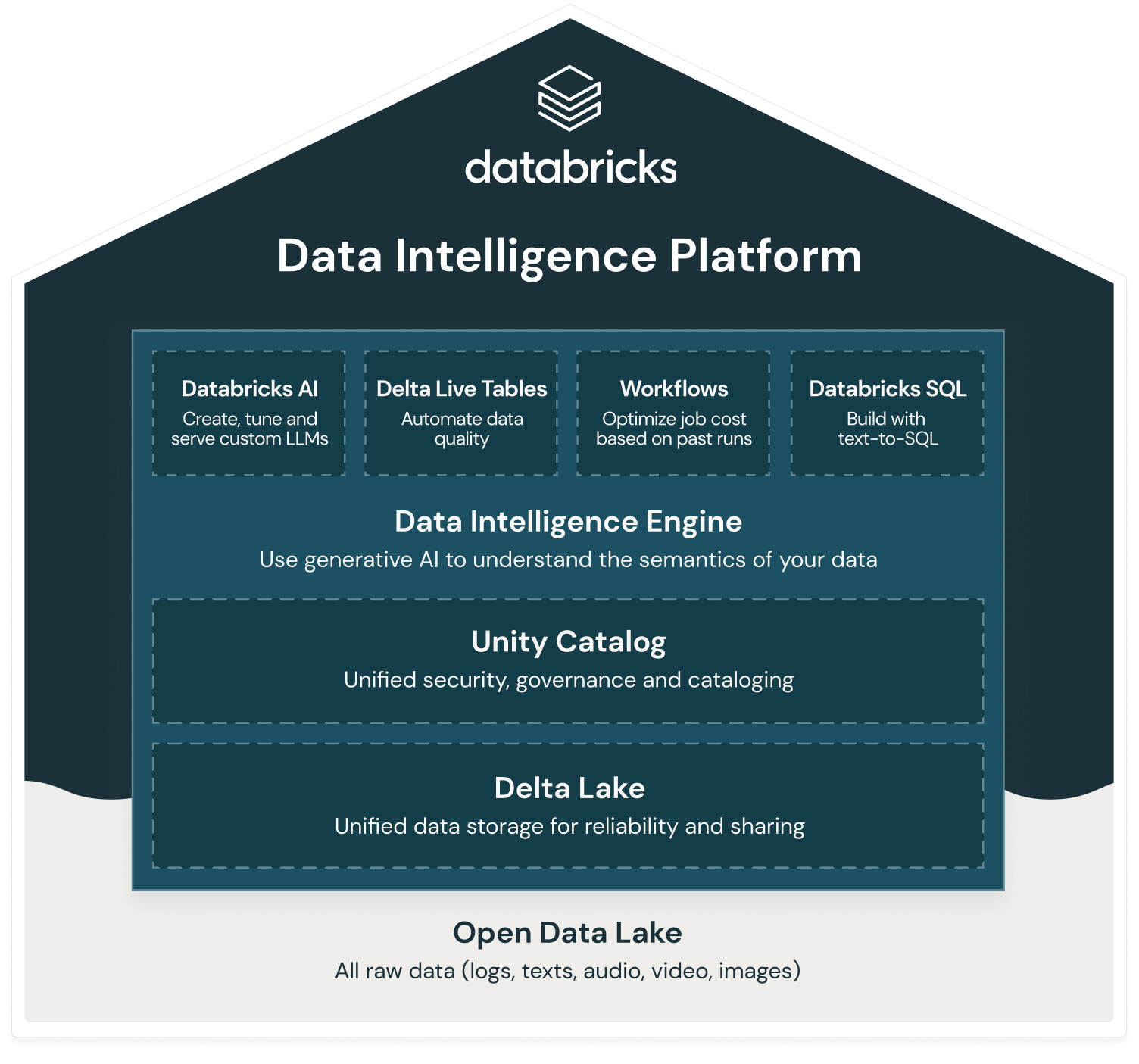

Source: https://www.databricks.com/blog/what-is-a-data-intelligence-platform

Databricks was founded by the creators of Apache Spark and started as a platform for managing Spark clusters and running Jupyter-style notebooks. However, it has since evolved into a comprehensive data platform providing a unified, open analytics environment for building, deploying, sharing, and maintaining enterprise-grade data analytics and AI solutions at scale.

They also created Delta Lake, an optimised storage layer with ACID transactions (also used by Microsoft Fabric). Recently, Databricks developed its own catalogue, Unity Catalog, to unify security, governance and cataloguing across various cloud platforms. The use of AI models to deeply understand the semantics of enterprise data, automatically analysing data and metadata and how they are used (in queries, reports, lineage, etc.), adds even more capabilities. Databricks also boasts powerful tools such as MLflow for MLOps and Delta Live Tables for batch and streaming processing, featuring automated data quality checks and automated workflows that simplify orchestration.

Note that Databricks refers to its offering as a “Data Intelligence Platform”, as seen in the image above. This platform’s scope, whilst comprehensive, represents only a part of what is typically required for a data fabric or an enterprise data platform. Consequently, as with Snowflake, it is advisable to supplement Databricks with other technologies for certain functionalities, like Power BI as the data visualisation layer (even though Databricks has good data exploration capabilities, it does not excel as a BI tool).

What we particularly admire about Databricks is its relentless drive for innovation and the successful transformation of “plain Spark” into a fully-fledged data platform that encompasses much of what we expect in a cloud data platform.

Conclusions

In our first blog post in this mini-series, we discussed the evolution of data platforms from the early data warehouses to the data fabrics or enterprise data platforms that tech vendors and data experts are talking about nowadays. We also defined a set of twenty aspects that a modern data platform must be able to cover from a technical point of view. In this second and final part, we have introduced what we believe are some of the top tech vendors to get you there, focusing on the ones that can do it in the cloud.
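To make the idea of assessing options against a set of aspects a little more concrete, here is a minimal Python sketch of a weighted coverage score. It is an illustration only, not our actual assessment method: the aspect names, weights, option names and scores below are hypothetical placeholders, and a real assessment would use all twenty aspects with weights derived from your own requirements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Aspect:
    name: str
    weight: float  # hypothetical: relative importance for the organisation


def weighted_coverage(aspects, scores):
    """Return the weighted coverage of one option, between 0 and 1.

    `scores` maps an aspect name to a coverage value between 0 and 1.
    An aspect missing from `scores` counts as not covered (0).
    """
    total_weight = sum(a.weight for a in aspects)
    if total_weight <= 0:
        raise ValueError("at least one aspect needs a positive weight")
    covered = sum(a.weight * scores.get(a.name, 0.0) for a in aspects)
    return covered / total_weight


def rank_options(aspects, options):
    """Return (option name, coverage) pairs, best coverage first."""
    results = [
        (name, weighted_coverage(aspects, scores))
        for name, scores in options.items()
    ]
    return sorted(results, key=lambda item: item[1], reverse=True)


# Hypothetical placeholders: three aspects instead of twenty, invented weights.
ASPECTS = [
    Aspect("data ingestion", 3.0),
    Aspect("data governance", 2.0),
    Aspect("data visualisation", 1.0),
]

# Hypothetical options and scores; these are not ratings of any real vendor.
OPTIONS = {
    "Option A": {
        "data ingestion": 1.0,
        "data governance": 0.5,
        "data visualisation": 1.0,
    },
    "Option B": {"data ingestion": 0.5, "data governance": 1.0},
}

if __name__ == "__main__":
    for name, coverage in rank_options(ASPECTS, OPTIONS):
        print(f"{name}: {coverage:.2f}")
```

The point of weighting is that two organisations can look at the same options and reach different rankings, which is why a tailored assessment matters more than any generic comparison.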

We hope that this mini-series has helped you if you are at the point of choosing your next data platform technology stack. Please feel free to reach out to our team of experts if you need further advice, or are interested in our tailored data platform assessment where your unique requirements are analysed in order to give you the best recommendations. We’re just a click away!