22 Jun 2017 DATA QUALITY WITH INFORMATICA – PART 2: DATA STANDARDIZATION

Introduction

In the previous article in this series about Data Quality we explained how we can profile our data using Informatica. We learned that the data in our example contained some columns that needed to be fixed:

| • | Keyword_ID: our data contain records with value ‘–‘ in this field, which represents a null value; in order to standardize this value across all sources we are going to change it to ‘NA’. |

| • | Currency: the currency field is also not consistent as the values are in both upper and lowercase, and some records contain values that do not correspond to a currency code. We are going to fix this by standardizing to uppercase. |

| • | Year: some records contain the year with the word ‘year’, e.g. ‘year 2015’, instead of just the value 2015; the field must be standardized to just the year in numerical format. |

| • | Quarter: the quarter field is also wrong, as some records contain the date or the year, and this field should only contain the quarters and the year number. |

In this article, we are going to continue with this example and create a set of mapplets in Informatica Developer to fix these data issues.

1. Creating the standardization rules

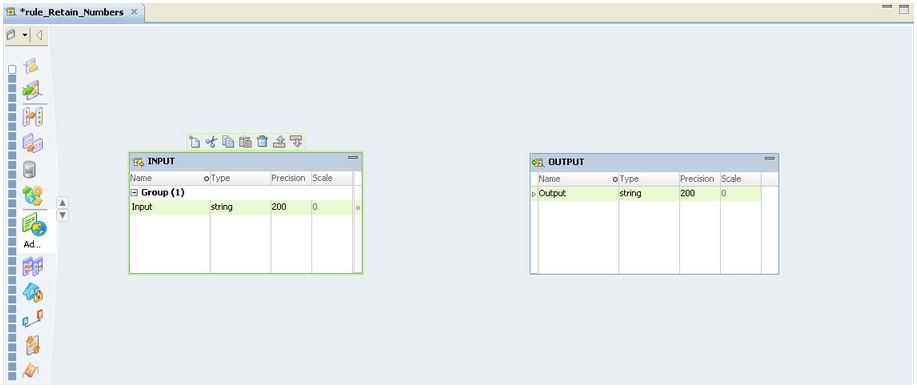

Our first mapplet will retain numerical values only, so it can beapplied to any column where we need to keep only numerical values. In our case, we are going to apply it to the year column, and to do this, we open Informatica Developer, right-click on the project and click on create mapplet; we’ll call it rule_Retain_Numbers. A mapplet normally contains an input and an output transformation, as it receives values and returns results. We are going to define the mapplet logic between these two transformations, so first we add the input and the output transformations, configure the input and output ports in the transformations and set the length to be long enough, for instance 200 characters.

|

Figure 1: Creating a mapplet in Informatica Developer |

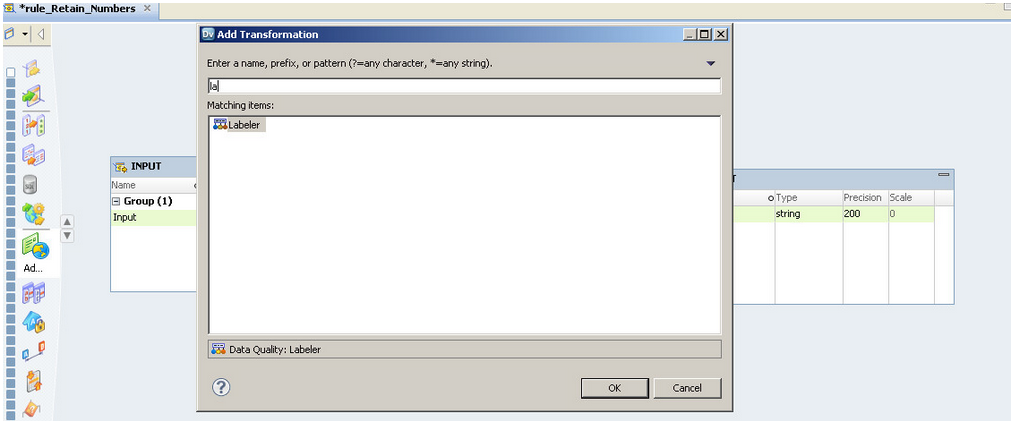

Now we have to define the mapplet logic: first, we are going to use a labeller transformation to mask the letters and spaces; the labeller can be used to set a character by character analysis of data in a field, where each character is masked according to a list of standard and user-defined types: numbers will be masked as ‘9’, spaces as ‘_’ , letters as ‘X’ and symbols as ‘S’. To add the transformation, we right-click inside the mapplet, select Add transformation and then add a labeller:

|

Figure 2: Adding a labeller transformation to the mapplet |

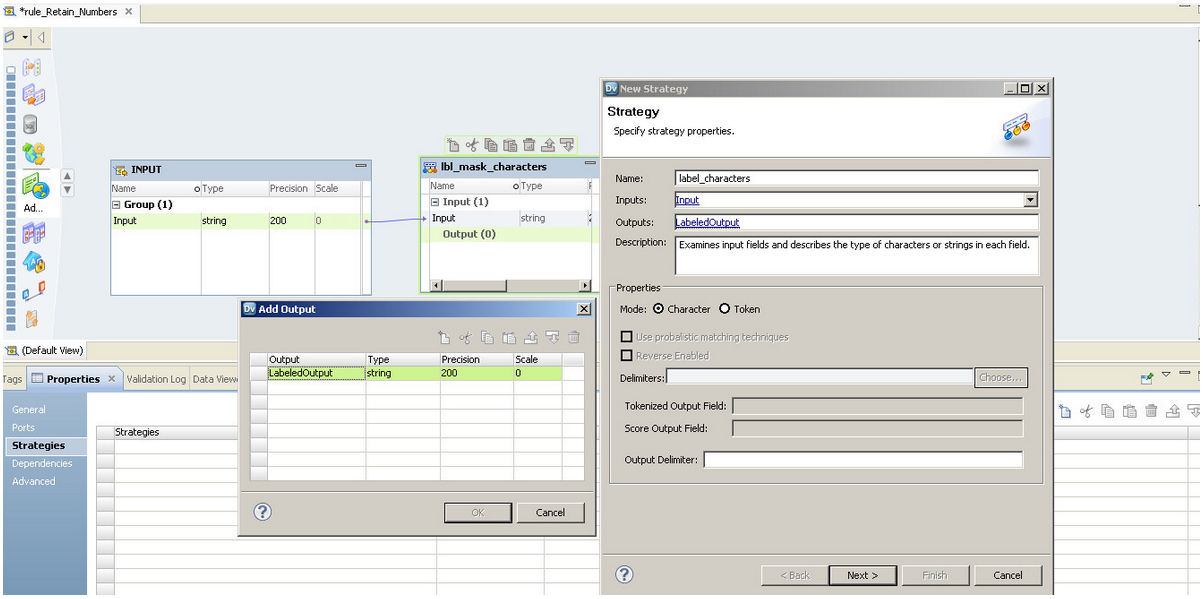

Now we’re going to add a new strategy to the labeller: select character mode, then verify that the output precision is set to 200 as in the input:

|

Figure 3: Basic labeller confirguration |

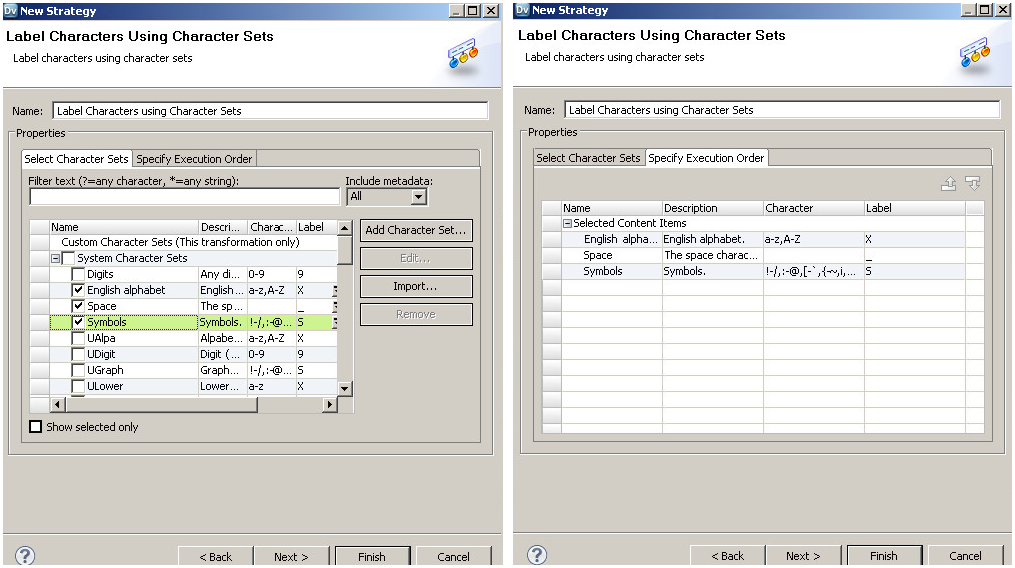

The next step is to add an operation: we’re going to select Label characters using character sets instead of Label characters using reference table. We want to mask all the characters except the numbers, so we choose the English alphabet, spaces and symbols, as in the image below:

|

Figure 4: Configuration of the labeller transformation |

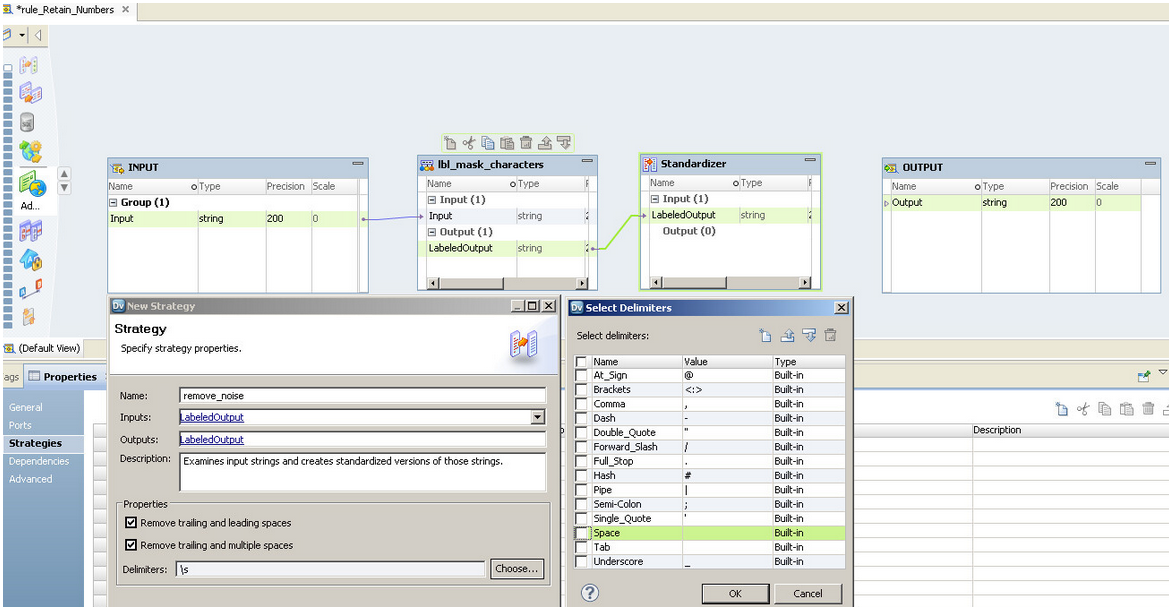

Click on finish and skip the ignore text dialog which appears after clicking on next, as we don’t want to add another operation. With the configuration as it is now, the labeller will output only the numbers and mask the rest of the characters, so we can add a standardizer transformation to remove them. The standardizer transformation can be viewed as a series of operations that are carried out on an input string to look for matching substrings in the input and to place the correct substring in the output. It is used to fix errors such as incorrect formats, and to search for values in the data that can be removed or replaced either with reference table items or specific values. To continue with our example, it’s time to add a standardizer transformation to the mapplet as we did before, which we can name st_remove_noise; drag the output from the labeller to the standardizer input, then create a new strategy (called remove noise). We check the space delimiter after clicking on the choose button, and also remove the trailing spaces by checking both checkboxes in the strategy properties.

|

Figure 5: Configuring the standardized transformation strategy |

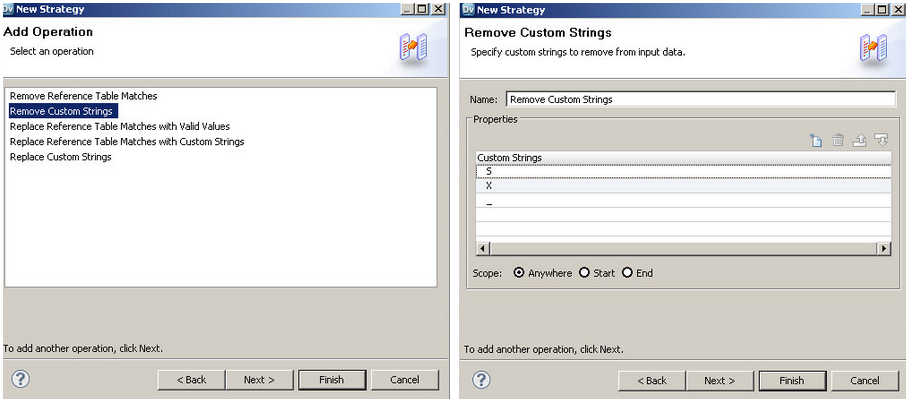

At this point we want to remove the noise labelled with ‘S’, ‘X’ and ‘_’, so we select remove custom strings in the strategy and add these characters to the custom strings in properties.

|

Figure 6: Configuring the standardizer transformation to remove custom strings |

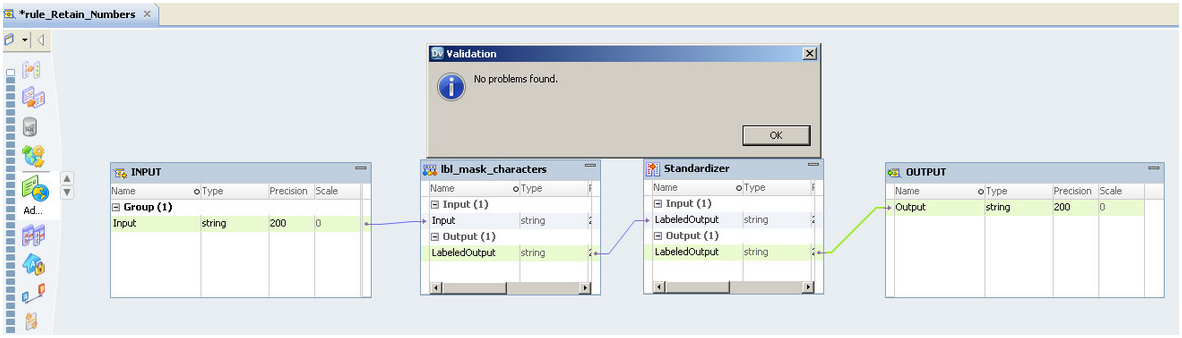

Click on finish and finally drag the output from the standardizer transformation to the port of the output transformation, then validate the mapplet. If we want the mapplet to be available in the Analyst, we have to validate it as a rule.

|

Figure 7: Standardization mapplet |

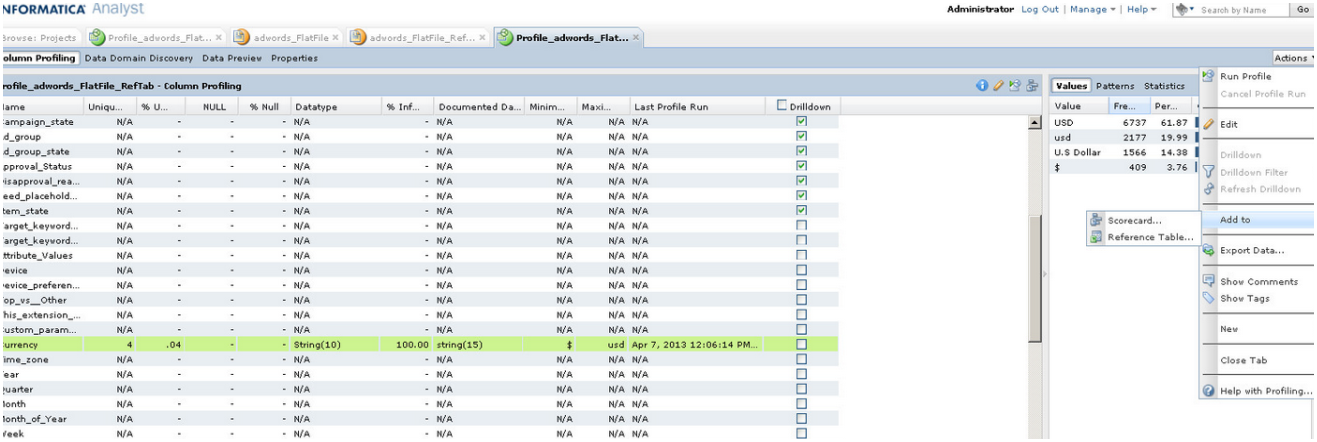

Carrying on with our example, now we’re going to create another mapplet to replace the wrong currency codes we found in the file. We’re going to use a reference table to do this, which can be created using Informatica Analyst or Developer. We will use Analyst for this example. Log into Analyst, open the profile, select the currency column and create a reference table. The first value will be the valid one and the rest of them will be replaced by the correct one. To create the reference table we have to go to the file profile, select the currency column and then, in actions, click on Add to – Reference Table:

|

Figure 8: Creating reference tables in Informatica Analyst |

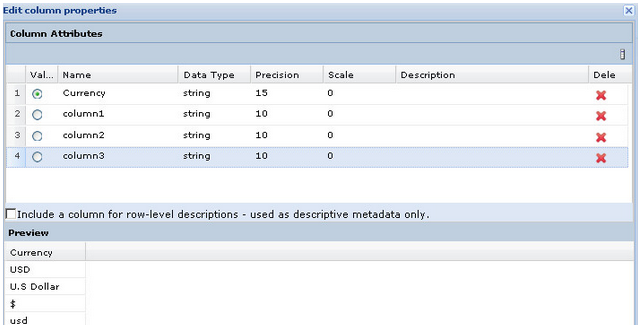

Once the table has been created we add three new columns with the values to be replaced, the first column being the correct one.

|

Figure 9: Reference table properties |

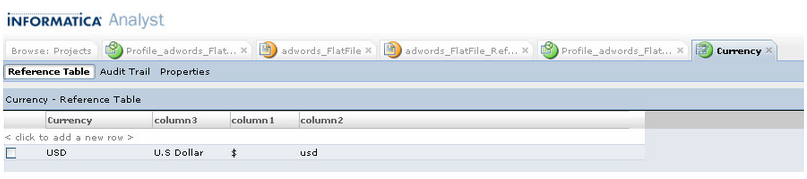

After adding the new columns, we can edit the records and keep just one, as shown in image 10:

|

Figure 10: Final reference table for currency standardization in Analyst |

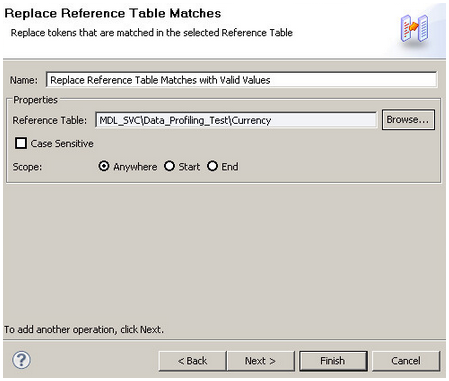

In order to keep each rule in a different mapplet, we need to create a different mapplet for this rule. We could add new ports to the mapplet and increase the complexity of the standardization, but by keeping each rule in a different mapplet, the mapplets remain as simple as possible. For the currency mapplet we proceed as with the first one we created above, but in this case the standardizer transformation will have a different strategy: to replace the values with those present in the currency reference table. To do this, we have to select the reference table replacement for the transformation:

|

Figure 11: Standardizer transformation using a reference table |

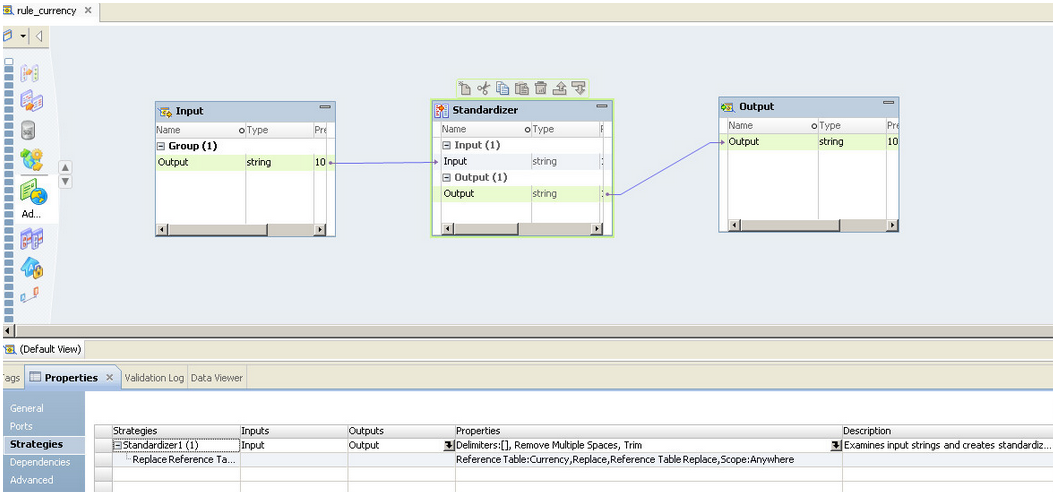

The mapplet will look like this; we validate it and proceed to create a new one:

|

Figure 12: Mapplet for the standardization of the currency field |

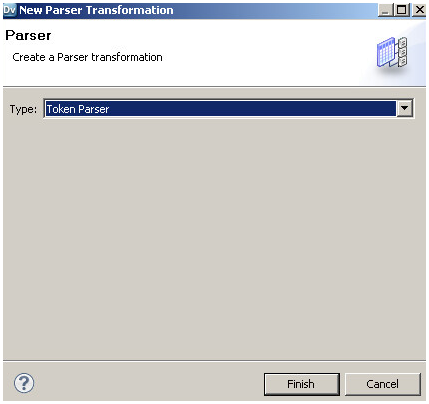

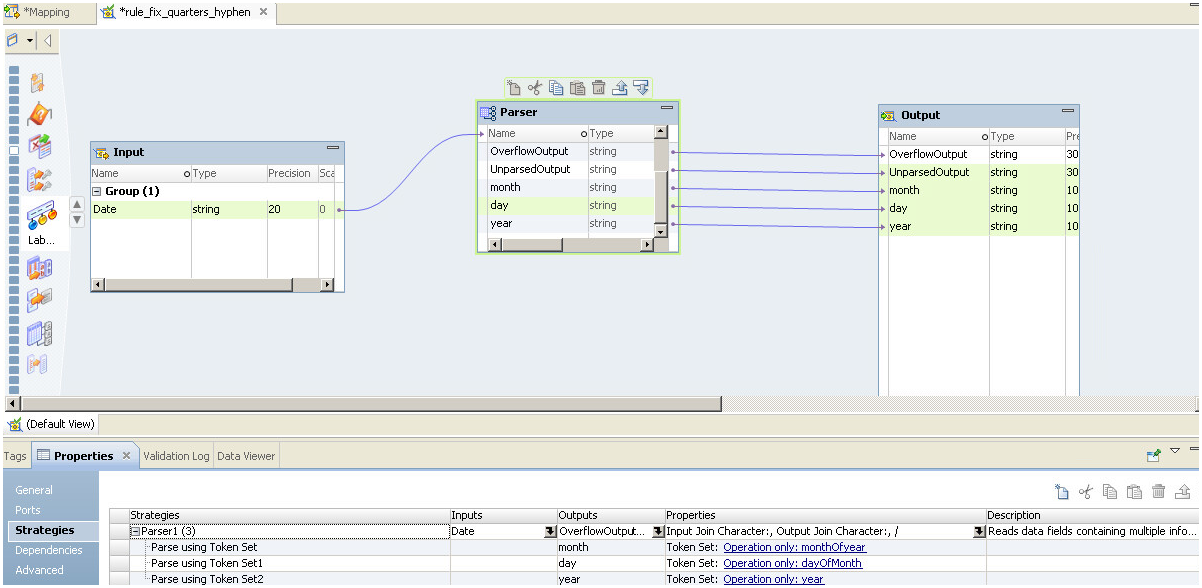

We need to identify the month number to replace the values for the quarter, so we will now proceed to parse the date in a new mapping. Informatica Data Quality comes with some in-built features to parse dates, but we are not going to use them in this example. Instead, we are going to parse the date manually, using a tokenizer to split it into three columns: day, month and year. Click on create a mapplet and add an input, an output, and a parser transformation. We will parse the date field using the slash character as the delimiter and use regular expressions to validate the day, month and year. It’s important to note that the parser transformation creates two output ports by default: one for data that do not meet the parser regular expression, whilst the other is the overflow port that contains data if there are not enough output ports to keep the correctly parsed values. In the parser transformation, select the token parser when prompted:

|

Figure 13: Configuration of the parser type in the parser transformation |

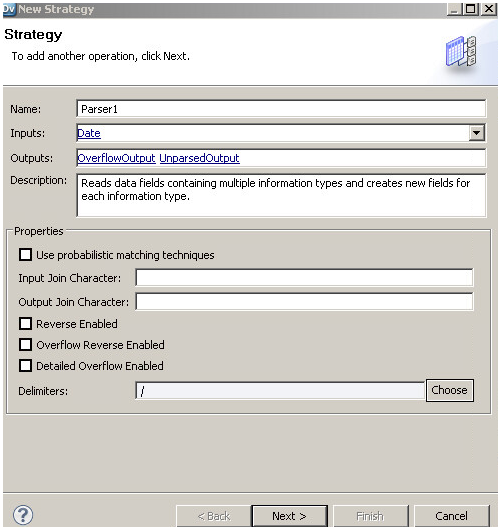

Name the port in the input as date, then drag and drop the date from the input transformation to the token parser; then go to the parser transformation and add one strategy with three outputs, day, month and year. Each of these ports will have a custom regular expression with “/” as the delimiter.

|

Figure 14: Parser configuration |

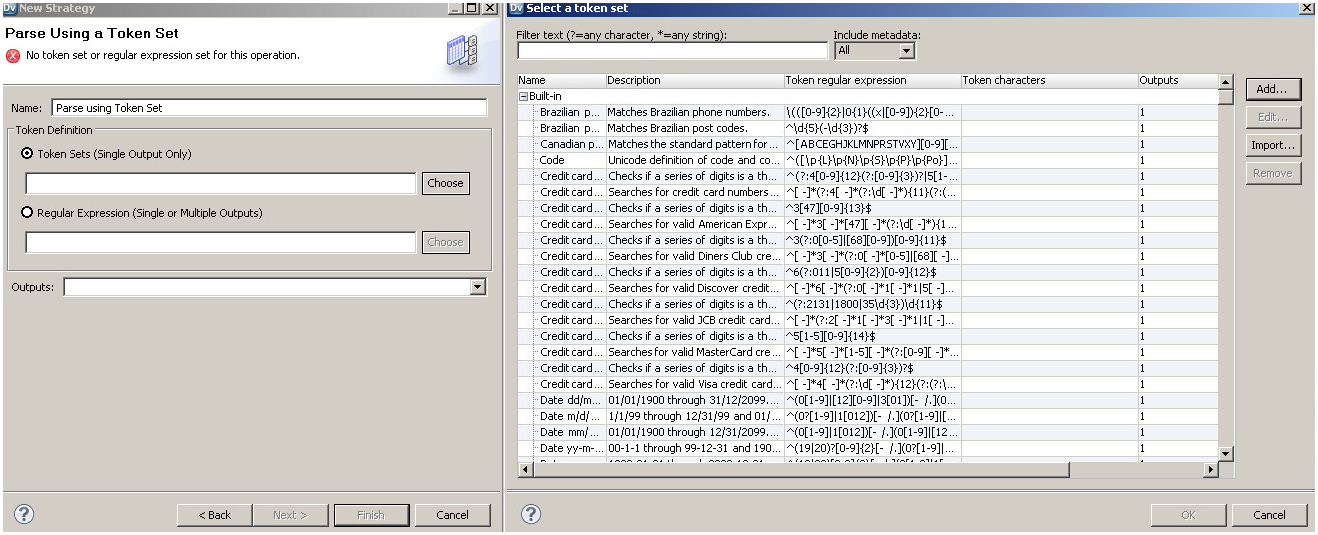

Click on next and select token parser, and then select token sets in the token definition and click on choose. In the token set selection, we create a new expression for every output port of the parser transformation:

|

Figure 15: Configuration of token sets for parsing data |

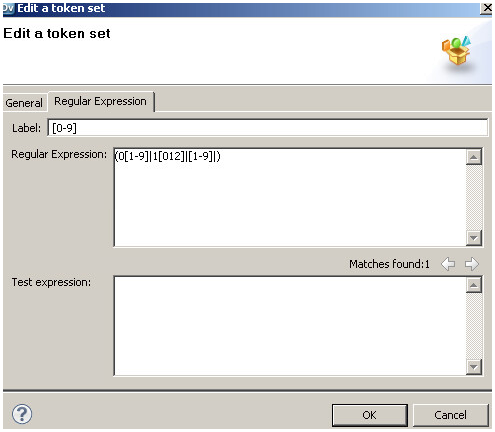

We add the monthOfYear custom token set with the regular expression shown in image 16:

|

Figure 16: Token set configuration for the month number |

Once the token set has been added, assign it to the month column. We have to repeat the same process with the proper regular expressions for each column, and once all the columns have been assigned, the parser mapplet should look like this in image 17:

|

Figure 17: Mapplet for parsing the date into day, month, and year columns using a parser transformation |

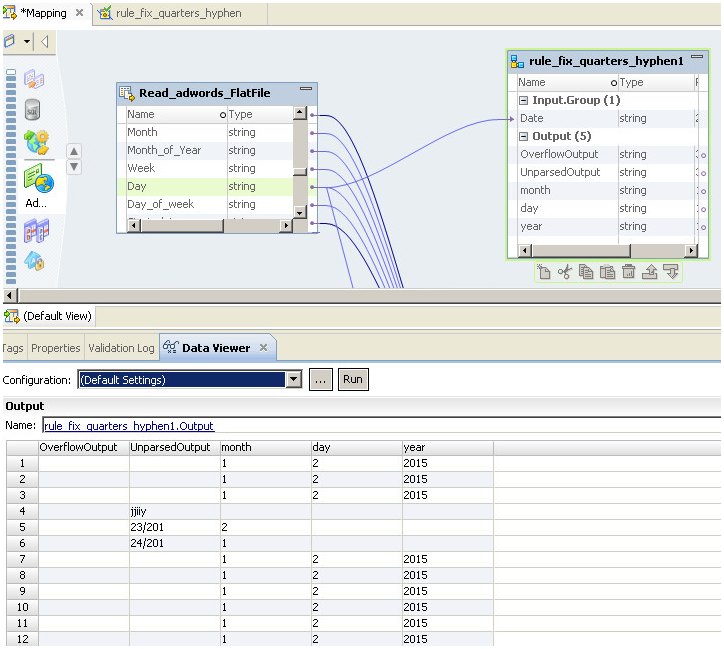

We can now add the mapplet to the mapping to get a preview of the results:

|

Figure 18: Results preview of the parsing mapplet |

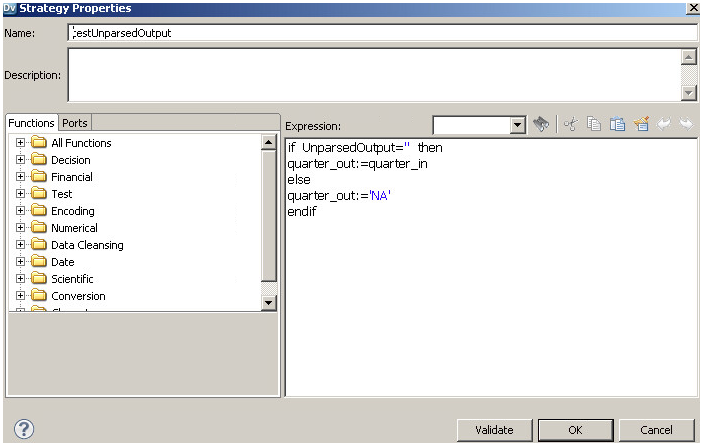

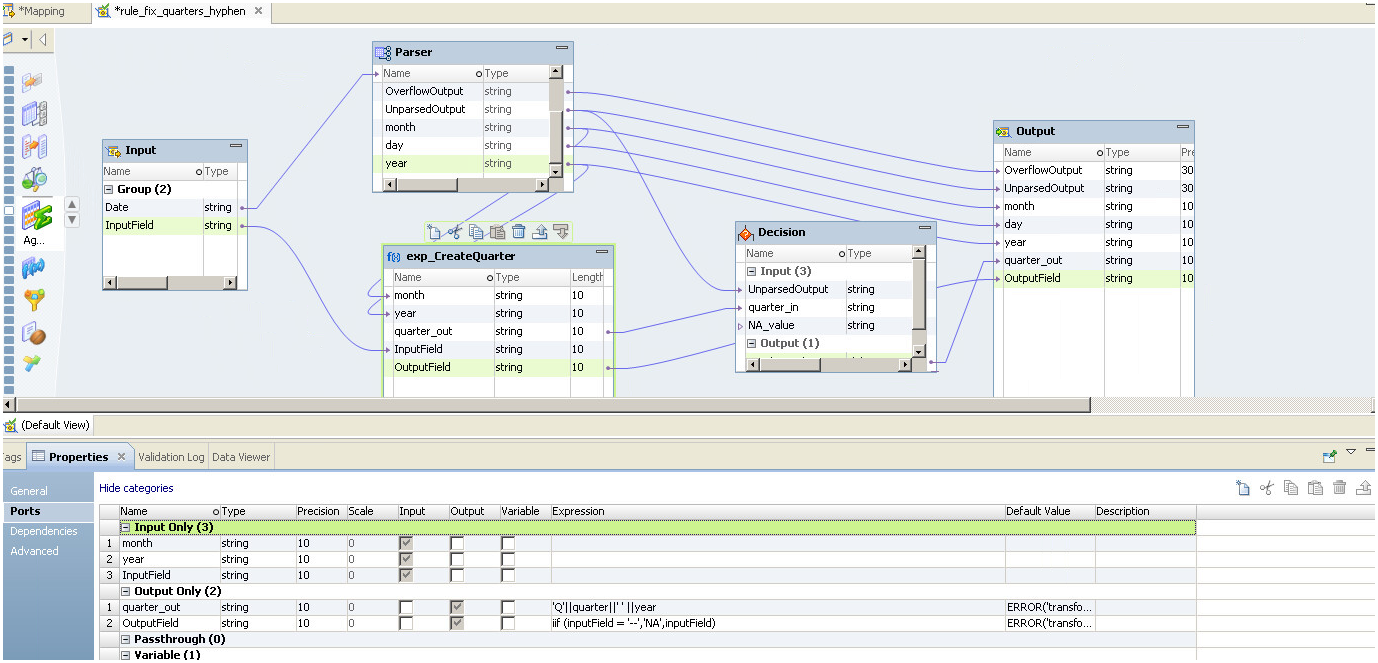

We can see that there are some records that do not meet the regular expressions we set in the parser, so we have to set a default value for those records that have populated the UnparsedOutput port. Continuing with the mapplet, we are going to add the port quarter to the output, and replace the hyphens with the string “NA”. In order to do this, we need to add two expressions to the mapping, one to create the quarter column and the other to replace the hyphens with “NA”. We can do this by creating an expression with one port to concatenate the quarter with the year; in the same expression we add a port to replace the hyphens for “NA”, and then make a decision to populate (or not) the quarter output, depending on the unparsed port from the parser: if it is empty, then the date was parsed correctly and the quarter field will be populated; if not, the date was wrong, and the quarter will be populated with “NA”. The code in the decision strategy will look like this:

|

Figure 19: Expression to generate the quarter based on the result of the parsing of the date |

Our mapplet should look like image 20:

|

Figure 20: Standardization mapping with quarter parsing |

2. Creation of the standardization mapping

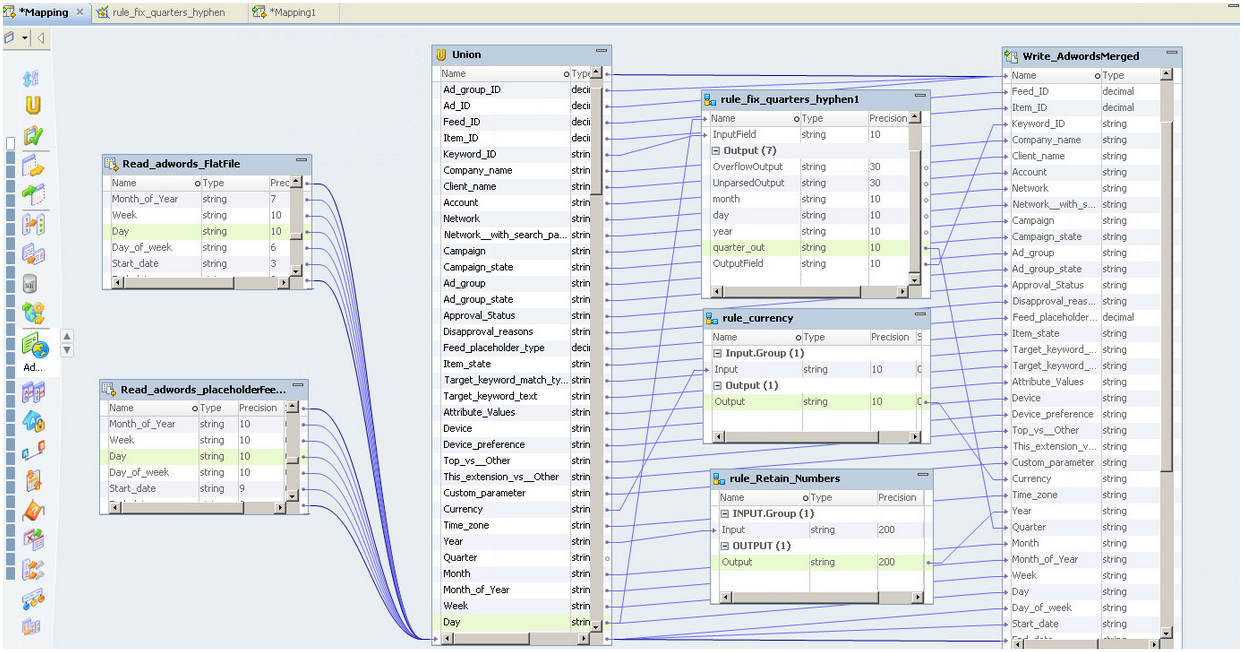

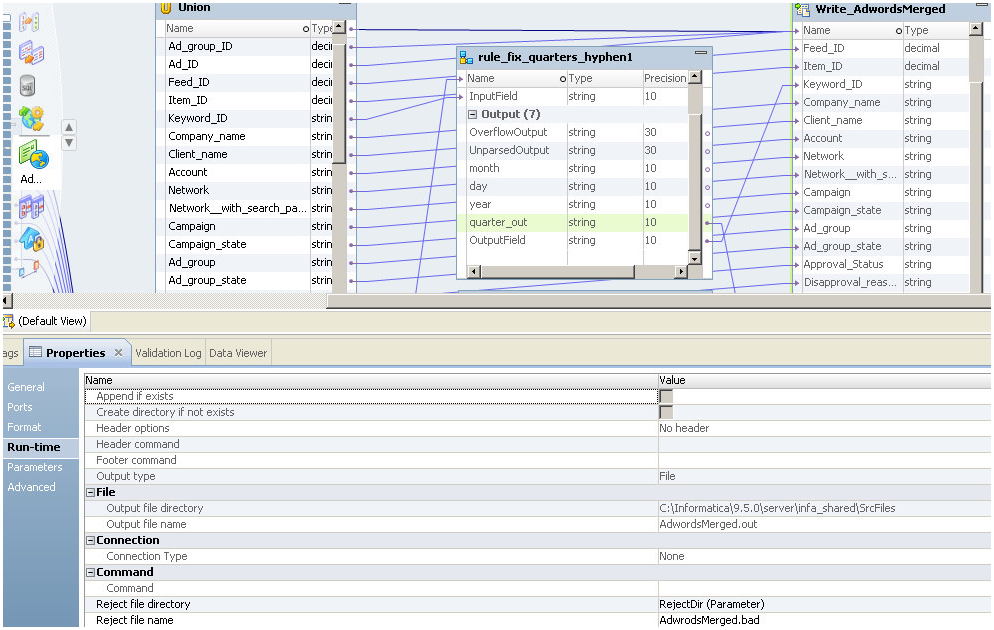

Now we can validate all the mapplets and add them to a mapping where we can also add the source file and a new output file with the same structure. This file will be the standardized data file. We are also going to add a union to merge the data from two different dates. The mapping should look like the one in image 21:

|

Figure 21: Final standardization mapping |

After running the mapping, we can profile the generated file and check that it is meeting the rules that we defined at the beginning. We can see the path of the output file in the Run-time tab of the properties of the target:

|

Figure 22: Properties of the output transformation. We can see the name and path of the output generated in the Run-time tab |

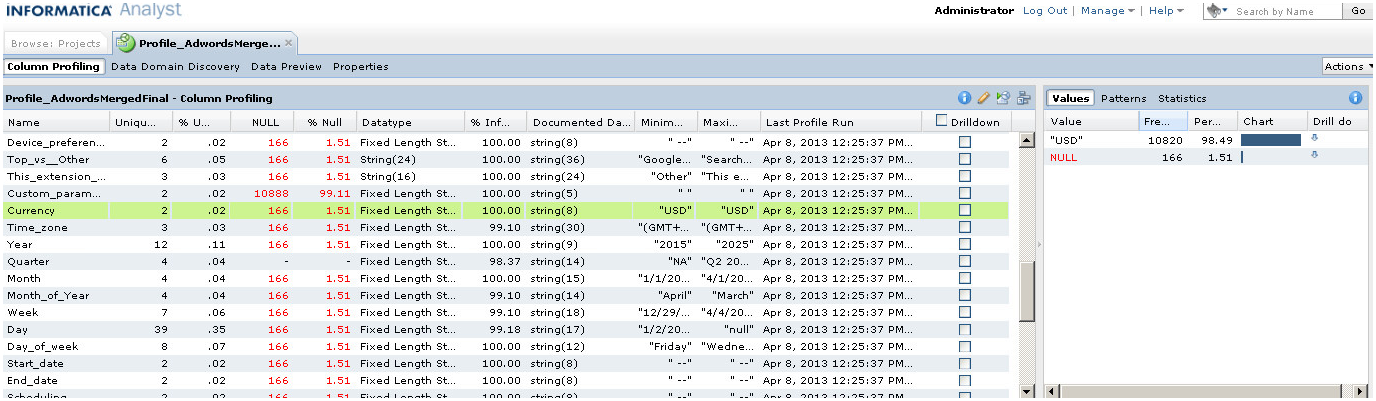

3. Results

Now we are ready to review the profile of the output file. For the currency column, we can see that the only value available is USD. If any other value appears, we can simply add it to a new column in the reference table. Notice that NULL values are appearing as we didn’t set a rule to standardize the NULL values to “NA”.

|

Figure 23: Results of the standardization process for the currency column |

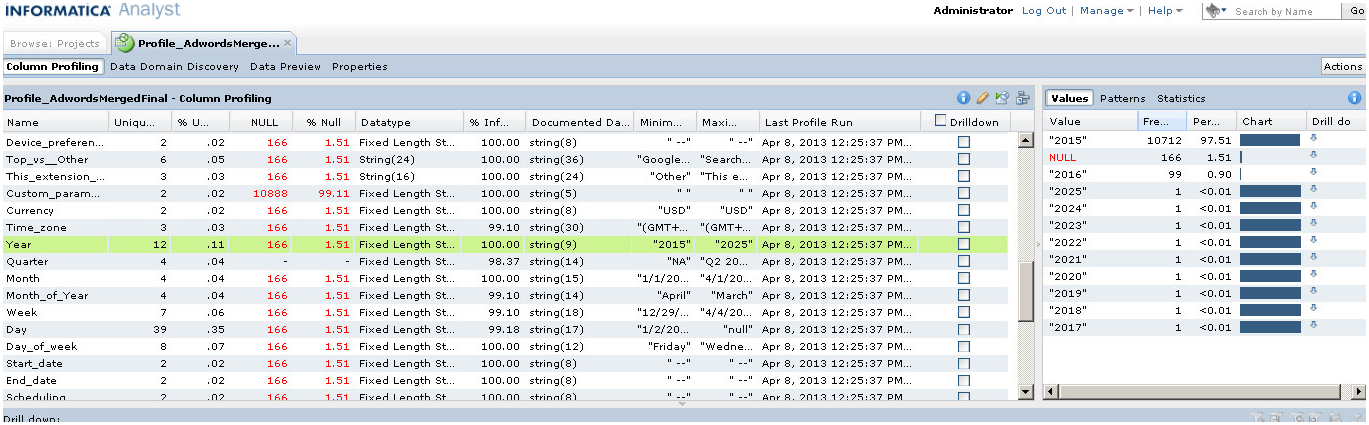

The year column is now standardized in the merged file and we have only numerical values after the data masking and standardization:

|

Figure 24: Results of the standardization process for the year column |

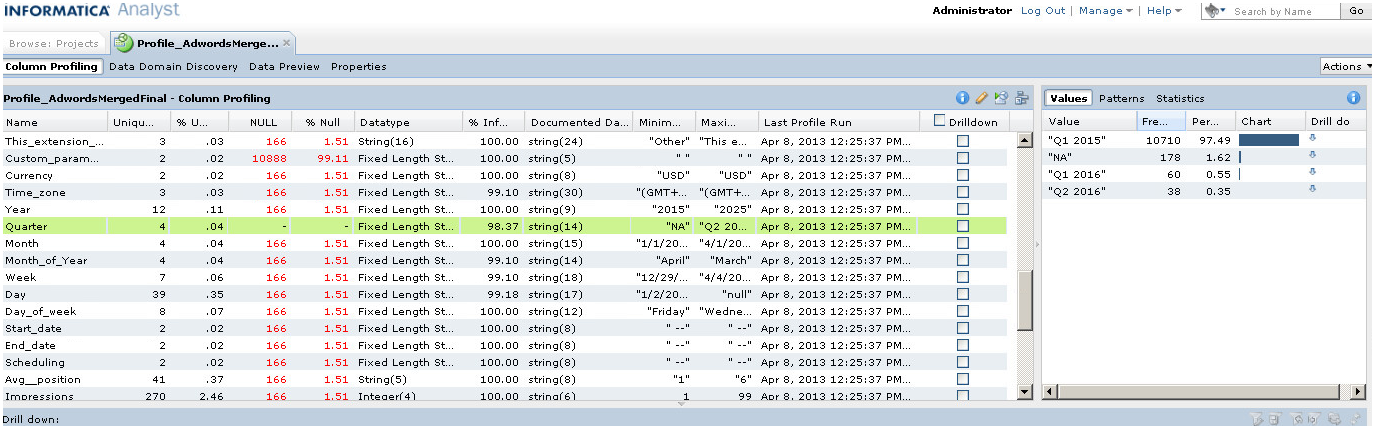

We have fixed the quarter column to obtain standard values (quarterName Year) thanks to the expressions added to the mapplet:

|

Figure 25: Results of the standardization process for the quarter column |

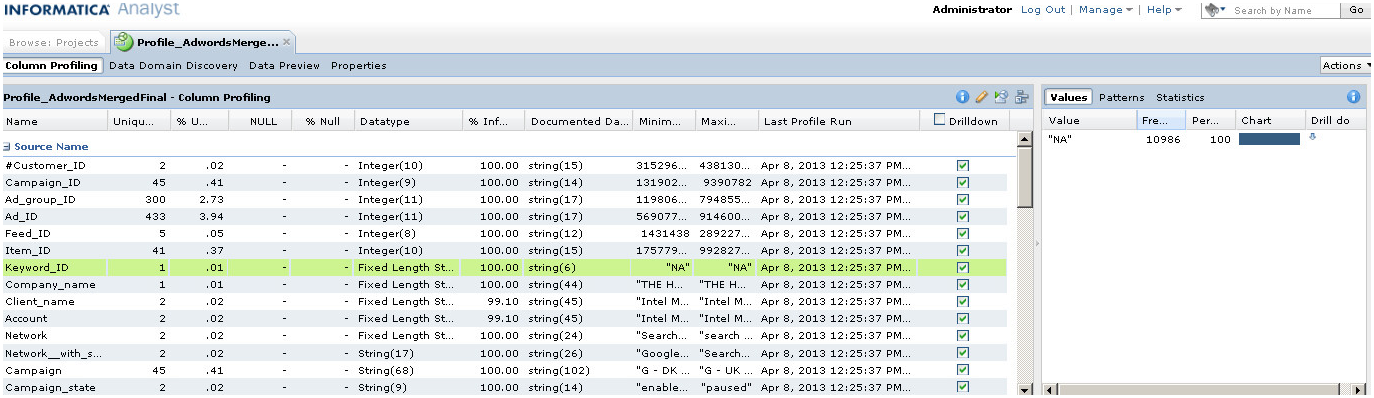

We have also fixed the hyphens in the keywordID column:

|

Figure 26: Results of the standardization process for the Keyword ID column |

Conclusion

This concludes this article about Data Standardization with Informatica Data Quality. We have seen that Informatica has a lot of useful features to standardize data, and that it is a very user-friendly tool whilst still offering enough flexibility to perform complex standardization tasks. Stay tuned for the last article in this series, where we are going to explain Data Deduplication using Informatica Data Quality. If you would like to know more about the Data Quality Services we offer click here!