03 Mar 2017 DATA QUALITY WITH EDQ – PART 3: DATA DEDUPLICATION

Introduction

The first step in a data deduplication or consolidation process flow is record matching, the process of finding duplicate records in the data, that is, records which may relate to a single real-world entity. This is not a trivial task, since we need to identify duplicate records despite different formats and conventions, typos, missing data, etc.. EDQ comes with some built-in transformations to match records; their names are self-explanatory in most cases:

| • | Advanced Match: gives control over all the configuration options |

| • | Match Entities |

| • | Match Households |

| • | Match Individuals (Name, Address, DoB) |

| • | Match Individuals (Name, Address) |

There are also processors for a variety of different matching scenarios:

| • | Consolidate: matches and merges multiple datasets |

| • | Deduplicate: matches a single dataset |

| • | Enhance: adds information from one or more reference datasets |

| • | Group & Merge: gives a simple merge based on exact match only |

| • | Link: find matches between datasets but without merging the data |

The matching processors execute a series of ordered sub-processors where we can configure the logic to be used in the matching and the decision rules for candidate duplicate records, among other things. The sub-processors are:

| • | Input: select the attributes from the data stream included in the matching process |

| • | Identify: create identifiers to use in matching, i.e., what data will be used to identify records, and map the identifiers to attributes |

| • | Cluster: divide the data streams into clusters; this can be used to reduce the number of comparisons needed and thus the time necessary to run the process |

| • | Match: choose which comparisons to perform, and how to interpret them with match rules |

| • | Merge: use rules to merge matching records, to create a ‘best’ set of output records (optional) |

In the following section we are going to run through an example of data deduplication using EDQ.

1. Deduplicating Data using EDQ

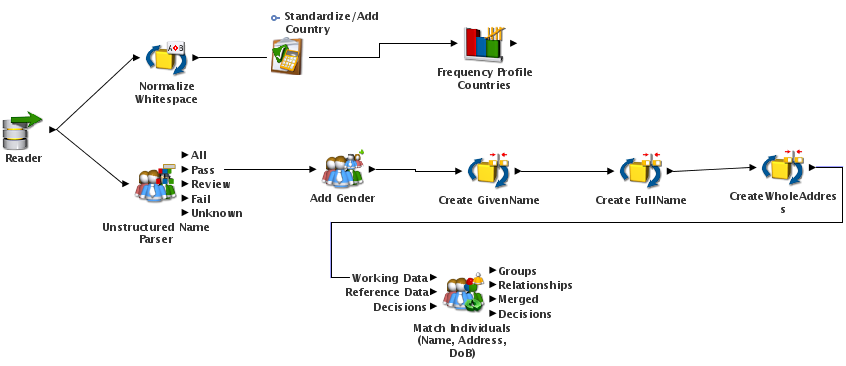

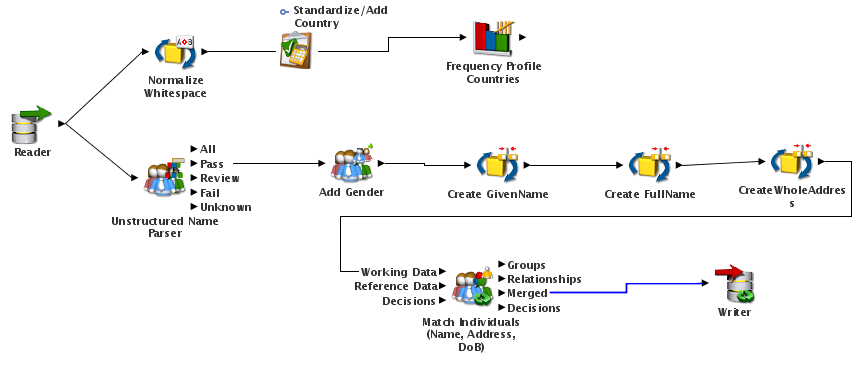

Let’s imagine that we have a dataset with a list of people, with many fields containing personal information, including full name, date of birth, and address. We want to see if we have duplicate persons in the table, and to do this, we are going to use EDQ to find matching records based on the full name, address, and date of birth. For this specific case, the best processor to use is Match Individuals (Name, Address, DoB). We’re going to add this processor to a new process, connecting all the fields available in the dataset as identifiers:

|

Figure 1: Process for data standardization and deduplication in EDQ. The steps Create GivenName, Create FullName, and Create WholeAddress are concatenations of strings to form the full name in a single string and the whole address in another string. |

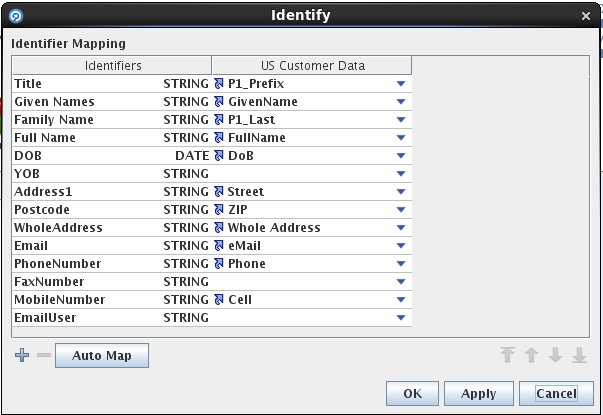

|

Figure 2: Matching identifiers of the Match Individuals processor in EDQ. |

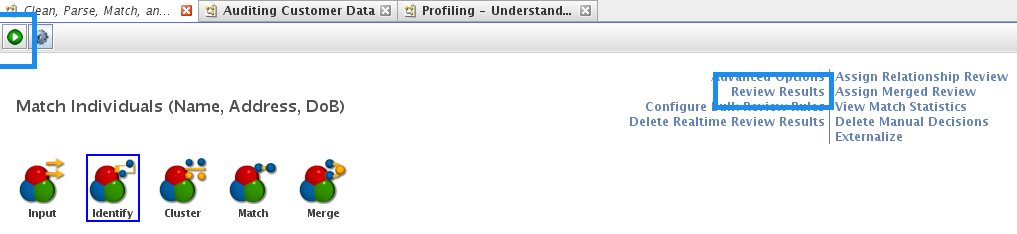

We can run the process with this configuration to review the results:

|

Figure 3: Running the Match Individuals processor in EDQ. |

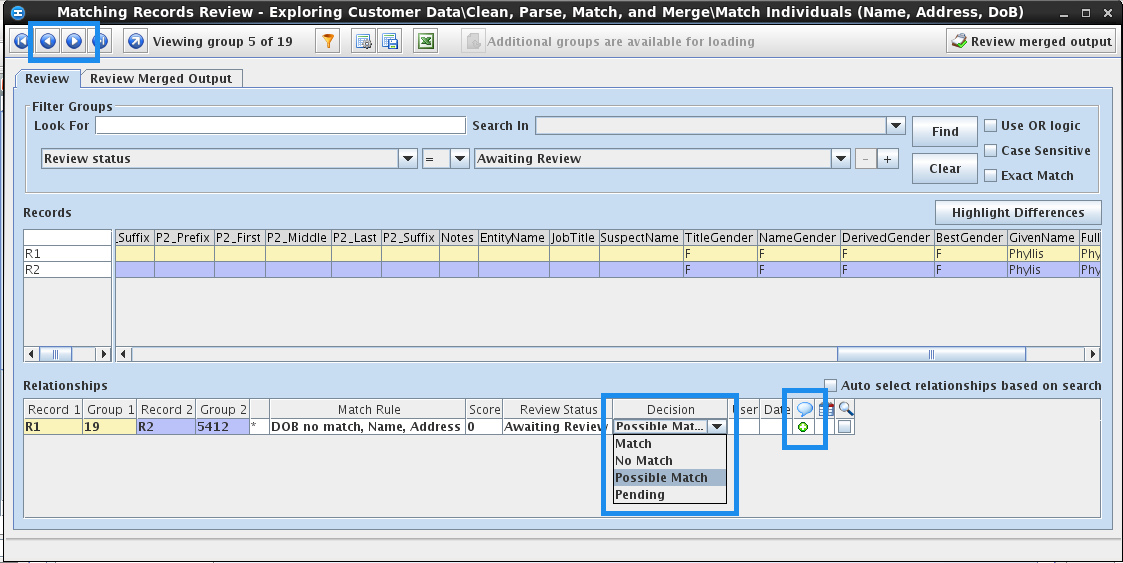

In the new window that opens we can review the matching records, set decisions for actions for each individual case, and even add comments:

|

Figure 4: Results of the Matching Individuals processor in EDQ. We can review and set a decision for each pair of duplicate candidates individually. |

These results can also be exported to Excel for further investigation. Now we’re going to merge the duplicated records using the Merge sub-processor of the Match Individuals processor; we simply double-click on the Merge sub-processor and check the option ‘Output unrelated records’. We can write the deduplicated records into a new dataset; to do so, we have to add a new Write processor connected to the Merged output of the Match Individuals processor:

|

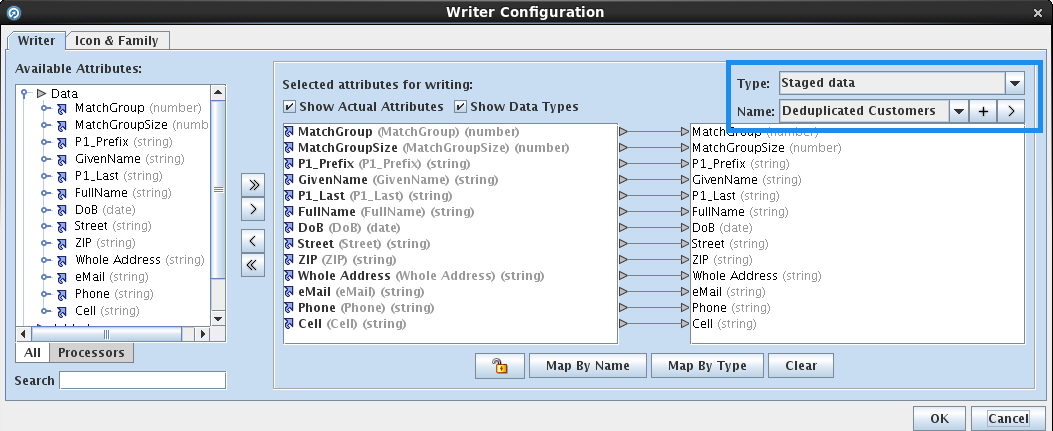

Figure 5: The data standardization and deduplication process in EDQ, with a Writer processor to export the results to a new dataset. |

The records will be written into a new staged dataset called Deduplicated Customers:

|

Figure 6: Writing the results of the process into a new dataset in EDQ. |

|

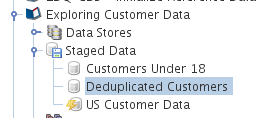

Figure 7: New staged data created from the results of the process of deduplication in EDQ. |

After running the process, we can see that the new dataset with the deduplicated customers has been created in Staged Data:

|

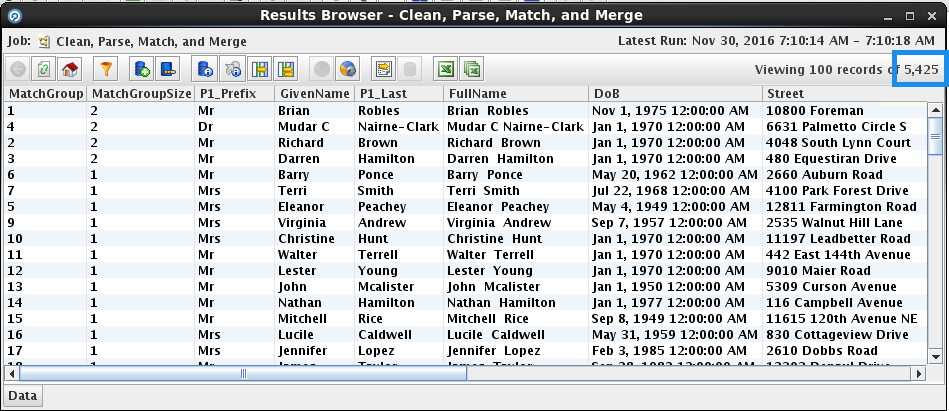

Figure 8: Dataset created from the standardization and deduplication process in EDQ. |

And if we right-click on the Writer processor and select ‘Show results in new window’, we can see that some of the records have a MatchGroupSize field equal to 2, which means that these records come from the merge of two records.

Conclusion

Data deduplication is a complex task, one of the most complex manual tasks in the quality assurance process, where tools like EDQ are extremely handy. With EDQ we were able to find matching records using complex rules and to merge the records belonging to a single entity, all in a few clicks. The ability to review each pair of duplicate candidates individually and to decide case-by-case proved to be really helpful in reducing the risk of merging records corresponding to different real-world entities in the deduplication process. You can try this and other examples of EDQ usage by downloading the latest virtual machine from Oracle, available here. This concludes our series of blog articles about Oracle EDQ. You can find the previous posts in the links below:

Data quality with EDQ

Part 1: Data Profiling

Part 2: Data Standardization

Have you tried EDQ in any of your projects? What was your experience using EDQ like? If you have anything you’d like to share, or if there are any questions you’d like to raise, please leave your comments below! In the next couple of blog posts we’ll explain how to profile, standardize and deduplicate data with Informatica. Stay tuned! Click here if you would like to know more about the Data Quality Services we offer!