Custom KNIME Auditing Solution – V2

25 Jul 2024

In production data environments, auditing is key to tracking user executions and accesses, especially on highly secure platforms where you need to know who is executing what, when, and how.

In the context of a KNIME Server deployment for a customer with strict security standards, we developed a solution for auditing KNIME a few years ago, which we can now tag as KNIME Auditing Version 1. In this blog post, we are going to explain KNIME Auditing Version 2, an updated solution that has replaced and improved the previous approach.

KNIME Auditing Version 1 – The Previous Solution with Open-Source KNIME Plugins

KNIME Auditing Version 1 is fully explained in the blog post “Adding Auditing Capabilities to KNIME with open-source plugins”. In short, this approach consisted of adding two open-source KNIME plugins to each KNIME Executor VM to extend the KNIME Executor logging capabilities and to send audit events as XML messages to an ActiveMQ (AMQ) queue. For each node executed in a KNIME job, four audit events were sent, detailing when the node started, its execution status on finishing, its dependencies on other nodes, and the parameters used for execution.

The decision to develop two KNIME plugins, rather than a Python script to process the KNIME log file, was driven by three key reasons, shown below and elaborated on in the above-mentioned blog post:

- The information available in the log is limited. The first plugin (Logging Extended) solved this.

- Parsing the log requires a tool that runs in the background and turns the log file into audit events for the audit system. The second plugin (AMQ Logger) processed the log lines inside the JVM and sent the audit events directly to AMQ, even before the log lines were written to the log file, thus eliminating the need to parse the log file.

- The log file is owned by the user or service account running KNIME. In the KNIME Executor, the job owner also owns the logs, making them susceptible to changes and unreliable for auditing. The combination of the two plugins meant that we no longer needed to parse the log, so it did not matter if it could be tampered with.

While that solution worked and initially satisfied the customer’s needs, we encountered a volume problem while handling the events sent to the audit system. Sending four events for each node basically meant that complex workflows or workflows containing loops were generating hundreds or even thousands of events, flooding the entire audit server with hard-to-track information.

KNIME Auditing Version 2 – Solving the Volume Problem & Simplifying the Approach

To solve the volume problem and simplify the solution, we have designed a new audit solution for KNIME Server. Rather than sending four audit events for each node executed in a KNIME job, the new solution sends just one audit event per job execution, drastically reducing the number of audit events sent. Moreover, for each executed job, instead of sending detailed information about the entire executed workflow to the audit system, we send only basic job information in the audit event.

More detailed information is stored in an audit folder on the network share used for the KNIME workflow repository. This folder includes the workflow and job execution details, as well as the workflow configuration files in KNWF format. The audit folder is only accessible to admins and auditors.

This new approach not only alleviates the audit server load by sending only job information (and a link to the audit folder for more details if needed), but also allows administrators and auditors to recreate the executed workflow in the KNIME UI with the exact same configuration, thanks to the KNWF file in the audit folder. This is possible even if the user has deleted the job or the workflow in the KNIME Server repository.

Note that we have implemented this solution with Python, which in principle contradicts the three reasons that justified our previous solution, listed above. However, there is an explanation for each point:

- With reference to point 1 above, we developed the previous solution three years ago, and KNIME has since added more features. One of these is the KNIME Server REST API, which can be used to extract job and workflow information (including downloading a copy of the workflow), so there is no longer any need for extra plugins to add more information to the logs.

- Point 2 still applies, as we did need to develop some Python logic to tail the log file and send the audit event. However, our customer found a Python application easier to maintain than two custom KNIME plugins written in Java.

- Regarding point 3, our new approach uses the Tomcat server log, which is not accessible to the KNIME Executor and is therefore completely inaccessible to users, ensuring that the log file cannot be tampered with.

In the following section we’ll explain in more detail how this Python solution was developed to extract the job ID from the Tomcat logs, generate the audit folder with the workflow configuration, and then send the audit event.

A Deeper Dive into KNIME Auditing Version 2

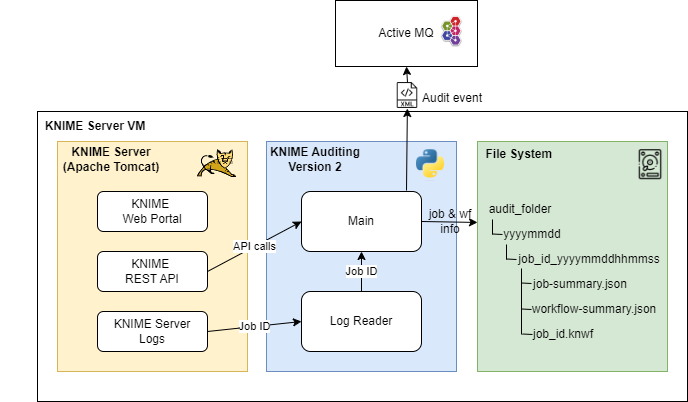

Figure 1: KNIME Auditing Version 2

KNIME Auditing Version 2 is a multithreaded Python application. One thread, the Log Reader, reads the KNIME Server logs to extract the IDs of finished jobs. It reads the new lines of the current daily log file and searches for lines containing the strings EXECUTION_FINISHED or EXECUTION_FAILED, which mark the end of a job execution and include the job ID. Each job ID is then put on a thread-safe FIFO queue, from which it is retrieved by the main thread, responsible for generating the audit folder and sending the audit event:

25-Apr-2024 16:58:53.721 INFO [https-jsse-nio2-8443-exec-23] com.knime.enterprise.server.jobs.WorkflowJobManagerImpl.loadWorkflow Loading workflow '/TeamA/TestWorkflow' for user 'victor'

25-Apr-2024 16:58:53.727 INFO [https-jsse-nio2-8443-exec-23] com.knime.enterprise.server.executor.msgq.RabbitMQExecutorImpl.loadWorkflow Loading workflow '/TeamA/TestWorkflow (TestWorkflow 2024-04-25 16.58.53; f9ff25f9-6ac8-489b-ad8e-73c421f8b119)' via executor group 'knime-jobs'

25-Apr-2024 16:59:00.351 INFO [https-jsse-nio2-8443-exec-17] com.knime.enterprise.server.executor.msgq.RabbitMQExecutorImpl.resetWorkflow Resetting workflow of job '/TeamA/TestWorkflow (TestWorkflow 2024-04-25 16.58.53; f9ff25f9-6ac8-489b-ad8e-73c421f8b119)' via executor group 'knime-jobs', executor '01f57cec-85de-496f-81d2-b24548e8f47e@node003.clearpeaks.com'

25-Apr-2024 16:59:00.352 INFO [https-jsse-nio2-8443-exec-17] com.knime.enterprise.server.jobs.WorkflowJobManagerImpl.execute Executing job '/TeamA/TestWorkflow (TestWorkflow 2024-04-25 16.58.53; f9ff25f9-6ac8-489b-ad8e-73c421f8b119)' (UUID f9ff25f9-6ac8-489b-ad8e-73c421f8b119)

25-Apr-2024 16:59:00.352 INFO [https-jsse-nio2-8443-exec-17] com.knime.enterprise.server.executor.msgq.RabbitMQExecutorImpl.execute Executing job '/TeamA/TestWorkflow (TestWorkflow 2024-04-25 16.58.53; f9ff25f9-6ac8-489b-ad8e-73c421f8b119)' via executor group 'knime-jobs', executor '01f57cec-85de-496f-81d2-b24548e8f47e@node003.clearpeaks.com'

25-Apr-2024 16:59:00.390 INFO [Distributed executor message handler for Envelope(deliveryTag=740, redeliver=false, exchange=knime-executor2server, routingKey=job.f9ff25f9-6ac8-489b-ad8e-73c421f8b119)] com.knime.enterprise.server.executor.msgq.StatusMessageHandler.updateJob Job: TestWorkflow 2024-04-25 16.58.53 (f9ff25f9-6ac8-489b-ad8e-73c421f8b119) of owner victor finished with state EXECUTION_FAILED

25-Apr-2024 16:59:00.749 INFO [https-jsse-nio2-8443-exec-7] com.knime.enterprise.server.executor.msgq.RabbitMQExecutorImpl.doSendGenericRequest Sending generic message to job '/TeamA/TestWorkflow (TestWorkflow 2024-04-25 16.58.53; f9ff25f9-6ac8-489b-ad8e-73c421f8b119)' via executor group 'knime-jobs', executor '01f57cec-85de-496f-81d2-b24548e8f47e@node003.clearpeaks.com'

25-Apr-2024 16:59:11.277 INFO [KNIME-Job-Lifecycle-Handler_1] com.knime.enterprise.server.jobs.WorkflowJobManagerImpl.discardInternal Discarding job '/Groups/tst_dev_application_knime_AP1_User/TestWorkflow (TestWorkflow 2024-04-18 16.54.05; f4660d17-5fbf-4440-a418-73772b6e86a6)' (UUID f4660d17-5fbf-4440-a418-73772b6e86a6)

25-Apr-2024 16:59:11.981 INFO [KNIME-Executor-Watchdog_1] com.knime.enterprise.server.util.ExecutorWatchdog.scheduleThreads Checking for vanished executors in 100000ms

Figure 2: Portion of a KNIME Server Log during a job execution failure (notice the highlighted log line ending with EXECUTION_FAILED)
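The Log Reader thread described above can be sketched with the standard library alone. This is a minimal sketch, not the code from the authors' repository: it assumes that the end of a job always appears in the `(<job UUID>) of owner <user> finished with state <STATE>` shape seen in Figure 2, and uses a plain `queue.Queue` as the thread-safe FIFO queue. The names `parse_finished_line`, `follow_log` and `FinishedJob` are hypothetical.

```python
import os
import queue
import re
import threading
from typing import NamedTuple, Optional

# Hypothetical helpers. ASSUMPTION: the finish line keeps the shape in Figure 2:
# "... (<job UUID>) of owner <user> finished with state EXECUTION_FAILED"
_FINISHED_RE = re.compile(
    r"\((?P<job_id>[0-9a-f]{8}(?:-[0-9a-f]{4}){3}-[0-9a-f]{12})\)"
    r" of owner (?P<owner>.+?) finished with state "
    r"(?P<state>EXECUTION_FINISHED|EXECUTION_FAILED)\s*$"
)


class FinishedJob(NamedTuple):
    job_id: str
    owner: str
    state: str


def parse_finished_line(line: str) -> Optional[FinishedJob]:
    """Return the finished job announced by a log line, or None."""
    m = _FINISHED_RE.search(line)
    if m is None:
        return None
    return FinishedJob(m["job_id"], m["owner"], m["state"])


def follow_log(path: str, jobs: "queue.Queue[FinishedJob]",
               stop: threading.Event, poll_seconds: float = 1.0) -> None:
    """Tail `path` from its current end and queue every finished job."""
    with open(path, "r", encoding="utf-8", errors="replace") as fh:
        fh.seek(0, os.SEEK_END)  # ignore lines written before start-up
        pending = ""
        while not stop.is_set():
            chunk = fh.readline()
            if not chunk:
                stop.wait(poll_seconds)  # no new data: sleep, but stay stoppable
                continue
            pending += chunk
            if not pending.endswith("\n"):
                continue  # partial line: wait for Tomcat to finish writing it
            job = parse_finished_line(pending)
            pending = ""
            if job is not None:
                jobs.put(job)  # queue.Queue is thread-safe and FIFO
```

The main thread would start `follow_log` in a daemon `threading.Thread` and block on `jobs.get()`. Switching to the next daily log file at midnight is not handled here; a fuller version would need to detect the new file and reopen it.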

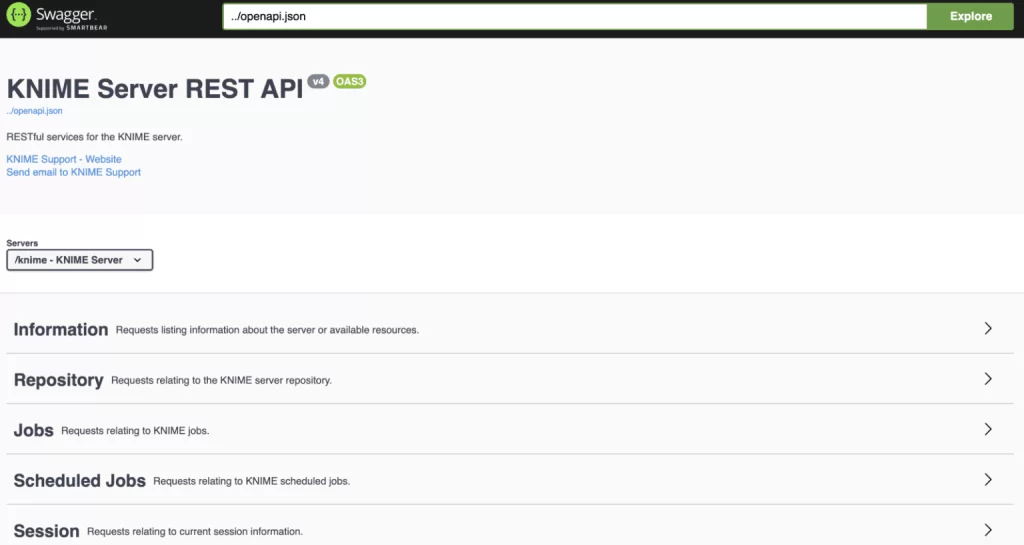

Once the job ID has been retrieved and shared with the main thread of the Python application, it is used to perform three API calls to the KNIME Server REST API to obtain all the job and workflow information:

- GET https://<serverurl>:<port>/knime/rest/v4/jobs/{job_id} to retrieve the job information, which is stored in a JSON file called job-summary.json.

- GET https://<serverurl>:<port>/knime/rest/v4/jobs/{job_id}/workflow-summary?format=JSON&includeExecutionInfo=true to retrieve the workflow information, which is stored in a JSON file called workflow-summary.json.

- GET https://<serverurl>:<port>/knime/rest/v4/repository/{workflow_path}:data to download the workflow KNWF file, which can be imported to recreate the workflow. The file is stored as {job_id}.knwf; note that a KNWF file is actually a ZIP file.

Figure 3: KNIME Server REST API Swagger
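The three calls listed above can be sketched with Python's standard `urllib`. This is a hedged sketch rather than the authors' client: it assumes HTTP Basic authentication, and that `job-summary.json` exposes the repository path of the workflow in a field named `workflow`. The helper names (`job_urls`, `workflow_download_url`, `basic_auth_getter`, `fetch_job`) are hypothetical. The HTTP getter is passed in as a function so the file handling can be exercised without a server.

```python
import base64
import json
import os
import urllib.parse
import urllib.request
from typing import Callable, Dict

API = "/knime/rest/v4"


def job_urls(base_url: str, job_id: str) -> Dict[str, str]:
    """Map each output file name to the REST endpoint that fills it."""
    base = base_url.rstrip("/") + API
    return {
        "job-summary.json": f"{base}/jobs/{job_id}",
        "workflow-summary.json": (
            f"{base}/jobs/{job_id}/workflow-summary"
            "?format=JSON&includeExecutionInfo=true"
        ),
    }


def workflow_download_url(base_url: str, workflow_path: str) -> str:
    # Repository paths such as "/TeamA/TestWorkflow" may contain spaces.
    quoted = urllib.parse.quote(workflow_path.lstrip("/"), safe="/")
    return f"{base_url.rstrip('/')}{API}/repository/{quoted}:data"


def basic_auth_getter(user: str, password: str,
                      timeout: float = 60) -> Callable[[str], bytes]:
    """ASSUMPTION: the server accepts HTTP Basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode("ascii")

    def get(url: str) -> bytes:
        req = urllib.request.Request(url, headers={"Authorization": f"Basic {token}"})
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.read()

    return get


def fetch_job(base_url: str, job_id: str, out_dir: str,
              get: Callable[[str], bytes]) -> str:
    """Store both JSON summaries and the KNWF file; return the KNWF path."""
    os.makedirs(out_dir, exist_ok=True)
    for name, url in job_urls(base_url, job_id).items():
        with open(os.path.join(out_dir, name), "wb") as fh:
            fh.write(get(url))
    with open(os.path.join(out_dir, "job-summary.json"), encoding="utf-8") as fh:
        workflow_path = json.load(fh)["workflow"]  # ASSUMPTION: field name
    knwf_path = os.path.join(out_dir, f"{job_id}.knwf")
    with open(knwf_path, "wb") as fh:
        fh.write(get(workflow_download_url(base_url, workflow_path)))
    return knwf_path
```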

The information retrieved from all the API calls is stored in the audit folder in the file system. For a KNIME Server deployment with High Availability (as is the case for our customer), we advise locating the audit folder on the same network share used for the KNIME repository, mounted as a file system on both KNIME Server nodes. The audit folder is organised by day, making it easy to apply time-based retention policies if necessary. Each job execution is stored in a folder named using the job ID and a timestamp.
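The day-based layout lends itself to two small helpers: one that builds the folder path for a job execution, and one that lists day folders old enough to fall outside a retention window. This is a hedged sketch; the helper names and the retention rule (whole day folders older than `keep_days` become deletion candidates) are assumptions, while the `<YYYYMMDD>/<job_id>-<YYYYMMDDHHMMSS>` naming is taken from the folder names shown in Figure 4.

```python
import os
from datetime import date, datetime, timedelta
from typing import Iterator


# Hypothetical helper: folder naming as seen in the audit folder listing.
def audit_job_dir(audit_root: str, job_id: str, executed_at: datetime) -> str:
    """Return <root>/<YYYYMMDD>/<job_id>-<YYYYMMDDHHMMSS>."""
    day = executed_at.strftime("%Y%m%d")
    stamp = executed_at.strftime("%Y%m%d%H%M%S")
    return os.path.join(audit_root, day, f"{job_id}-{stamp}")


# Hypothetical retention rule: only lists candidates, never deletes anything.
def expired_day_folders(audit_root: str, keep_days: int, today: date) -> Iterator[str]:
    """Yield day folders older than `keep_days` days."""
    cutoff = (today - timedelta(days=keep_days)).strftime("%Y%m%d")
    for name in sorted(os.listdir(audit_root)):
        # YYYYMMDD names sort chronologically, so a string comparison suffices.
        if len(name) == 8 and name.isdigit() and name < cutoff:
            yield os.path.join(audit_root, name)
```

Because a second execution of the same job gets a different timestamp, `audit_job_dir` naturally produces a separate folder for each run.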

If the same job is executed twice, which is possible in KNIME, there will be two job folders with the same job ID but different timestamps. Moreover, to minimise the size of the audit folder, the workflow KNWF file is unzipped and every file that is not needed to recreate the workflow with the exact same configuration is removed. Any intermediate data is also wiped during this process:

[root@node003|development|logs]# cd /knime/knimerepo/audit/
[root@node003|development|audit]# ls
20240416  20240418  20240424  20240425
[root@node003|development|audit]# cd 20240425/
[root@node003|development|20240425]# ls
2da5bf15-57ca-487f-99cc-9e67f9136be1-20240425145128
ca044773-bc26-4584-9c80-6c80c2585f6d-20240425095001
67c55db2-39e9-483f-90e7-9acfb952df07-20240425095103
f9ff25f9-6ac8-489b-ad8e-73c421f8b119-20240425165900
9b21efa4-2b70-42b5-8206-419c16c5b32b-20240425145215
[root@node003|development|20240425]# cd f9ff25f9-6ac8-489b-ad8e-73c421f8b119-20240425165900/
[root@node003|development|f9ff25f9-6ac8-489b-ad8e-73c421f8b119-20240425165900]# ls
f9ff25f9-6ac8-489b-ad8e-73c421f8b119.knwf  job-summary.json  workflow-summary.json

Figure 4: Example of content stored in the audit folder
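One way to do the cleaning is to copy only whitelisted members of the KNWF (ZIP) archive into a new archive. This sketch assumes that the workflow definition lives in `workflow.knime`, `workflowset.meta`, `workflow.svg`, each node's `settings.xml` and the `.artifacts/` folder (the files that survive in Figure 5), and that everything else, such as port data, is execution output that can be dropped. The whitelist and the function names are hypothetical, and whereas the process described here extracts the archive to a temporary folder, this variant filters the archive members directly.

```python
import zipfile
from typing import List

# Hypothetical whitelist: files that describe the workflow, not its data.
KEEP_FILES = {"workflow.knime", "workflowset.meta", "workflow.svg", "settings.xml"}
KEEP_DIRS = {".artifacts"}


def should_keep(member: str) -> bool:
    """Decide whether a KNWF (ZIP) member is needed to rebuild the workflow."""
    if member.endswith("/"):
        return False  # directory entries are recreated implicitly on extraction
    *dirs, base = member.split("/")
    return base in KEEP_FILES or any(d in KEEP_DIRS for d in dirs)


def clean_knwf(src: str, dst: str) -> List[str]:
    """Copy only whitelisted members of `src` into a new archive `dst`."""
    kept = []
    with zipfile.ZipFile(src) as zin, zipfile.ZipFile(dst, "w") as zout:
        for info in zin.infolist():
            if should_keep(info.filename):
                # Reusing the ZipInfo keeps the original name, date and compression.
                zout.writestr(info, zin.read(info))
                kept.append(info.filename)
    return kept
```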

As well as the cleaning mentioned above, we also process all the settings.xml files within the KNWF to read the ‘paths’ entry of nodes such as CSVReader to extract the datasets being accessed in each workflow node. This allows the administrator to quickly identify which datasets are being queried by each user:

[root@node003|development|f9ff25f9-6ac8-489b-ad8e-73c421f8b119-20240425165900]# unzip f9ff25f9-6ac8-489b-ad8e-73c421f8b119.knwf
Archive:  f9ff25f9-6ac8-489b-ad8e-73c421f8b119.knwf
   creating: TestWorkflow/
   creating: TestWorkflow/.artifacts/
   creating: TestWorkflow/CSV Reader (#3)/
   creating: TestWorkflow/Credentials Configuration (#2)/
   creating: TestWorkflow/Kerberos Initializer (#1)/
   creating: TestWorkflow/tmp/
  inflating: TestWorkflow/workflow.svg
  inflating: TestWorkflow/workflow.knime
  inflating: TestWorkflow/workflowset.meta
  inflating: TestWorkflow/Kerberos Initializer (#1)/settings.xml
  inflating: TestWorkflow/Credentials Configuration (#2)/settings.xml
  inflating: TestWorkflow/.artifacts/workflow-configuration-representation.json
  inflating: TestWorkflow/.artifacts/workflow-configuration.json
  inflating: TestWorkflow/CSV Reader (#3)/settings.xml

Figure 5: Example of unzipping a cleaned KNWF file in the audit folder
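The `settings.xml` scan can be sketched with `xml.etree.ElementTree`. KNIME stores node settings as nested `config` elements holding `entry` elements with `key` and `value` attributes; this sketch assumes that a dataset location sits in an `entry` whose key is `path` or `paths`. Those keys vary between nodes and node versions, so `PATH_KEYS` is a hypothetical, tunable set, as are the function names. Namespaces are ignored by comparing local tag names only.

```python
import xml.etree.ElementTree as ET
import zipfile
from typing import Iterable, List

# Hypothetical keys under which reader nodes keep their input location.
PATH_KEYS = frozenset({"path", "paths"})


def _local(tag: str) -> str:
    """Strip an ElementTree '{namespace}' prefix from a tag name."""
    return tag.rsplit("}", 1)[-1]


def paths_from_settings(xml_bytes: bytes, keys: Iterable[str] = PATH_KEYS) -> List[str]:
    """Return the non-empty values of matching <entry> elements, in order."""
    wanted = set(keys)
    found: List[str] = []
    for el in ET.fromstring(xml_bytes).iter():
        if _local(el.tag) == "entry" and el.get("key") in wanted:
            value = el.get("value")
            if value and value not in found:
                found.append(value)
    return found


def paths_from_knwf(knwf_path: str, keys: Iterable[str] = PATH_KEYS) -> List[str]:
    """Collect dataset paths from every node settings.xml in a KNWF archive."""
    paths: List[str] = []
    with zipfile.ZipFile(knwf_path) as zf:
        for name in zf.namelist():
            if name.endswith("/settings.xml"):
                for p in paths_from_settings(zf.read(name), keys):
                    if p not in paths:
                        paths.append(p)
    return paths
```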

Finally, an audit event built from the information extracted via the API calls is sent as XML to an ActiveMQ queue. The event contains the ID of the user who executed the workflow, the host where KNIME Server is running, the status of the workflow (success or failure), the job ID, the execution timestamp, the dataset paths accessed in nodes such as CSVReader, the error message if the execution failed, and the path within the audit folder where auditors and administrators can find more information about the job, including the KNWF file for recreating the workflow for this specific job execution:

2024-04-25 16:59:00,636 knime_audit INFO Encountered job f9ff25f9-6ac8-489b-ad8e-73c421f8b119

2024-04-25 16:59:00,637 knime_audit INFO Processing job: f9ff25f9-6ac8-489b-ad8e-73c421f8b119

2024-04-25 16:59:00,903 knime_audit INFO Storing f9ff25f9-6ac8-489b-ad8e-73c421f8b119 information in: /knime/knimerepo/audit/20240425/f9ff25f9-6ac8-489b-ad8e-73c421f8b119-20240425165900

2024-04-25 16:59:00,977 knime_audit INFO Extract knwf files into: /knime/knime_audit/temp_wf/f9ff25f9-6ac8-489b-ad8e-73c421f8b119

2024-04-25 16:59:00,980 knime_audit INFO Processing all settings.xml to extract paths

2024-04-25 16:59:00,988 knime_audit INFO Remove temp folder /knime/knime_audit/temp_wf/f9ff25f9-6ac8-489b-ad8e-73c421f8b119

2024-04-25 16:59:00,989 knime_audit INFO Send audit info for job f9ff25f9-6ac8-489b-ad8e-73c421f8b119

2024-04-25 16:59:01,003 knime_audit INFO Establishing SSL connectivity with AMQ

2024-04-25 16:59:01,019 knime_audit INFO Connection established

2024-04-25 16:59:01,033 knime_audit INFO Send audit:

<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<auditEventList xmlns="http://www.clearpeaks.com/AuditEvent">
  <AuditEvent>
    <actor>
      <id>victor</id>
      <name>victor</name>
    </actor>
    <application>
      <component>KNIME Server</component>
      <hostName>node003.clearpeaks.com</hostName>
      <name>KNIME</name>
    </application>
    <action>
      <actionType>EXECUTION_FAILED</actionType>
      <additionalInfo name="jobId">f9ff25f9-6ac8-489b-ad8e-73c421f8b119</additionalInfo>
      <additionalInfo name="errorMessage">Execute failed: Invoking Kerberos init (kinit) failed.</additionalInfo>
      <additionalInfo name="paths">/knime/data/test.csv</additionalInfo>
      <additionalInfo name="audit_path">/knime/knimerepo/audit/20240425/f9ff25f9-6ac8-489b-ad8e-73c421f8b119-20240425165900</additionalInfo>
      <timestamp>2024-04-25T16:59:00.638955+02:00</timestamp>
    </action>
  </AuditEvent>
</auditEventList>

2024-04-25 16:59:01,035 knime_audit INFO Accepted message

Figure 6: Portion of the KNIME Auditing Version 2 log during the auditing of a failed job. Note that the log shows the content of the XML sent.
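The audit event in Figure 6 can be produced with `xml.etree.ElementTree`. This sketch reproduces the element layout shown in the figure; the function name, its parameters, and the comma separator used when a job reads several datasets are assumptions.

```python
import xml.etree.ElementTree as ET
from datetime import datetime
from typing import Optional, Sequence

AUDIT_NS = "http://www.clearpeaks.com/AuditEvent"
XML_HEADER = '<?xml version="1.0" encoding="UTF-8" standalone="yes"?>\n'


# Hypothetical builder mirroring the layout of the event in Figure 6.
def build_audit_xml(user: str, host: str, state: str, job_id: str,
                    audit_path: str, timestamp: datetime,
                    paths: Sequence[str] = (), error: Optional[str] = None) -> str:
    # Plain tag names plus an explicit xmlns attribute give the default
    # namespace without touching ElementTree's global prefix registry.
    root = ET.Element("auditEventList", xmlns=AUDIT_NS)
    event = ET.SubElement(root, "AuditEvent")

    actor = ET.SubElement(event, "actor")
    ET.SubElement(actor, "id").text = user
    ET.SubElement(actor, "name").text = user

    app = ET.SubElement(event, "application")
    ET.SubElement(app, "component").text = "KNIME Server"
    ET.SubElement(app, "hostName").text = host
    ET.SubElement(app, "name").text = "KNIME"

    action = ET.SubElement(event, "action")
    ET.SubElement(action, "actionType").text = state

    def info(name: str, value: str) -> None:
        ET.SubElement(action, "additionalInfo", name=name).text = value

    info("jobId", job_id)
    if error:
        info("errorMessage", error)
    if paths:
        info("paths", ",".join(paths))  # ASSUMPTION: comma-separated
    info("audit_path", audit_path)
    ET.SubElement(action, "timestamp").text = timestamp.isoformat()
    return XML_HEADER + ET.tostring(root, encoding="unicode")
```

An aware `datetime` (with a UTC offset) yields timestamps in the `2024-04-25T16:59:00.638955+02:00` form used in the event.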

The XML is sent over AMQP 1.0 using the Python qpid-proton client (Apache Qpid Proton), which is well suited to sending messages to an ActiveMQ queue, even if the broker is configured for high availability and requires SSL for connectivity.
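With qpid-proton, a synchronous send fits naturally into the main thread. The sketch below uses Proton's `BlockingConnection`, whose sender blocks until the broker settles the message, and wraps it in a simple failover loop over a list of broker URLs to cover a high-availability setup. The function names, the CA-file handling and the failover loop are assumptions rather than the repository's implementation; Proton is imported lazily so the loop can be tested without it.

```python
from typing import Callable, List, Optional, Sequence

# Hypothetical signature: (url, address, body) -> None, raising on failure.
SendOne = Callable[[str, str, str], None]


def send_via_proton(url: str, address: str, body: str,
                    ca_file: Optional[str] = None) -> None:
    """Send one message over AMQP 1.0 and block until the broker settles it."""
    if url.startswith("amqps://") and ca_file is None:
        raise ValueError("amqps:// needs a CA file to verify the broker")
    # Imported lazily so the failover logic below works without qpid-proton.
    from proton import Message, SSLDomain
    from proton.utils import BlockingConnection

    ssl_domain = None
    if url.startswith("amqps://"):
        ssl_domain = SSLDomain(SSLDomain.MODE_CLIENT)
        ssl_domain.set_trusted_ca_db(ca_file)
        ssl_domain.set_peer_authentication(SSLDomain.VERIFY_PEER_NAME)
    conn = BlockingConnection(url, timeout=30, ssl_domain=ssl_domain)
    try:
        sender = conn.create_sender(address)
        sender.send(Message(body=body))  # raises if the broker does not accept it
    finally:
        conn.close()


def send_with_failover(broker_urls: Sequence[str], address: str, body: str,
                       send_one: SendOne = send_via_proton) -> str:
    """Try each broker in order; return the URL that accepted the message."""
    errors: List[str] = []
    for url in broker_urls:
        try:
            send_one(url, address, body)
            return url
        except Exception as exc:  # refused connection, timeout, rejection, ...
            errors.append(f"{url}: {exc}")
    raise ConnectionError("no broker accepted the audit event: " + "; ".join(errors))
```

To supply a CA file, pass `send_one=functools.partial(send_via_proton, ca_file=...)`. Trying brokers in order gives at-least-once delivery: if a broker accepts the message but the connection drops before the acknowledgement arrives, the next broker may receive a duplicate.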

KNIME Auditing Version 2 is easily configurable via a JSON configuration file. The code for this new solution, along with all necessary instructions, can be found in this GitHub repository. It is designed to run as a background Linux service on your KNIME Server machines.
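To illustrate what such a configuration might hold, the sketch below loads a JSON file into a dataclass and fails early on missing or unknown keys, which is useful for a service that runs unattended. Every field name here is hypothetical; the actual keys are defined in the authors' repository.

```python
import json
from dataclasses import MISSING, dataclass, fields
from typing import List


@dataclass(frozen=True)
class AuditConfig:
    """Hypothetical settings for the auditing service (illustrative names)."""
    knime_url: str            # e.g. "https://node003.clearpeaks.com:8443"
    tomcat_log_dir: str       # directory holding the daily Tomcat logs
    audit_root: str           # e.g. "/knime/knimerepo/audit"
    amq_urls: List[str]       # one entry per broker, tried in order
    amq_queue: str
    poll_seconds: float = 1.0


def load_config(text: str) -> AuditConfig:
    """Parse JSON text into an AuditConfig, rejecting missing or unknown keys."""
    raw = json.loads(text)
    names = {f.name for f in fields(AuditConfig)}
    unknown = sorted(set(raw) - names)
    missing = sorted(
        f.name for f in fields(AuditConfig)
        if f.default is MISSING and f.name not in raw
    )
    if unknown or missing:
        raise ValueError(f"unknown keys {unknown}, missing keys {missing}")
    cfg = AuditConfig(**raw)
    if not cfg.amq_urls:
        raise ValueError("amq_urls must list at least one broker")
    return cfg


def load_config_file(path: str) -> AuditConfig:
    with open(path, encoding="utf-8") as fh:
        return load_config(fh.read())
```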

Conclusion

In this blog post, we have presented an approach to auditing jobs executed on KNIME Server (specifically on the KNIME Executors controlled by KNIME Server) using its REST API and the KNIME Server logs. This solution replaces the previous version and is easier both to use and to maintain, whilst also avoiding flooding the audit system with numerous events by providing less, but more valuable, information. It also preserves the audit information even if jobs are deleted, and makes it possible to recreate the entire workflow with the exact configuration used during its execution.

As readers familiar with KNIME might know, any KNIME Server deployment will eventually be replaced by KNIME Business Hub. Note that the auditing solution presented here is not yet compatible with KNIME Business Hub because it relies on the KNIME Server logs, which are not present on KNIME Business Hub. However, with a few minor modifications (to adapt to KNIME Business Hub logging and its REST API), the solution should also work in that new offering.

Here at ClearPeaks we pride ourselves on our extensive experience in implementing tailored solutions that meet our customers’ exact standards and needs. Our team is adept at navigating and adapting to the ever-evolving landscape of data technologies, ensuring that our solutions remain cutting-edge and effective. Don’t hesitate to contact us if you have any questions or if you find yourselves facing a situation similar to that described in this blog post. Our experts are ready to provide the support and insights you need to achieve your data management goals!