02 Apr 2025 Achieving Peak Performance in Apache NiFi: Health Checks & Optimisation Strategies

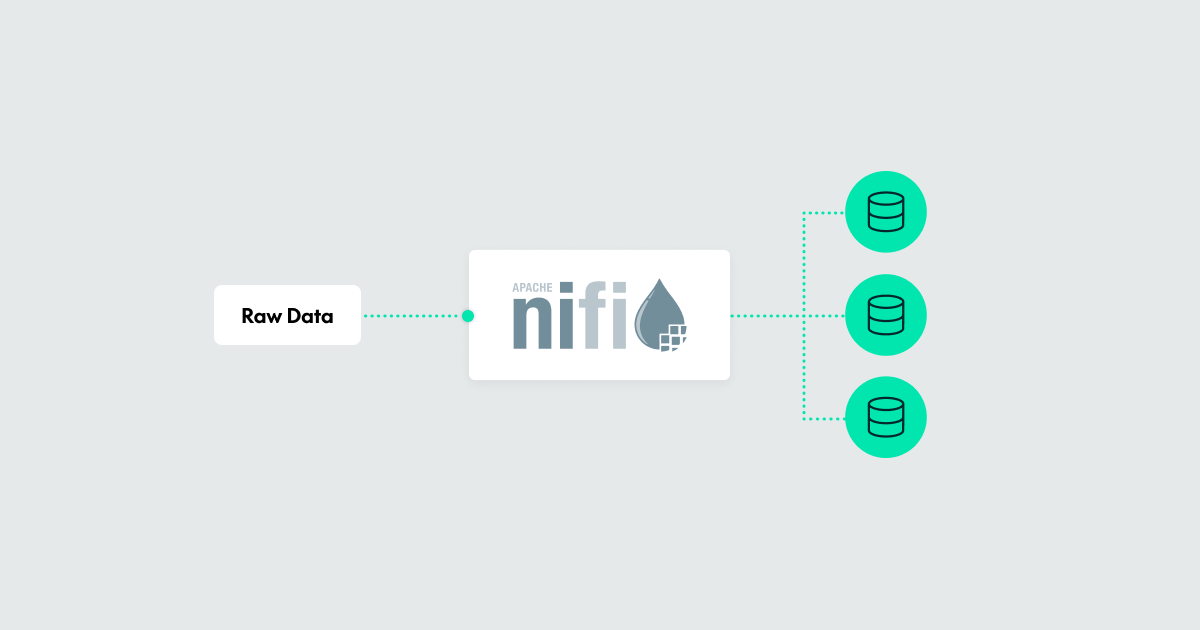

Apache NiFi has become the go-to solution for building and managing data pipelines, providing flexibility, scalability, and ease of use. In some of our previous blog posts we explored various use cases with NiFi, such as Handling Schema Drift with Apache NiFi and Using the NiFi API to Start and Stop NiFi Processors from a NiFi Flow.

Having implemented pipelines in many customers’ NiFi environments, we could easily conclude that NiFi is the best in the business when it comes to data movement tools. However, keeping it performant and healthy over the long term requires a proactive approach, and this is where many organisations end up struggling.

In this blog post, we’ll share some of the strategies we use for health checks and performance optimisation to keep NiFi deployments running reliably, whilst avoiding common errors and suboptimal designs.

Understanding NiFi’s Architecture and Resource Management

NiFi’s architecture has a direct bearing on the performance of the flows you develop. Factors such as the repository layout, the JVM configuration, the frequency at which data arrives, and the size of the FlowFiles all contribute significantly.

For instance, the performance of a NiFi pipeline that ingests large amounts of data and runs in-flow transformations depends heavily on three repositories: the FlowFile repository, which tracks the state and attributes of every FlowFile; the Content repository, which holds the actual data; and the Provenance repository, which records the history of each FlowFile. To avoid contention for disk I/O, these repositories should be placed on separate high-speed storage devices. With one of our telecom customers, isolating the repositories on dedicated SSDs eliminated I/O bottlenecks, enabling the processing of terabytes of data with minimal delays.
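As a rough health check for the layout described above, the following Python sketch reports which repository directories share a filesystem device. The function names and the example paths are our own illustrative assumptions, and the device ID identifies a filesystem, not necessarily a physical disk, so treat the result as a first hint and not as proof of isolation.

```python
import os
from collections import defaultdict


def group_by_device(paths):
    """Group repository names by the device ID of the filesystem they live on."""
    groups = defaultdict(list)
    for name, path in paths.items():
        groups[os.stat(path).st_dev].append(name)
    return dict(groups)


def shared_devices(paths):
    """Return sorted name lists for every device that hosts more than one repository."""
    return [sorted(names) for names in group_by_device(paths).values() if len(names) > 1]


# Example call with hypothetical paths (adjust to the directories in your nifi.properties):
# shared_devices({
#     "flowfile": "/data/nifi/flowfile_repository",
#     "content": "/data/nifi/content_repository",
#     "provenance": "/data/nifi/provenance_repository",
# })
```

An empty result means every repository sits on its own filesystem; any non-empty list names the repositories that compete for the same device.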

Neglecting repository maintenance is a common issue in underperforming setups. Allowing the Provenance or Content repositories to grow unchecked not only degrades performance but also increases the risk of system crashes. In some scenarios, automating repository clean-up helps to maintain consistent performance whilst complying with data retention policies.
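One simple way to spot unchecked repository growth is to confirm that retention limits are actually set. The sketch below parses the text of a `nifi.properties` file and reports four retention-related keys for the Content and Provenance repositories. The helper names are hypothetical, and the property keys should be verified against the System Administrator’s Guide for your NiFi version.

```python
RETENTION_KEYS = (
    "nifi.content.repository.archive.max.retention.period",
    "nifi.content.repository.archive.max.usage.percentage",
    "nifi.provenance.repository.max.storage.time",
    "nifi.provenance.repository.max.storage.size",
)


def parse_properties(text):
    """Parse simple key=value lines, skipping blank lines and # comments."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def retention_report(text):
    """Map each retention key to its configured value, or None if missing or empty."""
    props = parse_properties(text)
    return {key: props.get(key) or None for key in RETENTION_KEYS}
```

Any key that comes back as `None` is worth a closer look, since a missing limit is a common reason for a repository to fill its disk.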

Performance Tuning at the System Level

Proper JVM tuning is essential for stable performance. For one of our customers, increasing the heap size from 4 GB to 16 GB and enabling G1 Garbage Collection (-XX:+UseG1GC) reduced latency and ensured smooth operation under memory-intensive loads.
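NiFi reads its JVM arguments from `conf/bootstrap.conf`, where each argument is a `java.arg.N=...` line. The following sketch rewrites the `-Xms` and `-Xmx` values in the text of that file and checks whether G1 is enabled. The function names are hypothetical, and the argument indices in the comments reflect a typical stock file, which may differ in your installation.

```python
import re

# Matches uncommented heap lines such as "java.arg.2=-Xms4g" or "java.arg.3=-Xmx4g".
_HEAP_FLAG = re.compile(r"^(java\.arg\.[\w.]+=-Xm[sx])\S+[ \t]*$", re.MULTILINE)


def set_heap(bootstrap_text, size):
    """Return the bootstrap.conf text with both -Xms and -Xmx set to `size`."""
    return _HEAP_FLAG.sub(lambda match: match.group(1) + size, bootstrap_text)


def uses_g1gc(bootstrap_text):
    """True if an uncommented java.arg line enables the G1 garbage collector."""
    return any(
        line.strip().startswith("java.arg.") and line.strip().endswith("-XX:+UseG1GC")
        for line in bootstrap_text.splitlines()
    )
```

Calling `set_heap(text, "16g")` mirrors the 4 GB to 16 GB change described above. Setting the initial and maximum heap to the same value is our assumption here, not something the customer case prescribes.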

Incorrectly configured back-pressure thresholds can also lead to performance issues. By default, each connection applies back pressure at 10,000 queued FlowFiles or 1 GB of queued data, whichever is reached first. Setting thresholds too high risks exhausting system resources, whilst overly conservative limits cause unnecessary halts. Optimising these settings can allow, for example, a sales analytics pipeline to handle surges in data traffic without interruption.
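To reason about thresholds before changing them, it can help to model a connection’s queue. The sketch below is a simplified model, not NiFi’s own code: the class and method names are hypothetical, the default values mirror NiFi’s default object and size thresholds, and both thresholds are assumed to be positive.

```python
from dataclasses import dataclass


@dataclass
class Connection:
    queued_count: int                      # FlowFiles currently queued
    queued_bytes: int                      # total size of the queued content
    object_threshold: int = 10_000         # back-pressure object threshold
    size_threshold_bytes: int = 1024 ** 3  # back-pressure size threshold (1 GB)

    def back_pressure_applied(self):
        """Back pressure engages as soon as either threshold is reached."""
        return (self.queued_count >= self.object_threshold
                or self.queued_bytes >= self.size_threshold_bytes)

    def fill_ratio(self):
        """How close the queue is to back pressure, as a fraction of the nearer limit."""
        return max(self.queued_count / self.object_threshold,
                   self.queued_bytes / self.size_threshold_bytes)
```

Tracking `fill_ratio()` over time for the busiest connections gives a sense of whether a threshold is routinely hit (possibly too conservative) or never approached (possibly too generous).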

In clustered setups, distributing work evenly across nodes ensures efficient resource utilisation, whereas overloading a single node creates bottlenecks and can destabilise the entire cluster. For another customer, balancing workloads across all nodes by applying load-balancing strategies to connection queues reduced processing times during peak events.
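The difference between two common distribution strategies, round robin and partitioning by attribute, can be illustrated with a small model. This is a conceptual sketch only: FlowFiles are represented as plain dictionaries, the node names are placeholders, and the hashing scheme is our own choice and not the one NiFi uses internally.

```python
import hashlib
from itertools import cycle


def round_robin(flowfiles, nodes):
    """Hand FlowFiles to nodes in turn, giving an even spread."""
    assignment = {node: [] for node in nodes}
    for flowfile, node in zip(flowfiles, cycle(nodes)):
        assignment[node].append(flowfile)
    return assignment


def partition_by_attribute(flowfiles, nodes, attribute):
    """Send all FlowFiles with the same attribute value to the same node."""
    assignment = {node: [] for node in nodes}
    for flowfile in flowfiles:
        value = str(flowfile.get(attribute, ""))
        digest = hashlib.sha256(value.encode("utf-8")).digest()
        node = nodes[int.from_bytes(digest[:8], "big") % len(nodes)]
        assignment[node].append(flowfile)
    return assignment
```

Round robin maximises evenness, whilst partitioning keeps related data together at the cost of a possibly uneven spread when a few attribute values dominate.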

Error Handling and Monitoring

Effective error handling is key to building performant NiFi pipelines. Poor handling complicates troubleshooting and delays issue resolution, and it also hurts performance, particularly when an overlooked or poorly designed error-handling mechanism leaves FlowFiles stuck in limbo, where they continue to consume resources.

In practice, this means configuring processors to manage failures whilst maintaining a steady data flow. Key strategies include:

1. Processor-Level Error Handling

- Retry and Backoff Settings: Configure retry attempts and backoff intervals on processors so that temporary failures, such as a brief network outage, are retried instead of failing immediately.

- Failure Relationships: Use the Failure relationship to route failed FlowFiles to dedicated processors like LogAttribute (to log the FlowFile’s attributes and error details) or PutFile (to persist the failed FlowFiles to disk for later inspection). Do not leave Failure relationships unconnected.

2. Error Monitoring and Alerting

- Use the MonitorActivity processor to detect inactivity and to trigger alerts.

- Integrate with external systems, for example by sending email notifications via the PutEmail processor or by forwarding events to third-party monitoring platforms, for comprehensive alerting and error tracking.
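The retry, backoff, and failure-routing ideas from the list above can be sketched in a few lines of Python. This is a generic illustration of the pattern, not NiFi’s implementation: the function, its parameters, and the `"success"` and `"failure"` labels are our own assumptions, chosen to echo relationship names.

```python
import time


def process_with_retry(task, flowfile, max_retries=3, base_delay=1.0,
                       max_delay=30.0, sleep=time.sleep):
    """Run task(flowfile), retrying with exponential backoff before giving up.

    Returns ("success", result), or ("failure", error) once retries are exhausted,
    so the caller can route the item to a dedicated error path.
    """
    attempt = 0
    while True:
        try:
            return "success", task(flowfile)
        except Exception as error:
            if attempt >= max_retries:
                return "failure", error
            # Delay doubles on each retry (1s, 2s, 4s, ...) up to max_delay.
            sleep(min(base_delay * 2 ** attempt, max_delay))
            attempt += 1
```

The important design point is that the failure outcome is always returned to the caller and never silently dropped, which is the same reason a Failure relationship should always lead somewhere.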

We have applied numerous other error-handling techniques tailored to our customers’ policies, helping them to proactively manage failures, log errors, and maintain a NiFi data flow that is resilient and responsive to issues, without compromising performance.
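To make the inactivity-detection idea behind MonitorActivity more concrete, here is a minimal Python model of the logic. The class is hypothetical, timestamps are passed in explicitly to keep it testable, and the event labels are loosely modelled on the processor’s relationships, so check the processor documentation for the exact behaviour.

```python
class InactivityMonitor:
    """Raise one alert when no data arrives for threshold_seconds, and one on recovery."""

    def __init__(self, threshold_seconds, now):
        self.threshold_seconds = threshold_seconds
        self.last_seen = now
        self.inactive = False

    def record_activity(self, now):
        """Call whenever data passes through; reports recovery after an inactive period."""
        self.last_seen = now
        restored = self.inactive
        self.inactive = False
        return "activity.restored" if restored else None

    def check(self, now):
        """Call periodically; returns "inactive" once per quiet period, otherwise None."""
        if not self.inactive and now - self.last_seen >= self.threshold_seconds:
            self.inactive = True
            return "inactive"
        return None
```

Emitting the alert only once per quiet period avoids flooding an email inbox or monitoring platform whilst the flow remains idle.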

Designing Efficient Data Flows

Efficient flow design is a cornerstone of system performance in NiFi: a well-structured flow ensures optimal resource utilisation and facilitates troubleshooting, scaling, and flexibility. Even if your NiFi environment is well equipped and configured perfectly by the admins, badly designed flows can still degrade overall system performance.

Here are some key considerations and tips for designing high-performance NiFi flows:

- Multi-Threading in Processors: Excessive reliance on single-threaded processors in high-throughput pipelines limits parallelism. Transitioning these to multi-threaded configurations (by raising the processor’s Concurrent Tasks setting), as seen in a telecom data pipeline, improves throughput significantly without requiring additional hardware.

- Modularise Complex Workflows: Break down large, complex workflows into smaller, manageable sub-flows. This approach simplifies troubleshooting, helps to isolate performance bottlenecks, and improves scalability.

- Leverage Record-Based Processors: When dealing with structured data, it’s advisable to use record-based processors like ConvertRecord, QueryRecord, and UpdateRecord. These processors handle many records within a single FlowFile (in formats such as Avro, JSON, or Parquet) instead of one FlowFile per record, which reduces per-FlowFile overhead and provides greater flexibility in structured data processing.

- Stay Updated with the Latest Processors: NiFi frequently updates its list of processors to introduce new features and optimisations. It’s important to use the latest versions of processors like ExecuteSQL, PutDatabaseRecord, or GetFile, as they often include performance improvements, bug fixes, and support for newer data formats. In one telecom deployment, using the latest version of PutDatabaseRecord meant more efficient interactions with relational databases, leading to faster data ingestion and fewer processing errors.

- Avoid Synchronous, Long-Running Operations: NiFi is designed for high-throughput, distributed data processing. Avoid using processors like ExecuteScript or ExecuteStreamCommand for long-running operations or synchronous processes, as they can become performance bottlenecks. Where possible, use asynchronous or batch processing mechanisms, and consider offloading long-running operations to external systems or services.

- Utilise Prioritised Queues and Load Balancing: For high-volume data pipelines, prioritised queues ensure critical data is processed first. In a cluster, load balancing on connections spreads FlowFiles across nodes, which prevents individual nodes from being overloaded and maintains consistent throughput.

- Batch Processing and Partitioning: Batch processing plays a critical role in performance optimisation. In one case, increasing batch sizes for ConvertRecord processors during JSON transformations reduced data conversion times by 20%. Partitioning matters just as much, as failing to partition flows effectively can lead to congestion. Partitioning based on attributes, such as data priority or type, streamlined processing in a logistics pipeline handling millions of FlowFiles per day.
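The batching and partitioning ideas in the last item of the list above can be illustrated with a short, generic Python sketch. Records are plain dictionaries, and the function names and the `type` key used in the usage note are illustrative assumptions, not anything NiFi defines.

```python
from collections import defaultdict


def partition_by(records, key):
    """Group records by the value of one field, e.g. data type or priority."""
    groups = defaultdict(list)
    for record in records:
        groups[record.get(key)].append(record)
    return dict(groups)


def batches(records, batch_size):
    """Yield consecutive slices of at most batch_size records."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(records), batch_size):
        yield records[start:start + batch_size]
```

Partitioning first with `partition_by(records, "type")` and then batching each group means every batch is homogeneous, so one slow category cannot hold up the others, and each downstream step handles many records per invocation.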

By following these design principles (and many more we haven’t detailed here), our customers’ NiFi flows have become more efficient, maintainable, and scalable, ensuring uniform data processing even as workloads and data sources grow and evolve.

Conclusion

Implementing these best practices and avoiding imperfect designs leads to resilient NiFi deployments capable of scaling according to evolving business needs. Regular health checks, processor optimisation, and proactive maintenance form the basis of a reliable system. By employing these practices and others, we have helped our customers both to stabilise their NiFi environments and to get optimal performance for high-throughput, resilient flows.

If you’d like further information about our health check programme and how to optimise your NiFi or Cloudera Data Flow environment, our expert consultants here at ClearPeaks will be happy to help you. Simply get in touch today!